[AINews] Creating a LLM-as-a-Judge • Buttondown

Chapters

Creating a LLM-as-a-Judge

AI Discord Recap

AI Community Discussions

HuggingFace Today I'm Learning

HuggingFace NLP Course Highlights

Discussion on Various AI Tools and Applications

Conversations on Various AI Topics

Interconnects with Nathan Lambert

ThunderKittens Update and Livestream Highlights

Mojo and Modular Discussions

Technical Challenges and Discussions

Creating a LLM-as-a-Judge

In this section, Hamel Husain describes how to create an LLM-as-a-Judge that aligns with domain experts through a technique called Critique Shadowing. The workflow is: find a principal domain expert, create a dataset, have the expert review the data and write critiques, fix errors, build the LLM judge, perform error analysis, and create specialized judges as needed. The process is iterative and depends on detailed critiques and close involvement from the domain expert, an approach that is also reflected in how AINews itself is built.
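A minimal sketch of the measurement step at the heart of this workflow, assuming the expert's verdicts are binary pass/fail labels with a written critique, and that an LLM judge (not shown; any model call could produce it) has already returned its own verdict for the same examples. The names `Example`, `agreement_rate`, `disagreements`, and `few_shot_block` are hypothetical. The idea illustrated: the judge is trusted only once its verdicts track the principal expert's, and the disagreements feed the next round of error analysis.

```python
from dataclasses import dataclass


@dataclass
class Example:
    """One expert-reviewed interaction (hypothetical schema)."""
    input_text: str
    expert_pass: bool      # principal domain expert's verdict
    expert_critique: str   # the expert's written reasoning
    judge_pass: bool       # verdict produced by the LLM judge


def agreement_rate(examples: list[Example]) -> float:
    """Fraction of examples where the judge's verdict matches the expert's."""
    if not examples:
        raise ValueError("need at least one example")
    matches = sum(ex.expert_pass == ex.judge_pass for ex in examples)
    return matches / len(examples)


def disagreements(examples: list[Example]) -> list[Example]:
    """Examples where judge and expert differ; these drive error analysis."""
    return [ex for ex in examples if ex.expert_pass != ex.judge_pass]


def few_shot_block(examples: list[Example], k: int = 3) -> str:
    """Format up to k expert critiques as few-shot examples for the judge prompt."""
    parts = []
    for ex in examples[:k]:
        verdict = "PASS" if ex.expert_pass else "FAIL"
        parts.append(
            f"Input: {ex.input_text}\nCritique: {ex.expert_critique}\nVerdict: {verdict}"
        )
    return "\n\n".join(parts)
```

For example, if the judge agrees with the expert on a refund request but passes a billing dispute the expert failed, `agreement_rate` returns 0.5 and `disagreements` surfaces the billing dispute for review.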

AI Discord Recap

Theme 1: Apple's M4 Chips Supercharge AI Performance

- Users discuss how LM Studio performs on Apple's new M4-powered MacBook Pro, with an M3 Max machine reportedly running models at 60 tokens per second.

- Rumors circulate about the M4 Ultra outshining NVIDIA's 4090 GPUs.

Theme 2: AI Models Stir Up the Community with Updates and Controversies

- Anticipation builds for the Haiku 3.5 release, and Gemini's performance is praised.

- Microsoft's Phi-3.5 model is mocked for being overly cautious.

Theme 3: Fine-Tuning and Training Hurdles Challenge AI Developers

- The Unsloth team's insights on gradient issues and Apple's compact new Mac Mini are discussed.

- ThunderKittens introduces new features, while users run into fine-tuning challenges and VRAM efficiency concerns.

Theme 4: AI Disrupts Software Engineering and Automation Tools Flourish

- AI's impact on software engineering jobs is debated, and Skyvern automates browser tasks.

- Discussion revolves around ThunderKittens' new features and challenges like quantization mishaps.

Theme 5: OpenAI Tackles Factuality and Enhances User Experience

- Members debate achieving AGI, model efficiency, and prompt generation tools.

- ChatGPT introduces a chat history search feature, and OpenAI's SimpleQA benchmark aims to combat hallucinations by measuring factuality.

AI Community Discussions

Haiku 3.5 Release

- Users anticipate the release of Haiku 3.5 with expected enhancements over previous versions. Notable comparisons include Qodo AI vs. Cline for usability and features.

- Skyvern uses AI to automate repetitive browser workflows across web pages.

- Feedback on Gemini's coding ability highlights its proficiency with database-related tasks compared to Claude and Aider, though its performance fluctuates depending on context.

OpenRouter Discord

- Discussion covers OAuth authentication issues and fixes, alpha testing for a macOS chat app, security concerns around API keys, and excitement over the Haiku 3.5 release.

- Requests for access to integration features indicate strong user demand.
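The recap does not describe how OpenRouter's OAuth flow works internally. Assuming a standard OAuth 2.0 authorization-code flow with PKCE (RFC 7636), which is a common choice for apps that cannot keep a client secret, the client-side step that is easiest to get wrong is deriving the `S256` code challenge from the code verifier. A minimal standard-library sketch; the function names are hypothetical:

```python
import base64
import hashlib
import secrets


def make_code_verifier(num_bytes: int = 32) -> str:
    """Random URL-safe verifier; RFC 7636 requires 43 to 128 characters.

    token_urlsafe(32) encodes 32 random bytes as unpadded base64url (43 chars).
    """
    return secrets.token_urlsafe(num_bytes)


def s256_code_challenge(verifier: str) -> str:
    """BASE64URL(SHA256(ASCII(verifier))) with the '=' padding removed."""
    digest = hashlib.sha256(verifier.encode("ascii")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
```

The verifier stays on the client until the token exchange, while only the challenge goes into the authorization URL. Whatever API key the exchange returns should likewise stay out of source code, for example by reading it from an environment variable.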

Stability.ai Discord

- Members analyze GPU price variances, discuss training for custom styles, troubleshoot grey images in Stable Diffusion, and compare UI options.

- Anticipation surrounds upcoming model releases, and members compare SD 3.5 with SDXL, focusing on performance improvements.

Nous Research AI Discord

- Discussion covers Microsoft's strategy for reducing its dependence on OpenAI, reported AI latency issues, and the surprisingly strong performance of the Hermes 3 8B model relative to Mistral 7B.

- Members look for Spanish function-calling datasets for journalism use cases and discuss Sundar Pichai's comments on AI's role at Google.

Eleuther Discord

- Members discuss running multiple instances of GPT-NeoX, challenges with RAG and CSV data, entity extraction tuning, optimizations in LLMs, and limitations of diffusion models compared to GANs.
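One common workaround for RAG over tabular data (a general technique, not necessarily the one suggested in the thread) is to turn each CSV row into a self-describing text chunk that repeats the column names, so a retrieved row still makes sense out of context. A minimal standard-library sketch; `rows_to_chunks` is a hypothetical helper:

```python
import csv
import io


def rows_to_chunks(csv_text: str) -> list[str]:
    """Render each CSV row as 'column: value' pairs so it can be embedded alone."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return ["; ".join(f"{col}: {val}" for col, val in row.items()) for row in reader]
```

For example, `rows_to_chunks("name,price\nwidget,3\n")` yields `["name: widget; price: 3"]`, a string that an embedding model can match against a question about the widget's price.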

Interconnects Discord

- Topics include Elon Musk's talks to raise xAI's valuation, insights on the Claude 3 tokenizer, AI2's new office location, and reactions to high MacBook Pro prices.

- Voiceovers for personal articles reflect a shift toward audio for content delivery.

Notebook LM Discord

- An invitation to a usability study, advances with Simli avatars in podcasts, and the use of Pictory to create podcast videos.

- Users report challenges generating Spanish podcasts and share techniques for splitting voices in podcasts.

GPU MODE Discord

- Topics include AI's impact on software engineering roles, growing interest in deep tech, API deprecation warnings, and memory profiling in Rust applications.

- Discussions cover the ThunderKittens talk and community bonding.

Torchtune Discord

- Training issues with Llama 3.2 QLoRA, challenges in quantization layers, activation checkpointing impacts, and proposals for dynamic cache resizing.

- Implementation of multi-query attention and discussions on exporting models to ONNX for lower-end hardware optimization.
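The recap only notes that multi-query attention (MQA) was being implemented. What distinguishes it: all query heads share a single key/value head, so the KV cache is smaller than in standard multi-head attention by a factor equal to the number of heads. A minimal NumPy sketch of the forward pass (no masking, no batching, weights passed as plain arrays); it is illustrative and not torchtune's implementation:

```python
import numpy as np


def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_query_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq, d_model); w_q, w_o: (d_model, d_model); w_k, w_v: (d_model, head_dim)."""
    seq_len, d_model = x.shape
    head_dim = d_model // num_heads
    # Queries get a separate slice of the projection per head: (heads, seq, head_dim).
    q = (x @ w_q).reshape(seq_len, num_heads, head_dim).transpose(1, 0, 2)
    # Keys and values use ONE shared head: (seq, head_dim) each.
    k = x @ w_k
    v = x @ w_v
    # Matmul broadcasts the shared K over all query heads: (heads, seq, seq).
    scores = q @ k.T / np.sqrt(head_dim)
    out = softmax(scores) @ v  # (heads, seq, head_dim)
    # Concatenate heads back to (seq, d_model) and apply the output projection.
    out = out.transpose(1, 0, 2).reshape(seq_len, d_model)
    return out @ w_o
```

Only `k` and `v` (one head each) would need to be cached during generation, which is where the memory saving comes from.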

Cohere Discord

- Dr. Vyas's upcoming talk on the SOAP optimizer, concerns about the Command R model's detectability, and questions about invite responses.

- Comparisons between Embed V3, ColPali, and JINA CLIP, and guidance on seeking help for account issues.
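Part of what makes this comparison interesting is that the models score relevance differently. Single-vector embedders such as Embed V3 and JINA CLIP compare one vector per query with one vector per document, while ColPali produces many vectors per query and per page image and scores them with ColBERT-style late interaction (MaxSim). A toy plain-Python sketch of both scoring rules, using made-up vectors:

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Single-vector relevance: cosine similarity of two embeddings."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def maxsim(query_vecs: list[list[float]], doc_vecs: list[list[float]]) -> float:
    """Late interaction: each query vector keeps its best dot product against
    any document vector, and those per-query-vector maxima are summed."""
    return sum(
        max(sum(qi * di for qi, di in zip(q, d)) for d in doc_vecs)
        for q in query_vecs
    )
```

With query vectors `[[1, 0], [0, 1]]` and document vectors `[[1, 0], [0, 0.5]]`, `maxsim` returns 1 + 0.5 = 1.5: each query token is matched to whichever document patch suits it best, which a single pooled vector cannot express.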

Latent Space Discord

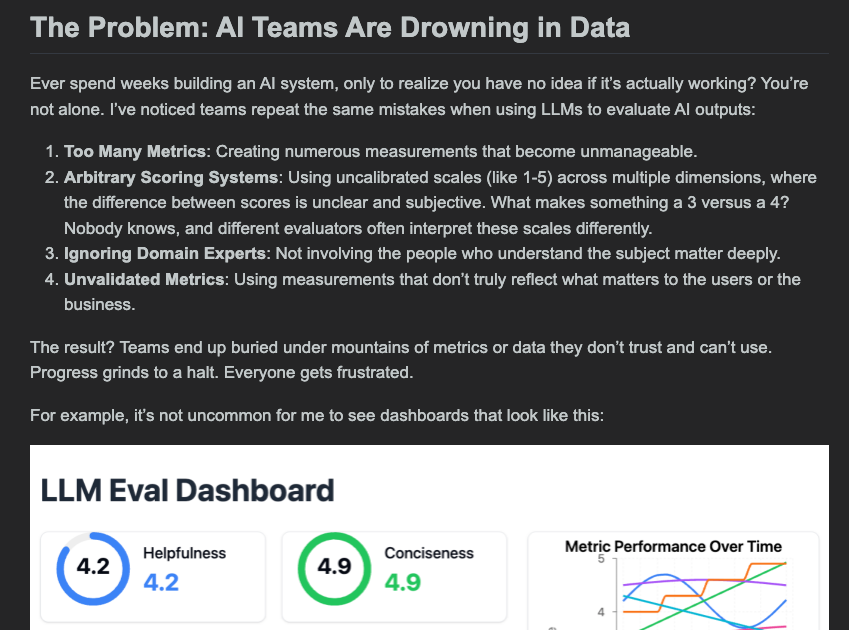

- Browserbase's funding announcement, ChatGPT's new feature for chat history search, and insights on LLM evaluation traps.

- OpenAI's Realtime API updates, launch of SimpleQA benchmark, and focus on combating hallucinations in AI outputs.
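SimpleQA grades each answer as correct, incorrect, or not attempted, so a model can score better by declining to answer when unsure instead of hallucinating. The sketch below computes summary metrics from those three counts, assuming the definitions in OpenAI's SimpleQA write-up (overall correct, correct given attempted, and an F-score that is their harmonic mean). The counts in the example are invented:

```python
def simpleqa_metrics(correct: int, incorrect: int, not_attempted: int) -> dict[str, float]:
    """Summary metrics from per-question grades (correct / incorrect / not attempted)."""
    total = correct + incorrect + not_attempted
    if total == 0:
        raise ValueError("no graded questions")
    attempted = correct + incorrect
    overall_correct = correct / total
    correct_given_attempted = correct / attempted if attempted else 0.0
    denom = overall_correct + correct_given_attempted
    # F-score: harmonic mean of the two accuracy views.
    f_score = 2 * overall_correct * correct_given_attempted / denom if denom else 0.0
    return {
        "overall_correct": overall_correct,
        "correct_given_attempted": correct_given_attempted,
        "f_score": f_score,
    }
```

A model that answers 40 questions correctly, 10 incorrectly, and abstains on 50 gets an F-score of about 0.53, higher than a model that attempts all 100 and gets 50 right (0.5). That gap is the incentive against guessing.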

Tinygrad Discord

- Discussions on Tinycorp's Ethos NPU stance, management of long training jobs on Tinybox, and unique building blocks in the Qwen2 model.

- Reports of EfficientNet issues, exporting models to ONNX debates, and concerns over output configurations.

Modular Discord

- Evolving idioms in the Mojo language, the scarcity of resources for learning linear algebra, and ambitions for compatibility with C++.

- Syntax debates and plans for custom decorators in Mojo to enhance functionality.

LlamaIndex Discord

- The launch of the create-llama app for rapid LlamaIndex app development, tools from ToolhouseAI billed as game-changers, and demonstrations of multi-agent query pipelines.

- Insights on integrating RAG with Text-to-SQL, and on using LlamaIndex to automate SQL generation for better user interaction.
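One practical detail when automating SQL generation is that model-written queries should be checked before they touch a real database. A minimal sketch with the standard-library `sqlite3` module, where `generate_sql` is a hypothetical stand-in for whatever LLM call (LlamaIndex or otherwise) produces the query, and `run_readonly` is a deliberately conservative guard:

```python
import sqlite3


def generate_sql(question: str) -> str:
    """Hypothetical placeholder for an LLM call that turns a question into SQL."""
    return "SELECT name, population FROM cities ORDER BY population DESC LIMIT 1"


def run_readonly(conn: sqlite3.Connection, sql: str) -> list[tuple]:
    """Execute a single SELECT statement and reject anything else.

    Conservative by design: any remaining ';' or a non-SELECT start is refused,
    even if the query would have been harmless (e.g. a WITH clause).
    """
    stripped = sql.strip().rstrip(";").strip()
    if ";" in stripped or not stripped.lower().startswith("select"):
        raise ValueError(f"refusing to run non-SELECT SQL: {sql!r}")
    return conn.execute(stripped).fetchall()
```

A string check is only a first line of defense. For a file-backed database, opening it read-only with `sqlite3.connect("file:app.db?mode=ro", uri=True)` (the filename here is illustrative) lets SQLite itself refuse writes.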

HuggingFace Today I'm Learning

Llama-3.1 70B compatibility:

- Members questioned whether a typical PC can handle Llama-3.1 70B, highlighting the need for parallel computing.
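A back-of-the-envelope calculation shows why a 70B-parameter model strains a single PC. The weights alone take roughly parameters × bits per parameter / 8 bytes, before counting the KV cache and activations. A small helper (decimal gigabytes, weights only; the function name is illustrative):

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal GB (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


for bits in (16, 8, 4):
    print(f"70B @ {bits}-bit: ~{weight_memory_gb(70e9, bits):.0f} GB")
```

At 16-bit precision this comes to about 140 GB. Even 4-bit quantization (about 35 GB) exceeds a single 24 GB consumer GPU, which is why splitting the model across devices comes up.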

Fine-tuning datasets on Hugging Face:

- Members discussed finding dedicated spaces for fine-tuning tasks and stressed the importance of not cross-posting.

Adding datasets for fine-tuning on Hugging Face:

- A Hugging Face course on dataset preparation was shared to help members get started with fine-tuning.

LLM sizing for general Q&A and sentiment analysis:

- Members discussed the difficulty of choosing an LLM for general Q&A and sentiment analysis under hardware limitations, including how to make the most of limited laptop resources.

HuggingFace NLP Course Highlights

This section summarizes topics from the HuggingFace NLP Course discussions, including Latent Space Regularization, Computational Modeling Guidelines, Turing's contributions, and analysis of Congressional discourse using Nomic Atlas, with resources aimed at researchers and policy analysts. It also covers improvements to the Transformers tokenizer, Docker deployment with HF Chat UI, and Dstack configuration for Docker and Compose.

Discussion on Various AI Tools and Applications

This section covers conversations about a range of AI tools and applications. Members discuss how to organize files for custom GPTs, noting that well-managed data improves performance and keeps chatbots from wasting tokens. Other threads explore applying RAG to scientific papers, choosing what to compile for a Cave Johnson AI, the implications of stochasticity for AI behavior, and how to access a prompt generation tool for writing effective prompts in the OpenAI Playground.

Perplexity launched Perplexity Supply, which ships to multiple countries and invites sign-ups for updates. Other channels report file upload issues, problems with Pro subscription promo codes, changes to the Spaces and Collections features, and the launch of NFL widgets, and members compare Perplexity with Consensus for medical research. The section also outlines new commands in Aider, anticipation for the Haiku 3.5 release, and comparisons between Qodo AI and Cline. Finally, OpenRouter announcements address OAuth disruptions and call for alpha testers for a flexible macOS chat app.

Conversations on Various AI Topics

This section covers discussions across several communities, from requests for integration features to GPU market insights, model training, Stable Diffusion issues, and UI preferences. Other threads address Microsoft's efforts to reduce its dependence on OpenAI, concerns over AI latency, the deployment of autonomous Twitter agents, the effectiveness of Hermes 3, and the data privacy policies of API-based AIs. Research discussions cover modular duality in optimization, training diffusion models and their limitations, operator norms in neural networks, and interpretability topics including Winogrande and evaluation metrics. Members also highlight the new sae_dashboard tool, advances in generating synthetic data, and the Chatbot Arena's Creative Writing category. Finally, updates cover Elon Musk's xAI funding talks, Cursor premium, and Robonato's embodiment needs.
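To make the operator-norm thread concrete: the operator norm a weight matrix induces on Euclidean vectors is its spectral norm, the largest singular value. It bounds how much the layer can stretch any input. Power iteration estimates it cheaply. A NumPy sketch, offered as a general illustration rather than the specific method discussed:

```python
import numpy as np


def spectral_norm(w: np.ndarray, iters: int = 100, seed: int = 0) -> float:
    """Estimate the largest singular value of w by power iteration on w.T @ w."""
    rng = np.random.default_rng(seed)
    v = rng.standard_normal(w.shape[1])
    v /= np.linalg.norm(v)
    for _ in range(iters):
        u = w @ v              # map into the output space
        u /= np.linalg.norm(u)
        v = w.T @ u            # map back; v converges to the top right singular vector
        v /= np.linalg.norm(v)
    return float(np.linalg.norm(w @ v))  # ||W v|| for unit v approximates sigma_max
```

Each iteration costs only two matrix-vector products, which is why this estimate is common for spectral normalization. A full SVD gives the exact value at a much higher cost.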

Interconnects with Nathan Lambert

This section covers insights on the Claude 3 tokenizer, AI2's new office location, MacBook Pro pricing, and critiques of AI2's video. Members express enthusiasm for the Pacific Northwest scenery and discuss living near scenic views. It also mentions a usability study for NotebookLM, including remote chat opportunities and key study details, along with NotebookLM Discord topics such as podcast generation limits, language-switching issues, and audio segmentation techniques. In the GPU MODE Discord, channels including torch, torchao, and rocm discuss FSDP2 API updates, memory profiling, ROCm version concerns, and sparsity-pruning techniques.

ThunderKittens Update and Livestream Highlights

A user plans to schedule a talk about ThunderKittens to discuss its features and gather feedback. A livestream titled 'CUDA + ThunderKittens, but increasingly drunk' showcased CUDA content, and viewers were told about a minor screen-sharing hiccup. ThunderKittens aims to offer a higher level of abstraction than libraries like Cutlass while being easier to use than compiler-based solutions like Triton, and it still lets users write custom CUDA code when needed. The conversation emphasized the trade-off between power and ease of use in compiler-based solutions versus libraries, and Mamba-2 and H100 kernel examples were used to show ThunderKittens' flexibility, extensibility, and precision.
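ThunderKittens' central idea is programming in terms of small tiles of data rather than individual elements. As a rough CPU-only analogy (this is NumPy, not the ThunderKittens CUDA API), a matrix multiply can be written so that every step loads and multiplies fixed-size tiles. The 16×16 tile size is an assumption chosen to mirror the small tiles that tensor-core hardware operates on:

```python
import numpy as np

TILE = 16  # illustrative tile size


def tiled_matmul(a: np.ndarray, b: np.ndarray, tile: int = TILE) -> np.ndarray:
    """C = A @ B computed one (tile x tile) output block at a time.

    To keep the sketch short, every dimension must be a multiple of `tile`.
    """
    m, k = a.shape
    k2, n = b.shape
    if k != k2 or m % tile or n % tile or k % tile:
        raise ValueError("shapes must match and be multiples of the tile size")
    c = np.zeros((m, n), dtype=np.result_type(a, b))
    for i in range(0, m, tile):
        for j in range(0, n, tile):
            acc = np.zeros((tile, tile), dtype=c.dtype)  # per-tile accumulator
            for p in range(0, k, tile):
                # Load one tile of A and one tile of B, then multiply-accumulate.
                acc += a[i:i + tile, p:p + tile] @ b[p:p + tile, j:j + tile]
            c[i:i + tile, j:j + tile] = acc
    return c
```

On a GPU, each output tile would map to a block of threads and the tile loads to shared memory. A tile-level library aims to make that mapping explicit without requiring per-thread indexing.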

Mojo and Modular Discussions

This section covers Mojo and Modular discussions: evolving idioms in Mojo, the challenges of learning linear algebra, model export strategies, the implications of test-time training, and potential compatibility between Mojo and C++. Members also debate C++ macros, custom decorators in Mojo, and alternative preprocessing options, and some are exploring tools like GPP to improve the development experience.

Technical Challenges and Discussions

- LoRA Finetuning Remains Unresolved: Members discuss challenges with LoRA finetuning on H100 GPUs, mentioning QLoRA as a potential workaround.

- Quantization Challenges Persist: Ongoing issues with BitsAndBytes 8-bit quantization on Hopper GPUs are raised.

- Clamping Issue in Image Decoding: Concerns about out-of-range values affecting image quality are highlighted.

- LLM Agents Quizzes and Hackathon Announcements: Details are shared about the quizzes, the hackathon, course sign-up, and how to join course discussions.

- Transformer Labs Event: Event details for training RAG on LLMs and Lumigator tech talk are provided.

- Performance Comparison in Function Calling Models: Members discuss the underperformance of Llama-3.1-8B-Instruct (FC) relative to Llama-3.1-8B-Instruct (Prompting).
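For readers less familiar with the finetuning techniques named above: LoRA freezes the pretrained weight W and learns a low-rank update, so the adapted layer computes y = x W^T + (alpha / r) * x A^T B^T, with A of shape (r, d_in) and B of shape (d_out, r). QLoRA keeps the same adapters but stores the frozen W in 4-bit precision. A NumPy sketch of the forward pass; the dimensions, `alpha`, and the class name are illustrative:

```python
import numpy as np


class LoRALinear:
    """Frozen weight W plus a trainable low-rank update (alpha / r) * B @ A."""

    def __init__(self, weight: np.ndarray, r: int = 4, alpha: float = 8.0, seed: int = 0):
        d_out, d_in = weight.shape
        rng = np.random.default_rng(seed)
        self.weight = weight                             # frozen, shape (d_out, d_in)
        self.a = rng.standard_normal((r, d_in)) * 0.01   # trainable, small random init
        self.b = np.zeros((d_out, r))                    # trainable, zero init: starts as W
        self.scale = alpha / r

    def __call__(self, x: np.ndarray) -> np.ndarray:
        # x: (batch, d_in) -> (batch, d_out); the low-rank path never forms B @ A.
        return x @ self.weight.T + self.scale * (x @ self.a.T) @ self.b.T

    def merged_weight(self) -> np.ndarray:
        """Fold the adapter into W for inference: W + (alpha / r) * B @ A."""
        return self.weight + self.scale * self.b @ self.a
```

Because B starts at zero, the adapted layer initially reproduces the frozen layer exactly, and only r * (d_in + d_out) parameters are trained.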

FAQ

Q: What process does Hamel Husain describe for creating an LLM-as-a-Judge?

A: The process involves aligning with domain experts through a technique called Critique Shadowing. It includes finding a principal domain expert, creating a dataset, having the expert review the data and provide critiques, fixing errors, building the LLM Judge, performing error analysis, and creating specialized judges as needed.

Q: What are the key themes discussed in relation to AI developments and models?

A: Some key themes include Apple's M4 chips supercharging AI performance, updates and controversies surrounding AI models, challenges faced in fine-tuning and training AI models, and the disruption of software engineering by AI.

Q: What are some of the advancements and challenges discussed in the NLP and AI communities?

A: Topics include Latent Space Regularization, Computational Modeling Guidelines, Turing's contributions, Congressional discourse analysis using Nomic Atlas, improvements to the Transformers tokenizer, Docker deployment with HF Chat UI, and Dstack configuration for Docker and Compose.

Q: What are some of the discussions focused on in the various AI-related Discord channels?

A: Discussions cover topics such as AI ethics, market insights on GPUs, training models, Stable Diffusion issues, UI preferences, the implications of stochasticity for AI behavior, prompt generation tools, deployment of Twitter agents, modular duality in optimization, training diffusion models, operator norms in neural networks, and more.

Q: What specific challenges and advancements were mentioned in the Torchtune Discord channel?

A: Challenges discussed include training issues with Llama 3.2 QLoRA, challenges in quantization layers, activation checkpointing impacts, proposals for dynamic cache resizing, implementation of multi-query attention, and exporting models to ONNX for lower-end hardware optimization.

Q: What are some of the topics covered in the Eleuther Discord channel?

A: Topics include running multiple instances of GPT-NeoX, challenges with RAG and CSV data, entity extraction tuning, optimizations in LLMs, and limitations of diffusion models compared to GANs.

Q: What discussions take place in the Interconnects Discord channel?

A: Discussions include Elon Musk's talks to boost xAI valuation, insights on Claude 3 tokenizer, AI2's new office location, reactions to high MacBook Pro prices, and a shift towards audio elements for enhanced content delivery.

Q: What topics are discussed in the Tinygrad Discord channel?

A: Topics include Tinycorp's Ethos NPU stance, management of long training jobs on Tinybox, unique building blocks in the Qwen2 model, reports of EfficientNet issues, debates about exporting models to ONNX, and concerns over output configurations.

Q: What programming language discussions are highlighted in the Modular Discord channel?

A: Discussions focus on evolving idioms in Mojo, challenges in learning linear algebra, model export strategies, compatibility of Mojo with C++, debate on C++ macros, custom decorators in Mojo, and alternative preprocessing options.
