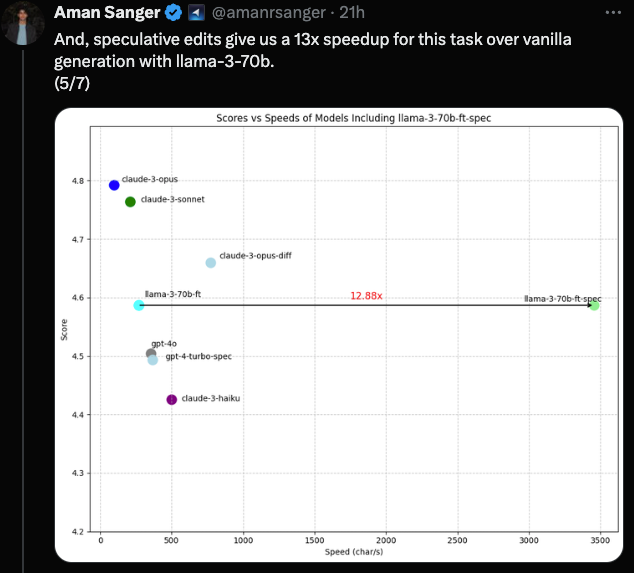

[AINews] Cursor reaches >1000 tok/s finetuning Llama3-70b for fast file editing

Chapters

AI Discord Recap

Innovations in AI Technologies and Community Discussions

Discord Community Highlights

Cohere Discord

Topics Discussed in OpenAI Community Channels

Curiosity-driven Exploration and AI Applications

Nous Research AI Updates

Mojo SDK Discussion

Interesting Discourse on GPU Programming

New Video Generation Dataset and AI Multimodal Models

Interconnects and LangChain AI Updates

AI Collective Discussions

Future Hardware Predictions and Industry Updates

AI Discord Recap

The AI Discord Recap summarizes discussions across AI-focused Discord channels. Topics include the buzz and criticism surrounding GPT-4o, advances in quantization and optimization techniques, newly unveiled benchmarks, datasets, and models, and the technical discussions and tutorials that members shared.

Innovations in AI Technologies and Community Discussions

This section highlights advancements in AI technologies and ongoing discussions across Discord communities, including the release of models such as GPT-4o, Viking 7B, and Hermes 2 Θ. Members debated AI transparency, model performance, and the impact of AI on future work and emotional design. In the Modular and CUDA MODE Discords, members explored advancements in Mojo's portability and improvements in CUDA stream handling, and engineers discussed CUDA challenges and solutions involving tensor operations, compiler issues, and performance optimization techniques. The section also covers Perplexity AI's performance, HuggingFace models, and discussions on model building, voice assistants, and API refinements.

Discord Community Highlights

This section highlights various discussions and developments from different Discord communities related to AI and machine learning. It includes updates on the energy demands of AI models, advancements in multimodal models like GPT-4o, breakthroughs in video dataset creation, challenges and solutions faced by developers in using different AI tools, as well as licensing debates and technical discussions on model optimizations and AI model representations. The content also touches on community events, product launches, and ongoing research initiatives, showcasing the dynamic and collaborative nature of these AI communities.

Cohere Discord

Users in the Cohere Discord channel discussed the success of the rerank-multilingual-v3.0 model and expressed a desire for a feature like ColBERT to highlight key words. The community also sought advice on a PHP client for Cohere. Another topic of interest was the scalability of Cohere's application toolkit and the performance of its reranking model compared to other open-source alternatives.

Topics Discussed in OpenAI Community Channels

The OpenAI community channels covered a wide range of topics. Users compared GPUs such as the 4060 Ti and 4070 Ti for gaming and AI tasks, shared a benchmark website for evaluating GPU performance, discussed API alternatives like Invoke and Forge, and expressed frustrations about economic inequality. They also explored the concept of hyperstition in AI.

GPT-4o was a recurring subject: users debated its performance and its vision analysis capabilities, asked about its accessibility and functionality issues, discussed custom GPTs and voice features, and raised concerns about technical glitches.

On prompting, members explored the effectiveness of various prompt strategies for GPT models, the AI's occasional production of incorrect data, and character role-playing for enhanced interaction. They also discussed the difficulty of maintaining prompt fidelity and practical concerns about API usage limits.

The community also debated the impact of AI on the job market, with some members predicting new job opportunities and others expressing concerns about mass unemployment. The balance between creating relatable AI and efficient, emotionless assistants was a key point of debate, alongside concerns about AI's emotional realism.

Curiosity-driven Exploration and AI Applications

In the HuggingFace community, discussions centered on encouraging exploration in reinforcement learning through epsilon-greedy policies, curiosity-driven exploration, and choosing actions that lead to unusual situations. Members shared papers and models focusing on joint language modeling for speech and text, the PaliGemma Vision Language Model, the Veo Video Generation Model, and more. The section also highlighted the release of Hermes 2 Θ, a model merging Hermes 2 Pro and Llama-3 Instruct, and advancements in Nordic Language Models and AI tools like SUPRA for uptraining transformers.
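For readers unfamiliar with the epsilon-greedy policy mentioned above, here is a minimal sketch of the standard technique. The function name, the `q_values` list, and the `rng` parameter are illustrative assumptions and do not come from the Discord discussion.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index: random with probability epsilon, otherwise greedy.

    q_values is a hypothetical list of estimated action values.
    """
    if rng.random() < epsilon:
        # Explore: choose any action uniformly at random.
        return rng.randrange(len(q_values))
    # Exploit: choose the highest-valued action (ties go to the lowest index).
    return max(range(len(q_values)), key=lambda a: q_values[a])


q = [0.1, 0.5, 0.2]
greedy_action = epsilon_greedy(q, epsilon=0.0)  # always exploits
random_action = epsilon_greedy(q, epsilon=1.0, rng=random.Random(0))  # always explores
```

With `epsilon=0.0` the policy is purely greedy, and with `epsilon=1.0` it is purely random. Curiosity-driven methods typically replace the uniform random step with a bonus for novel states, which this sketch does not attempt.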

Nous Research AI Updates

The section covers various updates from the Nous Research AI community, including the release of the Hermes 2 Θ model surpassing benchmarks, challenges with GPT-4 variants in reasoning tasks, and concerns about GPT-4o's generic output structure. Discussions also revolve around experimenting with self-merging Hermes for a new model, issues with model inference triggering Chinese text, and users expressing disappointment in the performance of large language models. Meta's ImageBind multimodal AI model release and the substantial resources required to build LLMs from scratch are also highlighted. The section also delves into discussions about Hermes 2 Theta's math performance, challenges faced in building Imatrix for large models, model recommendations for coding tasks, and strategies for handling long contexts and controlling model outputs for coding purposes.

Mojo SDK Discussion

The Mojo SDK discussion covered recommended resources for learning Mojo, optimism about Mojo's future, the flexibility of Mojo compared to CUDA (particularly its GPU flexibility), concerns and debates around Modular's open-source commitment, and community project suggestions. Members emphasized the importance and potential of the SDK and pointed to learning opportunities such as the Mandelbrot tutorial and community contributions. They also proposed ideas for both beginner-friendly and advanced learning projects within the Mojo community.

Interesting Discourse on GPU Programming

CUDA MODE ▷ #off-topic (3 messages):

- A member shared a link to the GPU Programming Guide, offering insights for GPU programming enthusiasts.

- Another member shared a Twitter link without discussing its content.

CUDA MODE ▷ #triton-puzzles (1 message):

- A user shared their experience with the CUDA puzzle repository, encountering a floating-point overflow error when initializing float arrays.

- They sought help understanding the discrepancy in results between their naive and reduction implementations.

CUDA MODE ▷ #llmdotc (141 messages🔥🔥):

- Discussions included wiping dependencies for code fixes, tracking CUDA streams, and proposing NVMe GPU DMA usage.

- Notable Pull Requests aimed to improve gradient accumulation and remove parallel CUDA streams.

CUDA MODE ▷ #bitnet (12 messages🔥):

- Members discussed the potential of bitnet 1.58, challenges in its quantization, and proposals for centralizing the bitnet work.

- Existing solutions like HQQ and BitBLAS were highlighted for addressing quantization challenges.
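To illustrate what ternary quantization in the bitnet 1.58 discussion refers to, the following is a rough sketch of absmean ternary quantization as it is commonly described for BitNet b1.58. It is an assumption that this matches what the channel had in mind. The function names are hypothetical, and this is not the HQQ or BitBLAS API.

```python
def absmean_ternary_quantize(weights, eps=1e-8):
    """Quantize a flat list of floats to values in {-1, 0, 1}.

    Each weight is divided by the mean absolute value of the list,
    rounded to the nearest integer, then clipped to [-1, 1].
    """
    scale = sum(abs(w) for w in weights) / len(weights)
    quantized = [max(-1, min(1, round(w / (scale + eps)))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Approximate the original weights from ternary values and the scale."""
    return [q * scale for q in quantized]


weights = [0.9, -0.05, -1.2, 0.3]
q, scale = absmean_ternary_quantize(weights)
approx = dequantize(q, scale)
```

The gap between `weights` and `approx` shows why quantizing an already-trained model to three levels is lossy, which is one plausible reading of the challenges mentioned above.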

New Video Generation Dataset and AI Multimodal Models

- New Video Generation Dataset Introduced: A user shared a link to a paper introducing VidProM, a dataset comprising 1.67 million text-to-video prompts and 6.69 million videos generated by diffusion models, aiming to address the lack of publicly available prompt studies.

- Neural Approach for Bilinear Sampling: A discussion on challenges using bilinear sampling for neural networks and a proposal to train a small neural network to approximate bilinear sampling for optimizable sampling locations.

- Google's Imagen 3 Dominates: Excitement around Google's Imagen 3 model, which is claimed to be superior in image generation with better detail, better lighting, and fewer artifacts, with particular interest in using it for synthetic data generation.

- Community Dataset Idea: Enthusiasm for generating new community datasets using models like Imagen 3 for data collection.

- Stable Diffusion Super Upscale Method: A Reddit post discussing a new method for super upscaling images using Stable Diffusion, promising high-quality results without distortion.
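As background for the bilinear sampling item in the list above, here is a minimal pure-Python sketch of bilinear sampling on a 2D grid. The function name and the clamping behaviour at the borders are assumptions made for illustration, and the sketch does not implement the proposed neural approximation.

```python
import math


def bilinear_sample(image, x, y):
    """Sample a 2D grid (list of rows) at continuous coordinates (x, y)."""
    height, width = len(image), len(image[0])
    # Clamp coordinates so the four neighbours stay inside the grid.
    x = min(max(x, 0.0), width - 1.0)
    y = min(max(y, 0.0), height - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    # Interpolate along x on the top and bottom rows, then along y.
    top = image[y0][x0] * (1 - fx) + image[y0][x1] * fx
    bottom = image[y1][x0] * (1 - fx) + image[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


grid = [[0.0, 1.0], [2.0, 3.0]]
center = bilinear_sample(grid, 0.5, 0.5)
```

The result depends only on the four nearest grid values, so the gradient with respect to `(x, y)` carries purely local information. That may be part of why the discussion looked for a smoother learned approximation when sampling locations need to be optimized.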

Interconnects and LangChain AI Updates

This section covers Nathan Lambert's posts and interactions on Interconnects, including Huberman approval, a critique of OpenAI, and crafting posts. The LangChain discussions covered a misunderstanding in LangChain, a fix for JSONLoader, the transfer of embeddings, a Neo4j index issue, and adding memory to a chatbot, as well as rate-exceeded errors, server inactivity, and logs for deployed revisions. Members also shared work on incorporating crypto payments and the launch of a real estate AI assistant. Finally, the section notes a universal web scraper agent, a Hindi 8B chatbot model, and the Invisibility MacOS Copilot.

AI Collective Discussions

- LLaMA vs Falcon Debate: Discussion comparing Falcon 11B and LLaMA 3, noting differences in licensing.

- Training Mistral and TinyLlama: Issues with TinyLlama training crashing and manual workarounds.

- Hunyuan-DiT Model Announcement: Introduction of the Hunyuan-DiT model for Chinese understanding.

- Chat Format Consistency: Discussions on using Alpaca format for training and concerns with follow-up questions.

- ChatML and LLaMA Tokens: Addressing queries about special tokenizers and conversation types for LLaMA 3.

The AI Collective also touches on training approaches, model announcements, and chat format consistency.
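To make the chat format items concrete, the sketch below builds a prompt in the widely used Alpaca instruction template and in ChatML. The helper names are hypothetical, and the templates are the commonly published ones, which is an assumption about what the discussion referred to. They are not taken from any specific training config.

```python
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:\n"
)


def format_alpaca(instruction):
    """Render a single-turn Alpaca-style prompt (no slot for earlier turns)."""
    return ALPACA_TEMPLATE.format(instruction=instruction)


def format_chatml(messages):
    """Render a multi-turn ChatML prompt that ends with an open assistant turn."""
    parts = [
        f"<|im_start|>{m['role']}\n{m['content']}<|im_end|>\n" for m in messages
    ]
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


alpaca_prompt = format_alpaca("Summarize the thread.")
chatml_prompt = format_chatml([
    {"role": "user", "content": "Summarize the thread."},
    {"role": "assistant", "content": "It covers chat formats."},
    {"role": "user", "content": "Which one handles follow-ups?"},
])
```

The Alpaca template has no place for prior turns, which is one likely source of the follow-up question concerns, whereas ChatML represents each turn explicitly. LLaMA 3 uses its own special tokens, so neither template should be assumed to match its tokenizer.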

Future Hardware Predictions and Industry Updates

The author expresses optimism about NVMe drives and Tenstorrent technology over the next 3-4 years, and offers only lukewarm predictions for GPUs over a 5-10 year timeframe. The section also notes Nvidia's market valuation surpassing $2.2 trillion.

FAQ

Q: What are the key discussions highlighted in the AI Discord Recap section?

A: The AI Discord Recap section provides a summary of discussions across different Discord channels related to AI developments and advancements, including topics like GPT-4o, quantization and optimization techniques, new benchmarks, datasets, models unveiled, technical discussions, and tutorials.

Q: What models were discussed in the AI Discord communities?

A: Models like GPT-4o, Viking 7B, Hermes 2 Θ, and others were discussed in different AI Discord communities, showcasing advancements in AI technologies and ongoing discussions.

Q: What topics were debated in the OpenAI community channels?

A: Users in the OpenAI community channels debated topics such as GPU models for gaming and AI tasks, API alternatives like Invoke and Forge, economic inequality, GPT-4o's performance in vision analysis, hyperstition in AI, the future job market in an AI-dominated world, AI's emotional realism, and more.

Q: What discussions took place in the community dedicated to HuggingFace?

A: Discussions in the HuggingFace community centered around reinforcement learning using epsilon greedy policy, joint language modeling for speech and text, the PaliGemma Vision Language Model, Veo Video Generation Model, advancements in Nordic Language Models, and AI tools like SUPRA for uptraining transformers.

Q: What updates were shared in the section covering the Nous Research AI community?

A: Updates from the Nous Research AI community included the release of the Hermes 2 Θ model surpassing benchmarks, challenges with GPT-4 variants in reasoning tasks, concerns about GPT-4o's generic output structure, experimenting with self-merging Hermes for a new model, and discussions on various AI topics.

Q: What were the key points of discussion in the Mojo SDK conversation?

A: The Mojo SDK conversation covered recommended learning resources, the flexibility of Mojo compared to CUDA, concerns around open-source commitment, community project suggestions, the advantages of Mojo's GPU flexibility, and ideas for beginner-friendly and advanced learning projects.

Q: What were the key updates shared in the CUDA MODE Discord channels?

A: Updates included discussions on wiping dependencies for code fixes, tracking CUDA streams, NVMe GPU DMA usage proposals, challenges in bitnet quantization, bilinear sampling for neural networks, and excitement around Google's Imagen 3 model.

Q: What are some of the technical advancements and tool launches discussed in the AI landscape?

A: Discussions covered various technical advancements, tool launches, training approaches, model announcements, and chat format consistency in different AI Discord communities.

Q: What future predictions and evaluations were made in the AI Collective regarding hardware technologies?

A: The AI Collective expressed optimism about NVMe drives and Tenstorrent technology in the next 3-4 years, lukewarm predictions for GPUs in the 5-10 year timeframe, and highlighted Nvidia's market valuation surpassing $2.2 trillion in the current market scenario.
