[AINews] Did Nvidia's Nemotron 70B train on test? • ButtondownTwitterTwitter

Chapters

AI Twitter Recap

AI Reddit Recap

Exciting Developments and Challenges in AI Communities

Latent Space Discord

Discussion on AI Model Performance

Yandex YaLM 100B and Embedding Debates

Mechanistic Interpretability and Sparse Autoencoders Discussion

Skepticism on A100 Matrix Kernel Speeds

tinygrad (George Hotz): ML Library and Tinybox

Models and Collaborative Insights

Community Updates and Discussions

AI Community Conversations

AI Twitter Recap

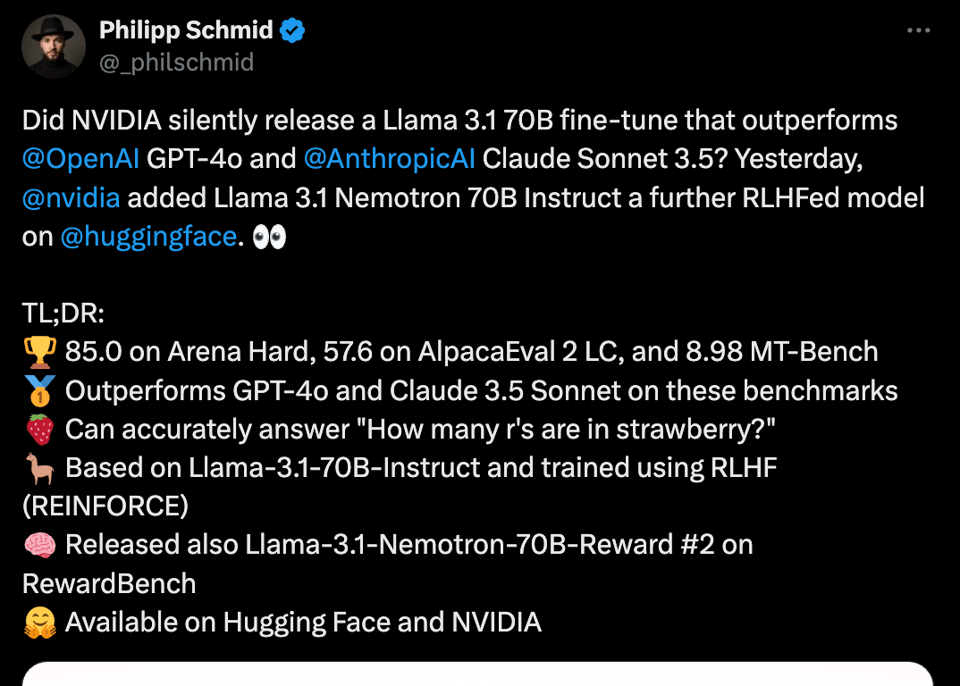

The AI Twitter recap section provides highlights from recent AI model releases, updates, research, innovations, tools, APIs, and industry news shared on Twitter. Some key points include Mistral releasing new models, Nvidia's Nemotron 70B outperforming competitors, updates on Hugging Face integrations, discussions on advanced cognitive architectures, in-context reinforcement learning, task superposition in LLMs, serverless workflows with Amazon Bedrock, dynamic few-shot prompting techniques, the TorchTitan repository for parallelism capabilities, and insights on the impact of energy on human civilization and deep learning.

AI Reddit Recap

The /r/LocalLlama community shared various themes and discussions related to AI developments and projects. One theme focused on democratizing medical LLMs for 50 languages, introducing ApolloMoE's circuits-based paradigm. Another theme highlighted serving 3.3 million context for Llama-3-8B on a single GPU using DuoAttention. The community also discussed chain-of-thought reasoning without prompting in LLMs, showcasing Google's CoT decoding method. Additionally, they explored local text-to-speech alternatives, and a project using LLM as a game master for procedural content generation.

Exciting Developments and Challenges in AI Communities

The latest updates from various AI Discord communities showcase exciting developments and challenges within the space. Perplexity AI introduces 'Perplexity Purchases' for streamlined shopping while Reasoning Mode impresses users with its analytical capabilities. Meanwhile, in the OpenRouter Discord, discussions highlight the maintenance of Grok 2 and the strong performance of NVIDIA's Nemotron 70B model. Unsloth AI launches INTELLECT-1 for decentralized training and Mistral introduces Pocket LLMs for edge use cases. Additionally, discussions in the Eleuther Discord focus on Yandex YaLM 100B's popularity and debates between SwiGLU and SinGLU. These communities are actively engaged in exploring new models, addressing performance challenges, and sharing insights on the future of AI technology.

Latent Space Discord

Users in the Latent Space Discord discussed various topics related to AI models and advancements. Gemini's struggles with their free tier were noted, as well as Mistral and Nvidia introducing new models. E2B received funding, and there was a call for benchmarking tools. Cohere Discord members found inspiration in the community, while Modular Mojo Discord members appreciated the Playground feature. Stability AI Discord members discussed various issues, and OpenInterpreter Discord proposed a GitHub Copilot extension. Mozilla AI Discord shared updates on their AI Stewardship Practice Program. Torchtune Discord members celebrated a new paper, and LLM Agents Discord highlighted the optimization capabilities of Large Language Models. The LangChain AI Discord announced plans to revamp their community, and HuggingFace announced several updates including Gradio 5.0 launch, Sentence Transformers v3.2.0, HuggingChat multimodal feature, FLUX LoRA Lab, and the LLM Evaluation Guidebook release.

Discussion on AI Model Performance

Members discussed the hypothetical performance of a model setup with 72GB of VRAM and 128GB of DDR4 RAM, questioning if it could achieve 5-6 t/s in processing speed. Additionally, there was a mention of a custom PyTorch linear layer and its integration with Autograd for automatic gradients.

Using Ollama with Hugging Face: An introduction to using Ollama, which allows interaction with GGUF models directly from the computer without creating new Modelfiles was shared, emphasizing its simple command syntax. The discussion highlighted accessibility, as Ollama claims to run any of the 45K public GGUF checkpoints on Hugging Face.

Issues with Gradio Documentation: A user raised concerns about the usability of the Gradio documentation, noting readability issues with text on a dark background and lack of replies from the maintainers. This highlighted a need for better engagement from the community and maintainers regarding documentation improvements.

Recommendations for TTS Models on Hugging Face: The community member asked for recommendations for Text-to-Speech (TTS) models from the Hugging Face library, prompting a user to point to trending models. Specifically, the SWivid/F5-TTS model was highlighted as an updated option available for TTS tasks.

AI's Role in the Job Market: A conversation about AI roles in the workplace emphasized that while AI tools, like large language models, are emerging, the job market will always evolve. Members noted the importance of adapting to new tools and technologies similar to the transition seen with spreadsheet software in various jobs.

Yandex YaLM 100B and Embedding Debates

Members discussed the Yandex YaLM 100B model, which is trained on 100 billion parameters from diverse sources including English and Russian. The model's performance in non-Western contexts was highlighted, noting its popularity in Russia. Additionally, debates arose regarding the preference between SwiGLU and SinGLU despite the latter's reported advantages in speed and lower loss. Critiques were made on OpenAI's embedding models, stating their underperformance based on current standards. Discussions also revolved around open source model licensing and the confusion it creates, especially concerning major companies like Meta. Finally, the exploration of embedding approaches for semantic search showcased the effectiveness of decoder-only models in generating embeddings.

Mechanistic Interpretability and Sparse Autoencoders Discussion

Several discussions took place regarding mechanistic interpretability and the use of sparse autoencoders (SAEs) in unlearning knowledge in language models. Suggestions were made to expand key terms to include 'explainability' for a more comprehensive search of relevant papers. The discussion around a paper on using SAEs to unlearn knowledge revealed mixed excitement ratings. Members expressed curiosity about the project's application and potential effectiveness in AI safety. The effectiveness of SAEs in extracting interpretable features from InceptionV1 was highlighted, revealing new curve detectors and simplifying polysemantic neurons. The application of SAEs also led to the discovery of additional curve detectors in the InceptionV1 framework. Overall, the progress in feature extraction showcased the efficacy of mechanistic interpretability methods in neural network analysis.

Skepticism on A100 Matrix Kernel Speeds

Concerns were raised about the matrix multiplication kernels on A100, particularly in terms of the speed and the inclusion of L1 cache effects for the naive kernel. This issue has led to discussions within the community regarding real-world performance and potential problems like warp stalls. One suggestion made was to include educational footnotes in the new edition to address real-world performance considerations when discussing these kernels, as presenting the naive kernel's speed without context may lead to misconceptions about practical application.

tinygrad (George Hotz): ML Library and Tinybox

This section discusses the potential of the tinygrad ML library and inquiries about preordering the tinybox model. There are concerns about OpenCL handling issues, implementation of MSE and MAE, and compatibility with Windows. Users share insights on disabling gradient calculations, ensuring JIT input size consistency, progress on TD-MPC implementation, and discussions on learning rate schedulers. Backpropagation functionality is confirmed, and the community explores ways to enhance cloud resources for development. Links to GitHub repositories and tools mentioned are also provided.

Models and Collaborative Insights

Participants shared thoughts on replication strategies from other successful repositories, indicating a willingness to improve efficiency and data management processes. Ideas included converting to MDS format for streaming data from Cloudflare, which would expedite training and reduce costs.

-

Dinov2 gets optimized in layers: Discussion emerged around distilling Dinov2 into the early layers, leveraging its training on meaningful downstream tasks related to images for efficiency. It was noted that this approach performs better than simply using cross attention with CLIP embedding.

-

Introduction of EchoPrime for echocardiography: EchoPrime is a new multi-view, view-informed, video-based vision-language foundation model trained on over 12 million video-report pairs, addressing limitations in traditional echocardiography AI models. This model utilizes contrastive learning to create a unified embedding model, enhancing the performance and application scope in cardiac imaging.

-

Enhancements on EchoCLIP model: A member announced the preprint release from a coworker who has significantly improved upon their earlier EchoCLIP model by scaling it up and refining the experimental design. This new model exhibits much better capabilities compared to the original one created about six months prior.

Links mentioned:

- EchoPrime: A Multi-Video View-Informed Vision-Language Model for Comprehensive Echocardiography Interpretation: Echocardiography is the most widely used cardiac imaging modality, capturing ultrasound video data to assess cardiac structure and function. Artificial intelligence (AI) in echocardiography has the po...

Community Updates and Discussions

- Cohere Embed API error handling: Users suggested retry logic based on error codes for handling failed batch embedding.

- Speeding up RAG functionality: Switching

citations_qualitytoFASTcan enhance speed. - Reducing citations: Options include manual truncation or ranking for top chunks.

- Trial Key limits: Users noted trial keys allow 1,000 API calls per month and are tied to accounts.

- Issues with trial key usage: Some faced access problems with trial keys in Cohere dependency, advised to wait for resets.

- Community meetings and showcases: Scheduled events for project demos and sharing learning opportunities.

- Text-to-speech for chatbots: New feature adds dynamic audio responses and receives user excitement.

- Modular Mojo updates: Updates and praises for Playground feature, upcoming community showcase, and bug fixes.

- Mojo projects discussion: Excitement around System Prompt Templates and launch of In-Depth Question Answering Evaluation App.

- Cohere Toolkit developments: Text-to-speech availability for chatbots and user enthusiasm.

- OpenInterpreter extension ideas: Suggested Copilot extension and discussions on bandwidth, kernel panic, and GitHub extension listing.

- LightRAG advancements: Improved contextual awareness with LightRAG compared to GraphRAG.

- DSPy community activities: Updates on workflow system testing, dspygen framework, Livecoding DSPy Signatures, and Loom Recordings.

- LangChain community changes: Planned closure of Discord community to build a new, improved space.

AI Community Conversations

The LangChain AI community is seeking moderators, discussing API routing with agents and Docker Compose setup. In LangServe, there are inquiries about binding tools and issues with optional fields in Playground, leading to a GitHub issue. OpenAccess AI Collective discusses mentorship for AIFoundry and access requirements for the Mistral AI model. LLM Agents talk about optimization tasks and introducing the Language-Model-Based Evolutionary Optimizer (LEO). Torchtune members express excitement over a new paper. Mozilla AI announces a pilot program for AI stewardship practice.

FAQ

Q: What are some key highlights from recent AI model releases, updates, research, and innovations shared on Twitter?

A: Some key highlights include Mistral releasing new models, Nvidia's Nemotron 70B outperforming competitors, updates on Hugging Face integrations, discussions on advanced cognitive architectures, in-context reinforcement learning, task superposition in LLMs, serverless workflows with Amazon Bedrock, dynamic few-shot prompting techniques, the TorchTitan repository for parallelism capabilities, and insights on the impact of energy on human civilization and deep learning.

Q: What were the main themes discussed by the /r/LocalLlama community related to AI developments and projects?

A: The themes included democratizing medical LLMs for 50 languages with ApolloMoE's circuits-based paradigm, serving 3.3 million context for Llama-3-8B on a single GPU using DuoAttention, chain-of-thought reasoning without prompting in LLMs with Google's CoT decoding method, local text-to-speech alternatives, and using LLM as a game master for procedural content generation.

Q: What were the latest updates and discussions from various AI Discord communities?

A: Updates included Perplexity AI's 'Perplexity Purchases', Reasoning Mode's analytical capabilities, maintenance of Grok 2 and the performance of NVIDIA's Nemotron 70B in OpenRouter Discord, INTELLECT-1 launch by Unsloth AI, Mistral's Pocket LLMs for edge use cases, discussions on Yandex YaLM 100B in Eleuther Discord, and updates from Mozilla AI Discord on AI Stewardship Practice Program.

Q: What were some discussions around AI models, advancements, and community interactions seen in various Discord communities?

A: Discussions ranged from Gemini's struggles with their free tier, Mistral and Nvidia introducing new models, E2B funding, benchmarking tools call-outs, discussions on SwiGLU and SinGLU, critiques on embedding models from OpenAI, open-source model licensing debates, to exploration of embedding approaches for semantic search and mechanistic interpretability using sparse autoencoders.

Q: What were the concerns and recommendations raised around AI-related topics like TTS models, role in the job market, and documentation usability?

A: Concerns and recommendations included issues with Gradio documentation, recommendations for TTS models on Hugging Face library like SWivid/F5-TTS, discussions on AI's role in the job market evolution, and the need for better engagement regarding documentation improvements.

Q: What insights were shared on specific AI-related projects and models like Dinov2, EchoPrime, and EchoCLIP?

A: Insights included optimizing Dinov2 in layers, introducing EchoPrime for echocardiography, enhancing the EchoCLIP model, and advancements in feature extraction and interpretability using Sparse Autoencoders in neural networks.

Q: What were some notable discussions related to performance considerations, model handling, and tool developments in the AI space?

A: Discussions touched upon matrix multiplication kernels on A100, the potential of the tinygrad ML library, implications of using custom PyTorch linear layers, concerns about OpenCL handling, incorporating MSE and MAE implementations, and exploring ways to optimize cloud resources for development among others.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!