[AINews] Execuhires: Tempting The Wrath of Khan • Buttondown

Chapters

AI News Recap and Developments

AI Reddit Recap

Discord Community Highlights

Discord Updates

Discussion in Discord Channels

HuggingFace 🤖 Cool Finds

Perplexity AI, Medallion Fund, Hybrid Antibodies, Ducks Classification

CUDA Mode Discussions and Updates

Discussing AI Tools and Features

Gemma 2B and Qwen 1.5B Comparison

Quarto Website Setup and File Structure Confirmation

OpenAccess AI Collective Discussion Recap

LangChain AI & Share Your Work

AI News Recap and Developments

AI Twitter Recap

AI Model Developments and Benchmarks

- Gemini 1.5 Pro Performance: Google DeepMind's Gemini 1.5 Pro claimed the #1 spot on Chatbot Arena, excelling in multilingual tasks and technical areas like math and coding.

- Model Comparisons: OpenAI, Google, Anthropic, & Meta are at the frontier of AI development.

- FLUX.1 Release: Black Forest Labs launched the state-of-the-art text-to-image model, FLUX.1.

- LangGraph Studio: LangChainAI introduced LangGraph Studio for developing LLM applications.

- Llama 3.1 405B: Llama 3.1 405B is now available for free testing – the largest open-source model competitive with closed models.

AI Research and Developments

- BitNet b1.58: Discussion of BitNet b1.58, a 1.58-bit (ternary-weight) LLM architecture that could help run large models on memory-limited devices.

- Distributed Shampoo: Outperformed Nesterov Adam in deep learning optimization.

- Schedule-Free AdamW: Achieved a new SOTA for self-tuning training algorithms.

- Adam-atan2: A shared code change to Adam that uses atan2 in place of division, addressing divide-by-zero and numerical precision issues.
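Here is a minimal scalar sketch of the Adam-atan2 item. It assumes the change replaces Adam's `m_hat / (sqrt(v_hat) + eps)` term with `atan2(m_hat, sqrt(v_hat))`. The function name `adam_atan2_step` and its defaults are illustrative and not taken from the shared code. Published versions may also add scaling constants around `atan2`.

```python
import math

def adam_atan2_step(w, g, m, v, t, lr=1e-3, beta1=0.9, beta2=0.999):
    """One scalar Adam-style step using atan2 instead of m_hat / (sqrt(v_hat) + eps).

    Hypothetical helper for illustration; returns (new_w, new_m, new_v).
    """
    # Standard Adam moment estimates with bias correction (t starts at 1).
    m = beta1 * m + (1 - beta1) * g
    v = beta2 * v + (1 - beta2) * g * g
    m_hat = m / (1 - beta1 ** t)
    v_hat = v / (1 - beta2 ** t)
    # atan2(y, x) is close to y / x when |y| << x. Because sqrt(v_hat) >= 0,
    # the result lies in [-pi/2, pi/2], and atan2(0.0, 0.0) == 0.0, so no
    # epsilon is needed to guard against division by zero.
    update = math.atan2(m_hat, math.sqrt(v_hat))
    return w - lr * update, m, v
```

With a zero gradient on the first step, classic Adam without epsilon would compute 0/0. This version returns the weight unchanged.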

Industry Updates and Partnerships

- Perplexity and Uber Partnership: Uber One subscribers are offered one year of Perplexity Pro for free.

- GitHub Model Hosting: Introduced model hosting on GitHub.

AI Reddit Recap

Theme 1. Efficient LLM Innovations: BitNet and Gemma

- A developer successfully fine-tuned BitNet, creating a compact 74MB model that achieves 198 tokens per second on a single CPU core.

- Gemma2-2B can now run locally on multiple platforms with the MLC-LLM framework.

- The Gemma-2-9B-IT model outperforms Meta-Llama-3.1-8B-Instruct on BBH, GPQA, and MMLU-PRO.
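Both BitNet items (the b1.58 discussion and the 74MB fine-tune) rest on ternary weights. Each weight is -1, 0, or +1, which carries log2(3), about 1.58, bits of information. The sketch below follows the absmean quantization recipe described in the BitNet b1.58 paper, applied to a flat Python list. The function names are hypothetical. Real implementations work on packed tensors inside custom kernels.

```python
def absmean_ternary(weights, eps=1e-6):
    """Quantize a flat list of weights to {-1, 0, +1} with one shared scale.

    Absmean recipe: gamma = mean(|W|), W_q = clip(round(W / (gamma + eps)), -1, 1).
    Returns (ternary_values, gamma).
    """
    gamma = sum(abs(w) for w in weights) / len(weights)
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma

def dequantize(q, gamma):
    # Approximate reconstruction: each ternary value times the shared scale.
    return [x * gamma for x in q]

q, gamma = absmean_ternary([0.4, -1.2, 0.05, 0.9, -0.3])
print(q, gamma)
```

Python's `round` rounds halves to even. This only matters for values that land exactly on ±0.5 after scaling.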

Theme 2. Advancements in Open-Source AI Models

- Black Forest Labs' Flux, a 12-billion-parameter text-to-image model comparable to Midjourney, is available through fal.ai.

- Writer introduces 70B-parameter models for the medical and financial domains, reportedly outperforming Google's medical model.

- Discussion of LLM benchmarking and how model capabilities might compare with those of human doctors.

Theme 3. AI Development Tools and Platforms

- Microsoft launches GitHub Models to compete with Hugging Face.

- sqlite-vec v0.1.0 was released, adding vector search to SQLite across various platforms.
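The core operation that sqlite-vec accelerates is nearest-neighbor search over stored embeddings. The sketch below does that search by brute force, using only Python's standard `sqlite3` module. Vectors are stored as float32 BLOBs, and a Python function registered under the name `l2` computes distances. The `l2` name and the table layout are assumptions for illustration. This is not sqlite-vec's API, which provides its own SQL functions and virtual tables implemented in C.

```python
import sqlite3
import struct

def to_blob(vec):
    # Pack a list of floats as little-endian float32, a compact BLOB encoding.
    return struct.pack(f"<{len(vec)}f", *vec)

def l2_distance(a_blob, b_blob):
    # Unpack two float32 BLOBs and return their Euclidean distance.
    n = len(a_blob) // 4
    a = struct.unpack(f"<{n}f", a_blob)
    b = struct.unpack(f"<{n}f", b_blob)
    return sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5

def knn(conn, query, k=2):
    # Brute-force k-nearest-neighbor query: score every row, sort, keep k.
    cur = conn.execute(
        "SELECT id, l2(embedding, ?) AS dist FROM items ORDER BY dist LIMIT ?",
        (to_blob(query), k),
    )
    return cur.fetchall()

conn = sqlite3.connect(":memory:")
conn.create_function("l2", 2, l2_distance)  # hypothetical helper name, not sqlite-vec's
conn.execute("CREATE TABLE items (id INTEGER PRIMARY KEY, embedding BLOB)")
conn.executemany(
    "INSERT INTO items (id, embedding) VALUES (?, ?)",
    [(1, to_blob([0.0, 0.0])), (2, to_blob([1.0, 1.0])), (3, to_blob([5.0, 5.0]))],
)
print(knn(conn, [0.9, 1.2]))  # nearest ids first: 2, then 1
```

A brute-force scan costs time linear in the number of rows. A dedicated extension exists to make that scan fast and convenient inside SQL.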

Theme 4. Local LLM Deployment and Optimization Techniques

- Open-source RAG implementations and a guide to building llama.cpp with NVIDIA GPU acceleration on Windows 11.
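Most open-source RAG implementations share the same retrieval step: score documents against the query, keep the top k, and place them in the prompt. This sketch uses bag-of-words cosine similarity in place of a learned embedding model. The `retrieve` and `build_prompt` helpers are hypothetical. The resulting prompt would then go to a local model, for example one served by llama.cpp.

```python
import math
import re
from collections import Counter

def bow(text):
    # Lowercased bag-of-words term counts; a stand-in for a real embedding model.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    # Cosine similarity between two sparse term-count vectors.
    dot = sum(a[t] * b[t] for t in a if t in b)
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, docs, k=1):
    # Rank documents by similarity to the query and keep the top k.
    q = bow(query)
    return sorted(docs, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]

def build_prompt(query, docs, k=1):
    # Put the retrieved passages in front of the question.
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

docs = [
    "llama.cpp can offload layers to an NVIDIA GPU when built with CUDA support.",
    "SQLite is an embedded relational database.",
    "Gemma 2 2B is a small open-weights language model.",
]
print(build_prompt("How do I use an NVIDIA GPU with llama.cpp?", docs))
```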

Discord Community Highlights

This section summarizes discussions across AI and machine learning Discord communities, ranging from model performance and improvements to industry trends and challenges. Users shared experiences with models like Flux and Gemma, discussed GPU hosting services, debated licensing and model ownership, and explored advances in AI art and healthcare applications. Each Discord channel contributes its own perspectives to a vibrant community of AI enthusiasts.

Discord Updates

FLUX Schnell Weaknesses:

- Members found the FLUX Schnell model undertrained and weak at prompt adherence, producing nonsensical outputs. They cautioned against using it to generate synthetic datasets because doing so risks representational collapse.

Curated Datasets Importance:

- Emphasis was placed on the value of curated datasets over random noise to avoid wasting resources.

Bugs in LLM and Focus on Baseline Models:

- Bugs affecting more than 50 experiments were resolved, underscoring the importance of debugging, and discussion shifted toward building strong baseline models.

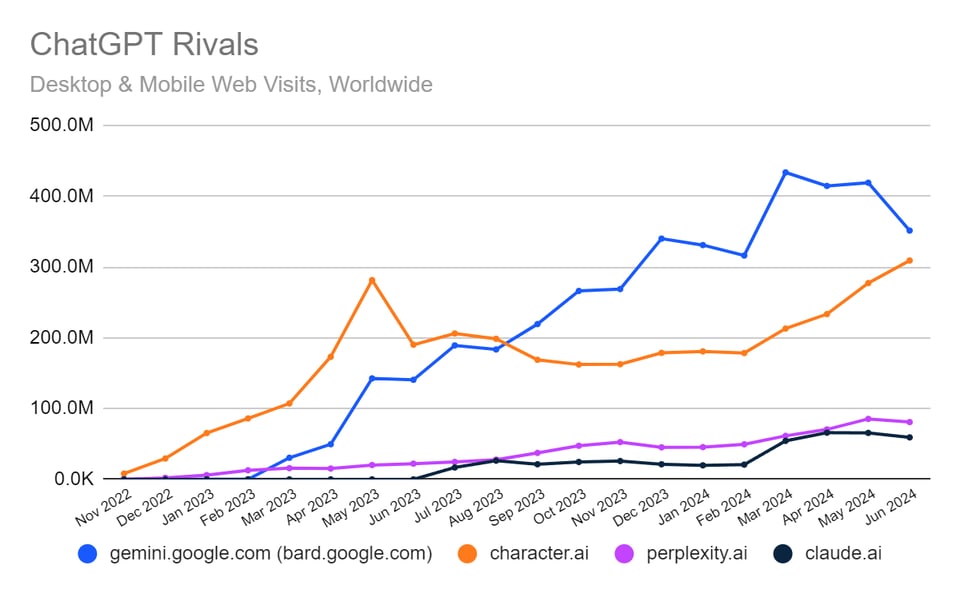

Interconnects Events and Character AI Deal:

- Members showed interest in sponsoring events, while a Character AI deal drew skepticism. Ai2 launched a new brand featuring sparkle emojis.

Magpie Ultra Dataset and RL Conference Dinner:

- HuggingFace released the Magpie Ultra dataset, and members considered organizing a dinner at the RL Conference as a networking opportunity.

OpenRouter Website and Anthropic Services Concerns:

- Users faced issues with accessing the OpenRouter website and noted load problems with Anthropic services.

Chatroom Revamp and API Key Acquisition:

- The chatroom received UI enhancements, and obtaining an API key was simplified for users.

Free Model Usage Limits and LLM Post-Facto Validation:

- Limits on free model usage were discussed, and post-facto validation challenges for LLM-generated outputs were highlighted.
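Post-facto validation means checking an LLM's output after it has been generated, rather than constraining the generation itself. The minimal sketch below assumes the model was asked to return a JSON object. The `SCHEMA` dict and the `validate_output` helper are hypothetical. A real pipeline might use a schema library and retry or repair the output on failure.

```python
import json

# Hypothetical schema for illustration: required field name -> accepted Python type(s).
SCHEMA = {"title": str, "score": (int, float)}

def validate_output(raw, schema=SCHEMA):
    """Return (parsed, errors) for an LLM response that is supposed to be a JSON object."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        return None, [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return None, ["top-level value is not an object"]
    errors = []
    for key, expected in schema.items():
        if key not in data:
            errors.append(f"missing field: {key}")
        elif not isinstance(data[key], expected):
            errors.append(f"wrong type for {key}")
    # Only hand back the parsed data when every check passed.
    return (data if not errors else None), errors

print(validate_output('{"title": 3}'))
```

When the error list is non-empty, a caller would typically re-prompt the model with those messages. Note that `bool` values pass an `int` check, because `bool` subclasses `int` in Python.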

Mojo's Error Handling and Installation Woes:

- Mojo's error-handling dilemmas were discussed, along with installation challenges with MAX.

Chat Sessions in RAG Apps and Feedback on New Models Page:

- Discussions on incorporating chat sessions in RAG apps and feedback on a potential new models page were shared.

Computer Vision Enthusiasm and Funding Influence:

- Members expressed interest in computer vision, and discussions reflected skepticism about genAI ROI and about how funding shapes which technologies get attention.

Image Generation Time and Batch Processing Inquiry:

- Inquiries were made about image generation time and batch processing capabilities.

Discussion in Discord Channels

Challenges and Conversations in Discord Channels:

- Mozilla AI Discord, DiscoResearch Discord, and AI21 Labs Discord: These guilds have been inactive; readers are asked to say so if they should be removed.

- Stability.ai (Stable Diffusion) General Chat: Discussions of Flux model performance, GPU hosting services, licensing and model ownership, practical use of AI art models, and photorealistic rendering.

- Unsloth AI (Daniel Han) General: Discussions of training with LoRA, using TPUs for faster training, the impact of padding tokens, fine-tuning datasets for chat AI, and best practices for loading models.

- Unsloth AI (Daniel Han) Off-Topic: Conversations about Google surpassing OpenAI, a debate on the nature of 'real', and skepticism about chat ratings.

- Unsloth AI (Daniel Han) Help: Discussions of GGUF quantization issues, challenges in fine-tuning Llama 3.1, the impact of small datasets on training, LoRA parameters, and model incompatibility.

- Unsloth AI (Daniel Han) Showcase: Updates on fine-tuning the Bellman model, testing its Q8 version, and issues during the model upload process.

- Unsloth AI (Daniel Han) Community Collaboration: A single message about timelines for assistance.

- HuggingFace Announcements: Covered neural network simulation, image clustering techniques, new synthetic datasets, knowledge distillation trends, and finance and medical model releases.

- HuggingFace General: Topics included learning resources for application development, model performance discussions, drafting project ideas, training autoencoders, and dataset licensing inquiries.
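Several of the Unsloth threads above revolve around LoRA. The idea is to keep the pretrained weight W frozen and learn a low-rank update instead. The layer then computes y = W x + (alpha / r) · B A x, with B initialized to zero. The plain-Python sketch below illustrates only that forward formulation and omits training. The `LoRALinear` class and its defaults are hypothetical and unrelated to the actual Unsloth or PEFT APIs.

```python
import random

def matvec(M, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

class LoRALinear:
    """Frozen weight W (d_out x d_in) plus a low-rank update B @ A (hypothetical sketch).

    Forward pass: y = W x + (alpha / r) * B (A x). B starts at zero, so the
    adapted layer initially matches the frozen one exactly.
    """

    def __init__(self, W, r=2, alpha=4, seed=0):
        rng = random.Random(seed)
        d_out, d_in = len(W), len(W[0])
        self.W = W  # frozen pretrained weight, never updated
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]  # r x d_in
        self.B = [[0.0] * r for _ in range(d_out)]  # d_out x r, zero-initialized
        self.scale = alpha / r

    def forward(self, x):
        base = matvec(self.W, x)
        delta = matvec(self.B, matvec(self.A, x))
        return [b + self.scale * d for b, d in zip(base, delta)]

    def trainable_params(self):
        # Only A and B would be trained; W stays frozen.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])
```

The savings come from the parameter count. For a 4096 × 4096 layer with r = 16, the adapter trains 16 × (4096 + 4096) = 131,072 parameters instead of 16,777,216, about 0.78% as many.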

HuggingFace 🤖 Cool Finds

Exploring Knowledge Distillation:

Knowledge distillation is a machine learning technique for transferring knowledge from a large ‘teacher model’ to a smaller ‘student model,’ facilitating model compression.

- It's particularly valuable in deep learning, allowing compact models to mimic complex ones effectively.
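The classic soft-target formulation comes from Hinton et al. (2015). The student is trained to match the teacher's temperature-softened output distribution as well as the true labels. Below is a minimal sketch under that assumption. The names and the defaults (`T=2.0`, `alpha=0.5`) are illustrative.

```python
import math

def softmax(logits, T=1.0):
    # Temperature-scaled softmax; subtracting the max keeps exp() numerically stable.
    scaled = [z / T for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, label, T=2.0, alpha=0.5):
    """alpha * T^2 * KL(teacher_T || student_T) + (1 - alpha) * CE(label, student)."""
    p_t = softmax(teacher_logits, T)
    p_s = softmax(student_logits, T)
    # KL divergence from the softened teacher distribution to the student's.
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    # Ordinary cross-entropy against the hard label at temperature 1.
    hard_ce = -math.log(softmax(student_logits)[label])
    return alpha * (T ** 2) * kl + (1 - alpha) * hard_ce
```

The T² factor keeps the soft-target gradients on a comparable scale as the temperature changes. When the student's logits equal the teacher's, the KL term vanishes and only the hard-label term remains.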

Emergence of Local LLM Applications:

An article highlighted the significant role of large language models (LLMs) in transforming enterprises over the next decade, focusing on Generative AI.

- It discusses R&D advances that led to models like Gemini and Llama 2, and Retrieval-Augmented Generation (RAG), which grounds model outputs in retrieved documents for better data interaction.

Building NLP Applications with Hugging Face:

An article on building NLP applications emphasizes the collaborative aspects of the Hugging Face platform, highlighting its open-source libraries.

- The resource aims to equip both beginners and experienced developers with tools to enhance their NLP projects effectively.

AI Bots and the New Tools:

A piece examined the evolution of AI bots, focusing on LLMs and RAG as pivotal technologies in 2024.

- It serves as a comprehensive overview suitable for newcomers, offering insights into patterns and architectural designs in AI bot development.

Overview of AI Bot Development:

The article synthesizes knowledge on various tools for developing intelligent AI applications, focusing on patterns, pipelines, and architectures.

- It is pitched at a generalist technical level while also covering deeper theoretical aspects, making it accessible to tech-savvy newcomers.

Perplexity AI, Medallion Fund, Hybrid Antibodies, Ducks Classification

Topics shared in the Perplexity AI community included:

- A 'Massive Mathematical Breakthrough' that may reshape the understanding of complex equations.
- How digital organization affects workplace efficiency, with a survey revealing employee dissatisfaction.
- The impressive returns of the Medallion Fund, managed by Renaissance Technologies.
- 'Hybrid antibodies' that show promise against HIV strains.
- A detailed classification of ducks and their habitats that aids the study of their behavior.

CUDA Mode Discussions and Updates

This section covers discussions in the CUDA MODE Discord across the off-topic, llmdotc, IRL, and general channels. Users shared progress on implementing Llama 3, identified KV cache issues, discussed acquihires in the AI industry, examined randomness in tensor operations, and compared the performance of AMD's RDNA and CDNA architectures. Links shared in these discussions covered llm.c, PyTorch, and other AI-related topics.
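The KV cache works like this during autoregressive decoding. Each token's key and value vectors are stored, so a new token only computes its own query, key, and value and then attends over the cache instead of recomputing the whole prefix. The minimal single-head sketch below is in plain Python, and its names are hypothetical. Implementations in llm.c or PyTorch keep per-layer, per-head tensors on the GPU.

```python
import math

def attend(q, keys, values):
    """Scaled dot-product attention for one query over the given keys and values."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    m = max(scores)  # subtract the max score for a numerically stable softmax
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    return [sum(w * v[j] for w, v in zip(weights, values)) / total
            for j in range(len(values[0]))]

class KVCache:
    """Stores keys and values of past tokens so each decode step adds one entry."""

    def __init__(self):
        self.keys, self.values = [], []

    def step(self, q, k, v):
        # Append this token's key/value, then attend over all cached positions.
        # This is causal by construction: future tokens have not been added yet.
        self.keys.append(k)
        self.values.append(v)
        return attend(q, self.keys, self.values)
```

The cached path performs the same arithmetic as recomputing attention over the full prefix, so the two should produce identical outputs. Comparing them is a simple way to test a cache.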

Discussing AI Tools and Features

This section covers discussions of AI tools and features. Listeners were excited about innovative uses of a Singapore accent in role-playing games. Feature clamping came up, raising questions about its impact on model performance in coding. Members highlighted the shift in the AI landscape from models like Claude 3.5 to Llama 3.1, and the expansion of RAG/Ops amid competition in the LLM OS space. Confusion over differing versions of the podcast was resolved: the differences came down to minor editing.

Elsewhere, users debated how well Cursor and Cody manage context, praised Aider.nvim's unique functions, reported upcoming Claude features, and discussed Composer's predictive editing capabilities. They also appreciated how AI tools together form a digital toolbox for developers, and shared links with further context and resources.
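This sketch assumes that 'feature clamping' here refers to steering a model through sparse-autoencoder features, as in Anthropic's Golden Gate Claude demo. The mechanism has three steps: encode an activation into features, pin one feature to a fixed value, and decode back into the model's activation space. Biases are omitted. The matrices and names below are hypothetical.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def matvec(M, x):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def clamp_feature(x, W_enc, W_dec, index, value):
    """Encode x into sparse-autoencoder features, pin one feature, decode back.

    Biases omitted for brevity. W_enc is (n_features x d), W_dec is (d x n_features).
    """
    f = relu(matvec(W_enc, x))
    f[index] = value  # the "clamp": force this feature to a fixed activation
    return matvec(W_dec, f)

W = [[1.0, 0.0], [0.0, 1.0]]  # toy identity encoder/decoder
print(clamp_feature([0.3, 0.7], W, W, index=1, value=5.0))
```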

Gemma 2B and Qwen 1.5B Comparison

Members compared Gemma 2B and Qwen 1.5B across several benchmarks. Qwen 1.5B reportedly outperformed Gemma 2B, including on MMLU and GSM8K, leading some to view Gemma 2B as overhyped. The discussion highlighted the value of judging models on concrete benchmark results.

Quarto Website Setup and File Structure Confirmation

- New PR for Quarto Website Launch: A new PR sets up the Quarto website, improving the online presence of the reasoning tasks. The PR includes all necessary images for clarity and a detailed description of the changes.

- Clarification on Folder Structure: A member confirmed that 'chapters' is a top-level directory and that all other files also sit at the top level, keeping the project organization clear. This structure is meant to make the repository easy to navigate and manage.

OpenAccess AI Collective Discussion Recap

This section highlights discussions in the OpenAccess AI Collective (axolotl) Discord. Members explored fine-tuning the Gemma 2 2B model and sought recommendations for efficient Japanese language models. Progress on installing BitsAndBytes on ROCm was also shared, showing improved compatibility with the latest AMD GPUs.

LangChain AI & Share Your Work

Users discussed LangChain 0.2 features, implementing chat sessions in RAG apps, Postgres schema issues, fine-tuning for summarization, and how GPT-4o Mini performs compared with GPT-4. A blog post on testing LLMs in Python using Testcontainers and Ollama was shared. Community Research Call #2 highlighted multimodality and autonomous agents. Collaboration opportunities were discussed, and a potential new models page was previewed.

In the Torchtune channel, users clarified how the QAT (quantization-aware training) quantizers work, reviewed the SimPO PR, proposed a documentation overhaul, and shared feedback on the potential new models page.

MLOps @Chipro members discussed computer vision, machine learning conferences, genAI ROI, funding trends, and the need for more diverse conversations. In Alignment Lab AI, members asked about image generation time on an A100 with FLUX Schnell and about batch processing capabilities.
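QAT (quantization-aware training) quantizers typically insert "fake quantization" into the forward pass. Values are rounded to the integer grid and immediately mapped back to floats, so the model learns under quantization error while still training in floating point. The minimal scalar sketch below assumes int8 with a given scale and zero point. It is not torchtune's API. Real QAT also uses a straight-through estimator, so gradients pass through the rounding step.

```python
def fake_quantize(x, scale, zero_point=0, qmin=-128, qmax=127):
    """Quantize-then-dequantize a float, as in the forward pass of QAT.

    The output is still a float, but it only takes values representable on the
    int8 grid, so training sees the rounding and clipping error.
    """
    q = round(x / scale) + zero_point  # map to the integer grid
    q = max(qmin, min(qmax, q))        # clip to the representable range
    return (q - zero_point) * scale    # map back to float
```

Values far outside the range saturate. For example, with `scale=0.1`, an input of 100.0 comes back as about 12.7.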

FAQ

Q: What is the purpose of knowledge distillation in machine learning?

A: Knowledge distillation transfers knowledge from a large 'teacher model' to a smaller 'student model' to compress models. In deep learning, it lets compact models effectively mimic complex ones.

Q: What role do large language models (LLMs) play in transforming enterprises?

A: According to one article, LLMs, and Generative AI more broadly, are expected to play a significant role in transforming enterprises over the next decade. R&D advances have produced models like Gemini and Llama 2, and techniques like Retrieval-Augmented Generation (RAG) improve how models interact with data.

Q: What are some key aspects of building NLP applications with Hugging Face?

A: Building NLP applications with Hugging Face emphasizes the platform's collaborative aspects and its open-source libraries, which give developers tools to improve their NLP projects.

Q: Which technologies have been highlighted as pivotal in AI bot development in 2024?

A: Large language models (LLMs) and Retrieval-Augmented Generation (RAG) have been highlighted as pivotal technologies shaping the evolution of AI bots in 2024.
