[AINews] Execuhires: Tempting The Wrath of Khan • Buttondown

Chapters

AI Twitter Recap

AI Reddit Recap

Latent Space Discord

LAION, FLUX Schnell Weaknesses, and More

Discord Guild Status and Discussions

Exploring Knowledge Distillation, Local LLM Applications, AI Bots, and More

LM Studio and Perplexity AI Updates

CUDA and AI Discussions

Exploring AI Technologies and Toolkits

Nous Research AI ▷ #reasoning-tasks-master-list

Quarto Website Setup and File Structure Confirmation

Service Issues, Group Chat, Models Availability, and Model Limitations

OpenAccess AI Collective (axolotl) messages

AI Twitter Recap

AI Model Developments and Benchmarks

- Gemini 1.5 Pro Performance: @lmsysorg announced that Google DeepMind's Gemini 1.5 Pro has claimed the #1 spot on Chatbot Arena. The model excels in multilingual tasks and in technical categories such as Math, Hard Prompts, and Coding.

- Model Comparisons: @alexandr_wang noted that OpenAI, Google, Anthropic, and Meta are at the frontier of AI development, and that Google's long-term compute advantage from its TPUs could prove significant.

- FLUX.1 Release: @robrombach announced the launch of Black Forest Labs and FLUX.1, a new state-of-the-art text-to-image model family; its fastest variant, FLUX.1 [schnell], is available under an Apache 2.0 license.

- LangGraph Studio: @LangChainAI introduced LangGraph Studio, an agent IDE for developing LLM applications.

- Llama 3.1 405B: @svpino shared that Llama 3.1 405B, described as the largest open-source model to date, is now available for free testing.

AI Research and Developments

- BitNet b1.58: @rohanpaul_ai discussed BitNet b1.58, an LLM approach that uses ternary weights (about 1.58 bits each) and could allow large models to run on memory-limited devices.

- Distributed Shampoo: @arohan announced that Distributed Shampoo outperformed Nesterov Adam in deep learning optimization.

- Schedule-Free AdamW: @aaron_defazio reported that Schedule-Free AdamW set a new state of the art (SOTA) for self-tuning training algorithms.

- Adam-atan2: @ID_AA_Carmack shared Adam-atan2, a one-line change to Adam that replaces the epsilon-guarded division in the update with atan2().
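
In the Adam-atan2 variant, the usual Adam update m_hat / (sqrt(v_hat) + eps) is replaced by atan2(m_hat, sqrt(v_hat)), which removes the eps hyperparameter and stays bounded even when the second-moment estimate is tiny. The scalar sketch below assumes unit scaling of both atan2 arguments and standard Adam defaults for the other hyperparameters; the published variant may add scaling constants, so treat this as an illustration of the one-line change rather than a reference implementation.

```python
import math
from typing import Tuple

def adam_atan2_step(param: float, grad: float, m: float, v: float, t: int,
                    lr: float = 1e-3, beta1: float = 0.9,
                    beta2: float = 0.999) -> Tuple[float, float, float]:
    """One scalar Adam step with atan2 replacing m_hat / (sqrt(v_hat) + eps).

    Sketch only: assumes unit scaling of both atan2 arguments.
    Returns the updated (param, m, v); t is the 1-based step count.
    """
    # Exponential moving averages of the gradient and the squared gradient.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction, exactly as in standard Adam.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    # Standard Adam would use: m_hat / (math.sqrt(v_hat) + eps).
    # atan2 needs no eps; because sqrt(v_hat) >= 0 the result lies in [-pi/2, pi/2],
    # and atan2(0.0, 0.0) is 0.0, so a zero gradient leaves the parameter unchanged.
    update = math.atan2(m_hat, math.sqrt(v_hat))
    return param - lr * update, m, v
```

On the first step, m_hat equals the gradient g and sqrt(v_hat) equals |g|, so the update is atan2(g, |g|) = ±π/4 regardless of the gradient's scale, much like the sign-like first step of standard Adam.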

Industry Updates and Partnerships

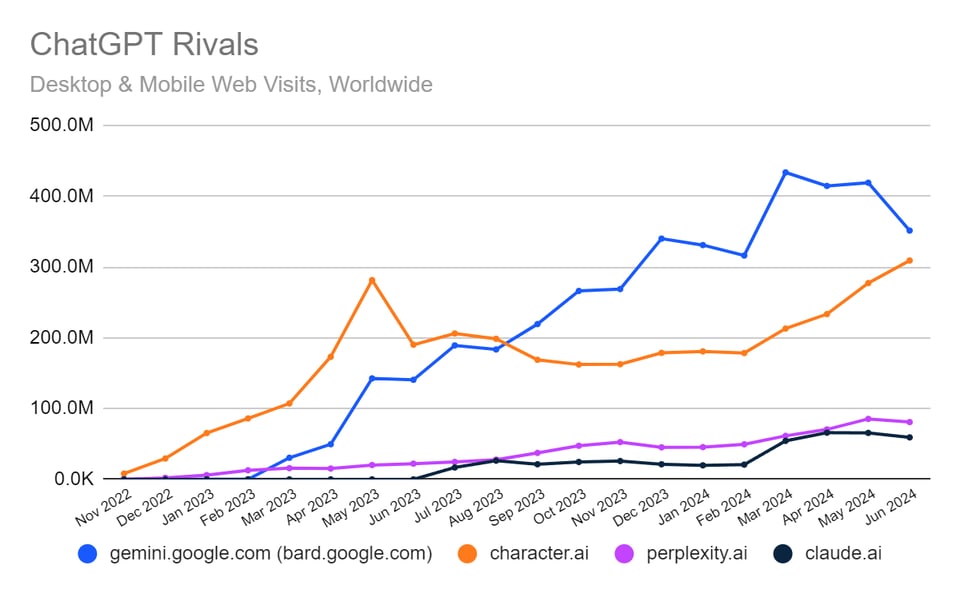

- Perplexity and Uber Partnership: @AravSrinivas announced a partnership offering Uber One subscribers 1 year of Perplexity Pro for free.

- GitHub Model Hosting: @rohanpaul_ai shared updates on model hosting on GitHub.

AI Reddit Recap

Theme 1: Efficient LLM Innovations

- A developer successfully fine-tuned BitNet, creating a remarkably compact 74MB model that runs at 198 tokens per second on a single CPU core.

- Gemma 2 2B can run locally on multiple platforms, and detailed deployment documentation is available.

- The Gemma-2-9B-IT model outperforms Meta-Llama-3.1-8B-Instruct on the BBH, GPQA, and MMLU-Pro benchmarks.
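
BitNet b1.58 constrains each weight to one of three values, {-1, 0, +1}, which is where the name comes from: log2(3) ≈ 1.58 bits of information per weight. The sketch below shows absmean quantization as described for BitNet b1.58, applied to a plain Python list: weights are divided by their mean absolute value, rounded, and clipped to [-1, 1]. It is a simplified illustration (a single per-tensor scale, no activation quantization, no packed storage), and the example weights are made up.

```python
import math
from typing import List, Tuple

def absmean_ternary(weights: List[float], eps: float = 1e-8) -> Tuple[List[int], float]:
    """Quantize weights to {-1, 0, +1} using one per-tensor absmean scale."""
    gamma = sum(abs(w) for w in weights) / len(weights)  # mean absolute value
    # Scale, round to the nearest integer, then clip into [-1, 1].
    # Note: Python's round() rounds half to even, so an exact 0.5 maps to 0.
    q = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return q, gamma

def dequantize(q: List[int], gamma: float) -> List[float]:
    """Approximate the original weights as q * gamma."""
    return [v * gamma for v in q]

BITS_PER_TERNARY_WEIGHT = math.log2(3)  # about 1.585 bits
```

For example, `absmean_ternary([0.9, -0.05, -1.2, 0.3])` uses a scale of 0.6125 and yields `[1, 0, -1, 0]`. Real kernels store these values in a packed encoding rather than as Python integers, which is where the memory savings over 16-bit weights come from.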

Theme 2: Advancements in Open-Source AI Models

- Flux, Black Forest Labs' 12-billion-parameter text-to-image model, launched on Fal.ai and drew comparisons to Midjourney.

- Writer introduced new 70B-parameter models with a 32K-token context window for the medical and financial domains, reporting that they outperform Google's medical model and GPT-4.

Theme 3: AI Development Tools and Platforms

- Microsoft launched GitHub Models, positioned as a competitor to Hugging Face, with a waitlist for early access.

- The sqlite-vec v0.1.0 extension brings vector similarity search to SQLite on a range of platforms.
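
Vector similarity search returns the stored embeddings closest to a query vector. The sketch below is a plain brute-force k-nearest-neighbour search in Python using Euclidean (L2) distance; it illustrates the kind of query such an extension answers, does not use sqlite-vec's own SQL interface, and the document IDs and 3-dimensional vectors are made up.

```python
import heapq
import math
from typing import Dict, List, Sequence, Tuple

def l2_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn(index: Dict[str, Sequence[float]], query: Sequence[float],
        k: int) -> List[Tuple[str, float]]:
    """Return the k (id, distance) pairs closest to query, nearest first.

    Brute force: every stored vector is compared with the query.
    """
    scored = ((doc_id, l2_distance(vec, query)) for doc_id, vec in index.items())
    return heapq.nsmallest(k, scored, key=lambda pair: pair[1])
```

With `index = {"a": [0.0, 0.0, 0.0], "b": [1.0, 0.0, 0.0], "c": [0.0, 3.0, 4.0]}`, the call `knn(index, [0.9, 0.0, 0.0], k=2)` returns `"b"` first and `"a"` second. A brute-force scan is simple and exact but costs one distance computation per stored vector for every query.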

Theme 4: Local LLM Deployment and Optimization Techniques

- Community members shared an open-source collection of RAG implementations and a guide to building llama.cpp with GPU acceleration.

Latent Space Discord

Meta introduced MoMa, a mixture-of-modality-aware-experts architecture for mixed-modal language modeling, reporting significant pretraining efficiency gains. Members discussed how efficiently BitNet can be fine-tuned and how quickly it produced results. Character.ai's shift in strategy following its deal with Google, under which Google hired its founders and licensed its technology, sparked conversations about talent transfer and startup viability. The DeepSeek API introduced context caching on disk, reducing costs and improving performance for requests that reuse earlier context, such as multi-turn conversations. The latest Latent Space podcast episode, "The Winds of AI Winter", celebrated 1 million downloads with an engaging recap of recent AI developments.
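
Context caching helps multi-turn conversations because each new request resends the earlier turns verbatim, so most of the prompt is a prefix the server has already seen. The sketch below is a hypothetical cost model, not DeepSeek's implementation or price list: it finds the longest previously seen token prefix of a request and bills those tokens at a discounted rate. The `PrefixCache` class, the token IDs, and both per-token prices are illustrative assumptions.

```python
from typing import List, Tuple

class PrefixCache:
    """Hypothetical prompt-prefix cache (illustrative only)."""

    def __init__(self) -> None:
        self._stored: List[Tuple[int, ...]] = []

    def longest_cached_prefix(self, tokens: List[int]) -> int:
        """Return how many leading tokens of `tokens` match some stored sequence."""
        best = 0
        for seq in self._stored:
            n = 0
            for a, b in zip(seq, tokens):
                if a != b:
                    break
                n += 1
            best = max(best, n)
        return best

    def store(self, tokens: List[int]) -> None:
        self._stored.append(tuple(tokens))

def request_cost(cache: PrefixCache, tokens: List[int],
                 miss_price: float = 1.0, hit_price: float = 0.1) -> float:
    """Bill cached prefix tokens at hit_price and the rest at miss_price (assumed prices)."""
    hit = cache.longest_cached_prefix(tokens)
    cost = hit * hit_price + (len(tokens) - hit) * miss_price
    cache.store(tokens)  # later turns can reuse this request as a prefix
    return cost
```

If the first turn is four tokens, it costs 4.0 units; a second turn that resends those four tokens plus two new ones costs 4 × 0.1 + 2 × 1.0 = 2.4 units instead of 6.0. Production systems index prefixes far more efficiently than this linear scan.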

LAION, FLUX Schnell Weaknesses, and More

Members on the LAION Discord discussed weaknesses in the FLUX Schnell model, cautioned against using it to generate synthetic datasets, and emphasized the value of curated datasets over random noise. They also identified bugs that had stalled progress on an LLM, underscoring the importance of debugging, and shifted their focus toward building strong baseline models.

On the Interconnects Discord, members showed interest in event sponsorship and expressed skepticism about a Character AI deal. Ai2 unveiled a new brand featuring sparkle emojis, the Magpie Ultra dataset was released on Hugging Face, and a member proposed hosting a dinner at the RL Conference.

On the OpenRouter Discord, users reported website accessibility issues and struggling Anthropic services. Members noted chatroom enhancements and a simpler process for obtaining an API key, and discussed usage limits on free models.

The LlamaIndex Discord saw the release of a tutorial on building a RAG pipeline and the development of an AI voice agent for Indian farmers. Members also addressed strategies for ReAct agents without tools and concerns about changes to LlamaIndex's service context, and reported issues with DSPy's latest update.

Discussions in the Modular (Mojo 🔥) Discord revolved around error handling, difficulties installing MAX, and the reliability of Mojo nightly builds; members recommended installing via Conda to ease these issues. In the OpenInterpreter Discord, highlights included sessions on Open Interpreter, running local LLMs, starting a llamafile server, and an Aider browser UI demo, along with a discussion of post-facto validation in LLM applications. DSPy Discord members explored meta-rewarding in LLMs, the MindSearch framework, and building summarization pipelines, and noted a call for Discord channel exports and interest in integrating AI into game character development.

In the OpenAccess AI Collective (axolotl) Discord, discussions covered fine-tuning Gemma2 2B, the search for Japan's best language model, and simpler ROCm installation. Members shared struggles with training Gemma2 and Llama3.1, along with concerns about the impact of prompt engineering. The LangChain AI Discord addressed documentation gaps and discussed implementing chat sessions, Postgres schema issues, testing LLMs, and Community Research Call updates. In the Torchtune Discord, topics included QAT quantizers, SimPO PR review requests, proposals for a documentation overhaul, and feedback on a new models page. Conversations in the MLOps @Chipro Discord centered on computer vision, machine learning trends at conferences, skepticism about genAI ROI, the influence of funding on which topics get discussed, and a desire for broader conversations. The Alignment Lab AI Discord touched on questions about image generation time and batch processing capabilities.

Discord Guild Status and Discussions

This section notes that several Discord guilds, including Mozilla AI, DiscoResearch, and AI21 Labs, had no new activity, and offers to remove guilds that stay inactive. It then presents detailed channel summaries covering Flux model performance, GPU hosting services, licensing and model ownership, practical use in AI art, and photorealistic rendering. It also covers the Unsloth AI channels, with discussions on training techniques, dataset preparation, model-loading best practices, and compatibility issues between models. Finally, it summarizes a community-collaboration channel with timeline inquiries and an announcements channel covering neural network simulation, image clustering, synthetic datasets, knowledge distillation trends, and new models for the finance and medical sectors.

Exploring Knowledge Distillation, Local LLM Applications, AI Bots, and More

This section covers topics discussed in the Hugging Face community. Members explored knowledge distillation, a technique that transfers what a large 'teacher' model has learned to a smaller 'student' model, typically by training the student to match the teacher's output probabilities. The growing role of local large language models (LLMs) in enterprises adopting generative AI was highlighted. Members also shared an overview of building NLP applications with Hugging Face, emphasizing collaboration and its open-source libraries, and an in-depth, beginner-friendly look at the evolution of AI bots built on LLMs and Retrieval-Augmented Generation (RAG). The section also covers topics from the Perplexity AI Discord, including free Perplexity Pro for Uber One members and user experiences.
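
A common way to implement the teacher-to-student transfer is the soft-target loss from Hinton et al.'s original distillation work: both models' logits are softened with a temperature T, and the student is penalized by the KL divergence from the teacher's distribution. The pure-Python sketch below computes that loss for a single example; the default temperature of 2.0 is an arbitrary assumption, and real training code would compute this on batched tensors in a deep learning framework.

```python
import math
from typing import List

def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2.

    The T^2 factor keeps gradient magnitudes comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student) if p > 0)
    return temperature ** 2 * kl
```

The loss is zero when the student reproduces the teacher's logits and grows as their softened distributions diverge. In practice this term is usually combined with the ordinary cross-entropy loss on ground-truth labels, with a tunable weight between the two.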

LM Studio and Perplexity AI Updates

This section covers updates and insights from the LM Studio and Perplexity AI communities. Highlights include a mathematical breakthrough, the impact of digital organization on workplace efficiency, the impressive performance of the Medallion Fund, and the development of hybrid antibodies to combat HIV. Discussions in LM Studio covered GPU performance, model training challenges, feature requests, and debates over competing GPU technologies. In the CUDA Mode channels, discussions revolved around Nvidia GPU instruction cycles, fluctuating accuracy scores, Triton tutorials, and requests for lectures on ML compilers.

CUDA and AI Discussions

This section covers CUDA Mode and other AI discussions, including progress on a Llama 3 implementation, newly identified KV cache issues, acquihires in the AI industry, randomness in tensor operations, and the comparative performance of AMD's RDNA and CDNA architectures. Members also discussed learning GPU compute, travel plans and event links for the PyTorch conference, and invitations expected to go out soon. Other topics included the MoMa architecture for mixed-modal language modeling, BitNet fine-tuning, Character.ai's strategy shift following its deal with Google, the DeepSeek API's new disk-based context caching, and LlamaCoder, which generates React apps efficiently. Links to related tweets, GitHub repositories, and other resources were shared.

Exploring AI Technologies and Toolkits

This section covers a range of AI technologies and toolkits, including discussions of how a Singapore accent affects user experience, feature clamping in coding models, and shifts in the AI landscape from models like Claude 3.5 to Llama 3.1. Conversations also covered the effectiveness of tools like Cody and Aider.nvim, upcoming features in Claude, and Composer's new functionality. Participants shared insights on the Cohere platform, including aspect-based sentiment analysis, AI project suggestions, and using the Cohere API for classification. Further discussions touched on AI hackathons, productivity tools like the Neurosity Crown, Dwarf Fortress gameplay, and gaming equipment preferences. Finally, the Eleuther channel explored GitHub's competition with Hugging Face, EU AI regulations, model evaluation metrics, new neural network architectures, and tools that help LLMs comprehend code.

Nous Research AI ▷ #reasoning-tasks-master-list

Members discussed Llama 3.1's performance on Groq, how temperature settings affect output quality, and a recommended baseline temperature for better results. The conversation highlighted challenges with output instability and collaborative efforts to tune the model's behavior.
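
The effect of temperature comes from how sampling rescales a model's logits before the softmax. The sketch below, using made-up logits for three candidate tokens, shows that dividing by a temperature below 1 concentrates probability on the top token (more deterministic output), while a temperature above 1 flattens the distribution (more varied output). It is a generic illustration, not the sampling code behind Groq's API, and it does not assume any particular baseline value.

```python
import math
import random
from typing import List

def temperature_probs(logits: List[float], temperature: float) -> List[float]:
    """Softmax over logits / temperature; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive (use argmax for greedy decoding)")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def sample_token(logits: List[float], temperature: float, rng: random.Random) -> int:
    """Draw one token index from the temperature-scaled distribution."""
    probs = temperature_probs(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]

if __name__ == "__main__":
    logits = [2.0, 1.0, 0.1]  # made-up scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        print(t, [round(p, 3) for p in temperature_probs(logits, t)])
```

Running the script prints the distribution at three temperatures; the top token's probability falls as the temperature rises. Passing a seeded `random.Random` to `sample_token` makes sampled outputs reproducible when comparing settings.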

Quarto Website Setup and File Structure Confirmation

A member opened a PR that sets up a Quarto website for the reasoning tasks project to improve its online presence. The PR describes the changes in detail and includes images for clarity. A member also clarified the folder structure: 'chapters' is a top-level directory, and the other files also sit at the top level, which keeps the repository easy to navigate and manage.

Service Issues, Group Chat, Models Availability, and Model Limitations

Regional connection issues pointed to possible localized outages affecting users. Anthropic services were reported down or struggling under severe load. Members clarified how Group Chat works in the OpenRouter (OR) Playground, asked about the status of the Yi Large and Fireworks models, and noted ongoing adjustments to the models available on the platform. Understanding the limits on free models was described as crucial for managing server load and ensuring fair access. Links to model updates and incident reports were shared.

OpenAccess AI Collective (axolotl) messages

The OpenAccess AI Collective (axolotl) channels included feedback on a merged PR, the status of knowledge distillation (KD) development, insights from the adam-atan2 paper, and excitement over the DistillKit release. Another channel focused on challenges training Gemma2 and Llama3.1, the impact of prompt engineering, concerns about training duration, and issues with a non-traditional template structure. In LangChain AI, conversations covered Community Research Call highlights, collaboration opportunities, and a blog post on testing LLMs. The Torchtune dev channel covered clarification of QAT quantizers, a SimPO PR review, a documentation overhaul RFC, and feedback on a potential new models page. In the MLOps @Chipro channel, members discussed the importance of computer vision, conference trends, skepticism about genAI ROI, the influence of funding, and the desire for broader conversations in ML. Finally, Alignment Lab AI discussed image generation time with FLUX Schnell on an A100 and batch processing capabilities.

FAQ

Q: What is Gemini 1.5 Pro known for in the AI space?

A: Gemini 1.5 Pro claimed the #1 spot on Chatbot Arena and excels in multilingual tasks and technical categories such as Math, Hard Prompts, and Coding.

Q: What is FLUX.1 and who released it?

A: FLUX.1 is a new state-of-the-art text-to-image model from Black Forest Labs, whose launch was announced by @robrombach. Its FLUX.1 [schnell] variant is available under an Apache 2.0 license.

Q: What is notable about the Llama 3.1 405B model?

A: Llama 3.1 405B is now available for free testing and is described as the largest open-source model to date.

Q: What notable advancements in open-source AI models does this issue mention?

A: Flux, Black Forest Labs' 12-billion-parameter text-to-image model, launched on Fal.ai and drew comparisons to Midjourney, and Writer introduced new 70B-parameter, 32K-context models for the medical and financial domains.

Q: What AI development tools and platforms does this issue discuss?

A: LangChain introduced LangGraph Studio, an agent IDE for developing LLM applications, and Microsoft launched GitHub Models as a competitor to Hugging Face.

Q: What are some techniques mentioned for local LLM deployment and optimization?

A: Community members shared an open-source collection of RAG implementations and a guide to building llama.cpp with GPU acceleration. The issue also covers MoMa, a new architecture for mixed-modal language modeling.

Q: What are some recent partnership announcements in the AI industry?

A: Perplexity and Uber partnered to offer Uber One subscribers one year of Perplexity Pro for free, and @rohanpaul_ai shared updates on model hosting on GitHub.
