[AINews] FrontierMath: A Benchmark for Evaluating Advanced Mathematical Reasoning in AI • Buttondown

Chapters

AI Twitter Recap

AI Reddit Recap

LM Studio and Model Performance

Advancing AI Technologies in Different Discords

Perplexity AI Server Issues and User Concerns

HuggingFace AI Discussions

Innovations and Collaborations in AI Communities

AI Project Collaborations and Model Insights

FrontierMath Benchmark and Qwen2.5 Coder Model

Navigating AI Conversations

AI Use Cases and User Feedback

Stanislas Polu shares Dust's journey

Approach with NVIDIA Enroot, CUDA Coalescing, and Model Optimization with Bitwidth Integration

Discussing AI Projects and API Issues

LLM Agents (Berkeley MOOC) Hackathon Announcements

AI Twitter Recap


AI Research and Development

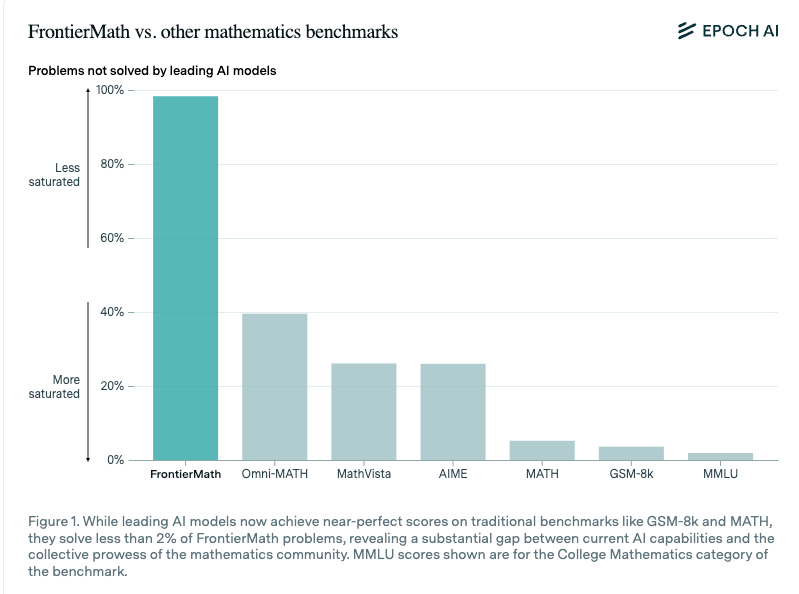

- Frontier Model Performance: @karpathy discusses how the FrontierMath benchmark reveals that current models struggle with complex problem-solving, highlighting Moravec's paradox in AI evaluations.

- Mixture-of-Transformers: @TheAITimeline introduces Mixture-of-Transformers (MoT), a sparse multi-modal transformer architecture that reduces computational costs while maintaining performance across various tasks.

- Chain-of-Thought Improvements: @_philschmid explores how Incorrect Reasoning + Explanations can enhance Chain-of-Thought (CoT) prompting, improving LLM reasoning across models.
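
As a minimal sketch of the MoT idea, assuming the design described in the paper (modality-specific non-embedding parameters such as feed-forward networks, attention projections, and layer norms, combined with global self-attention over the full mixed-modality sequence), the toy layer below routes each token to its modality's feed-forward weight by a fixed tag rather than a learned router. The scalar weights, the `MODALITY_FFN` table, and the uniform "attention" are hypothetical simplifications, not the authors' code.

```python
from typing import Dict, List, Tuple

# Hypothetical per-modality feed-forward "weights" (scalars for readability).
# Real MoT layers also keep separate attention projections and layer norms
# per modality; this toy only separates the feed-forward step.
MODALITY_FFN: Dict[str, float] = {"text": 2.0, "image": 0.5, "speech": 1.0}

Token = Tuple[str, float]  # (modality tag, hidden value)


def global_attention(values: List[float]) -> List[float]:
    """Toy global self-attention: every token attends uniformly to every
    token in the sequence, whatever its modality, so each output is the mean."""
    mean = sum(values) / len(values)
    return [mean] * len(values)


def mot_layer(tokens: List[Token]) -> List[Token]:
    """One toy MoT layer: global attention over the whole sequence, then a
    residual feed-forward step whose weight is chosen by the token's modality."""
    attended = global_attention([h for _, h in tokens])
    out: List[Token] = []
    for (modality, _), h in zip(tokens, attended):
        w = MODALITY_FFN[modality]  # deterministic routing by modality tag
        out.append((modality, h + w * h))  # residual + modality-specific FFN
    return out


if __name__ == "__main__":
    seq = [("text", 1.0), ("image", 3.0), ("text", 2.0)]
    print(mot_layer(seq))  # [('text', 6.0), ('image', 3.0), ('text', 6.0)]
```

Each token passes through only its own modality's parameters, so per-token compute stays that of a single dense model even as the total parameter count grows with the number of modalities.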

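
One plausible way to assemble a Chain-of-Thought prompt that uses incorrect reasoning plus explanations is sketched below, assuming the technique pairs correct demonstrations with an incorrect reasoning chain and an explanation of its mistake. The `Demo` fields and the prompt layout are hypothetical; the exact format in the work @_philschmid discusses may differ.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Demo:
    question: str
    reasoning: str
    answer: str  # always the correct final answer
    # Set only for incorrect demos: explains where the reasoning goes wrong.
    error_explanation: Optional[str] = None


def build_prompt(demos: List[Demo], question: str) -> str:
    """Interleave correct and incorrect demonstrations, then pose the question."""
    blocks = []
    for d in demos:
        if d.error_explanation is None:
            blocks.append(
                f"Q: {d.question}\nCorrect reasoning: {d.reasoning}\nA: {d.answer}"
            )
        else:
            blocks.append(
                f"Q: {d.question}\nIncorrect reasoning: {d.reasoning}\n"
                f"Why it is wrong: {d.error_explanation}\nCorrect answer: {d.answer}"
            )
    blocks.append(f"Q: {question}\nLet's think step by step.")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    demos = [
        Demo("What is 12 * 3?", "12 * 3 = 36.", "36"),
        Demo(
            "What is 15 - 7?",
            "15 - 5 = 10, then 10 - 2 = 9.",
            "8",
            error_explanation="The last step is wrong: 10 - 2 = 8, not 9.",
        ),
    ]
    print(build_prompt(demos, "What is 23 + 19?"))
```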

AI Industry News and Acquisitions

- OpenAI Domain Acquisition: @adcock_brett reports that OpenAI acquired the chat.com domain, now redirecting to ChatGPT, although the purchase price remains undisclosed.

- Microsoft's Magentic-One Framework: @adcock_brett announces Microsoft's Magentic-One, an agent framework that coordinates multiple agents on real-world tasks, a release framed as a signal of the arriving AI agent era.

- Anthropic's Claude 3.5 Haiku: @adcock_brett shares that Anthropic released Claude 3.5 Haiku on various platforms; it outperforms GPT-4o on certain benchmarks but comes with higher pricing.

- xAI Grid Power Approval: @dylan522p mentions that xAI received approval for 150MW of grid power from the Tennessee Valley Authority, with Trump's support aiding Elon Musk in expediting power acquisition.
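
The orchestration pattern behind Magentic-One can be illustrated with a toy dispatcher. This is a minimal sketch, not the Magentic-One (AutoGen) API: Magentic-One's lead Orchestrator plans the task, tracks progress in ledgers, and delegates to specialized agents such as a WebSurfer and a Coder; here the plan is fixed in advance, the agents are hypothetical stub functions, and no re-planning happens.

```python
from typing import Callable, Dict, List, Tuple

Agent = Callable[[str], str]


def web_surfer(subtask: str) -> str:
    """Hypothetical stub for a browsing agent."""
    return f"search results for: {subtask}"


def coder(subtask: str) -> str:
    """Hypothetical stub for a code-writing agent."""
    return f"code for: {subtask}"


AGENTS: Dict[str, Agent] = {"WebSurfer": web_surfer, "Coder": coder}


def orchestrate(plan: List[Tuple[str, str]]) -> List[str]:
    """Dispatch each (agent name, subtask) step in order and keep a simple
    progress ledger of what each agent returned."""
    ledger: List[str] = []
    for agent_name, subtask in plan:
        agent = AGENTS.get(agent_name)
        if agent is None:
            # A real orchestrator would re-plan here; the toy just records it.
            ledger.append(f"[skipped] no agent named {agent_name!r}")
            continue
        ledger.append(f"[{agent_name}] {agent(subtask)}")
    return ledger


if __name__ == "__main__":
    plan = [("WebSurfer", "latest GPU prices"), ("Coder", "plot the prices")]
    for entry in orchestrate(plan):
        print(entry)
```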


AI Applications and Tools

- LangChain AI Tools:

  - @LangChainAI unveils the Financial Metrics API, enabling real-time retrieval of various financial metrics for more than 10,000 active stocks.

  - @LangChainAI introduces Document GPT, featuring PDF upload, a Q&A system, and API documentation via Swagger.

  - @LangChainAI launches LangPost, an AI agent that generates LinkedIn posts from newsletter articles or blog posts using few-shot encoding.

- Grok Engineer with xAI: @skirano demonstrates how to create a Grok Engineer with @xai, taking advantage of the xAI API's compatibility with the OpenAI and Anthropic APIs to generate code and folders seamlessly.


Technical Discussions and Insights

- Human Inductive Bias vs. LLMs: @jd_pressman debates whether human inductive bias generalizes algebraic structures out-of-distribution (OOD) without tool use, suggesting that LLMs need further polishing to match human biases.

- Handling Semi-Structured Data in RAG: @LangChainAI addresses the limitations of text embeddings in RAG applications, proposing the use of knowledge graphs and structured tools to overcome these challenges.

- Autonomous AI Agents in Bureaucracy: @nearcyan envisions using **agentic
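
To illustrate why structured tools help with semi-structured data in RAG, the sketch below answers a two-hop question ("where are AcmeCorp's customers located?") with exact lookups over a tiny in-memory triple store, where embedding similarity over text chunks could miss one of the hops. The entities, predicates, and `TripleStore` class are hypothetical, and this is not LangChain's knowledge-graph API.

```python
from collections import defaultdict
from typing import Dict, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, predicate, object)


class TripleStore:
    """Minimal knowledge graph indexed by (subject, predicate)."""

    def __init__(self, triples: List[Triple]) -> None:
        self._index: Dict[Tuple[str, str], Set[str]] = defaultdict(set)
        for s, p, o in triples:
            self._index[(s, p)].add(o)

    def objects(self, subject: str, predicate: str) -> Set[str]:
        # .get avoids inserting empty entries into the defaultdict.
        return set(self._index.get((subject, predicate), set()))

    def two_hop(self, subject: str, p1: str, p2: str) -> Set[str]:
        """Follow p1 from subject, then p2 from every intermediate node."""
        result: Set[str] = set()
        for mid in self.objects(subject, p1):
            result |= self.objects(mid, p2)
        return result


if __name__ == "__main__":
    kg = TripleStore([
        ("AcmeCorp", "supplies", "WidgetCo"),
        ("AcmeCorp", "supplies", "GadgetInc"),
        ("WidgetCo", "located_in", "Berlin"),
        ("GadgetInc", "located_in", "Austin"),
    ])
    print(sorted(kg.two_hop("AcmeCorp", "supplies", "located_in")))
```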

AI Reddit Recap

Theme 1: MIT's ARC-AGI-PUB Model and Testing:

- MIT achieved a 61.9% score on ARC-AGI-PUB using an 8B LLM with test-time training (TTT).

- Discussion of whether TTT is a fair and legitimate approach.

- Debate on specialized optimization versus general-purpose systems.

- Skepticism on the broader applicability of the model and the future of AGI.
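
TTT briefly fine-tunes the model on data derived from each test task's own demonstration pairs before predicting. The sketch below covers only that data-generation step, assuming a leave-one-out scheme plus a geometric augmentation along the lines the MIT team described; the grids are toy examples, and the per-task fine-tuning itself (for example with LoRA adapters) is out of scope.

```python
from typing import List, Tuple

Grid = List[List[int]]
Pair = Tuple[Grid, Grid]            # (input grid, output grid)
Task = Tuple[List[Pair], Pair]      # (context demos, held-out target pair)


def flip_horizontal(grid: Grid) -> Grid:
    """Mirror each row of a grid."""
    return [list(reversed(row)) for row in grid]


def leave_one_out(demos: List[Pair]) -> List[Task]:
    """For n demos, build n synthetic tasks: n - 1 demos as context and the
    held-out demo as the example the model must learn to predict."""
    return [(demos[:i] + demos[i + 1:], demos[i]) for i in range(len(demos))]


def augment(tasks: List[Task]) -> List[Task]:
    """Add a flipped copy of every task. Applying the same flip to every grid
    yields a new, internally consistent task, doubling the training data."""
    flipped = [
        (
            [(flip_horizontal(x), flip_horizontal(y)) for x, y in context],
            (flip_horizontal(target[0]), flip_horizontal(target[1])),
        )
        for context, target in tasks
    ]
    return tasks + flipped


if __name__ == "__main__":
    demos = [([[1, 0]], [[0, 1]]), ([[2, 0]], [[0, 2]]), ([[3, 0]], [[0, 3]])]
    training_tasks = augment(leave_one_out(demos))
    print(len(training_tasks))  # 6
```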

Theme 2: Qwen Coder 32B and AI Coding:

- Anticipation for the Qwen Coder 32B release and its potential impact on the AI community.

- Discussions on training models for direct translation from English to machine language.

- Optimism on AI improving coding workflows and fixing simple errors.

Theme 3: M4 128 Hardware Exploration and Model Testing:

- Exploration of the Llama 3.2 Vision 90B and Mixtral 8x22B models on M4 128 hardware.

- Discussions on processing speeds, hardware configurations, and enthusiast motivations.
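
A back-of-the-envelope estimate shows which of these models can fit on a 128 GB machine. This sketch counts only weight memory (parameters × bits per weight ÷ 8). The parameter counts are approximate nominal figures, and in practice less than the full 128 GB is available to the GPU; the KV cache and runtime also need headroom.

```python
from typing import Dict


def weight_memory_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes).
    Ignores the KV cache, activations, and runtime overhead."""
    return params_billion * bits_per_weight / 8


# Approximate total parameter counts; check each model card for exact values.
MODELS: Dict[str, float] = {
    "Llama 3.2 Vision 90B": 90,
    "Mixtral 8x22B": 141,  # all experts must be resident, not just active ones
}

if __name__ == "__main__":
    budget_gb = 128
    for name, params in MODELS.items():
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            verdict = "under" if gb < budget_gb else "over"
            print(f"{name} @ {bits}-bit: ~{gb:.0f} GB ({verdict} {budget_gb} GB)")
```

By this estimate, 4-bit quantization brings both models well under 128 GB (about 45 GB and 70 GB of weights), while Mixtral 8x22B at 8-bit (about 141 GB) does not fit.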

Theme 4: AlphaFold 3 Open-Sourcing for Academic Research:

- AlphaFold 3 model code and weights available for academic research.

Other AI Subreddit Recap:

- Posts across other AI subreddits covered EasyAnimate, OpenAI developments, AI model deployment, GPU optimizations, and model benchmarking techniques.

LM Studio and Model Performance

Users on the LM Studio Discord channel discussed various topics related to model performance and application functionalities:

- GPU Utilization on MacBooks: Users asked how to check GPU utilization on an M4 MacBook while running LM Studio, after seeing generation speeds that seemed slow compared with other setups.

- Model Loading Issues: A user reported problems with LM Studio indexing a folder containing GGUF files, and troubleshooting steps were suggested.

- Pydantic Errors with LangChain: Users encountered Pydantic errors when integrating LangChain and speculated about the cause.

- Gemma 2 27B Performance: Gemma 2 27B performed exceptionally well at lower precision settings, prompting discussion of the benefits of different precision levels.

- Laptop Recommendations for LLM Inference: Inquiries were made about performance differences between newer Intel Core Ultra CPUs and older i9 models for LLM inference, with recommendations and considerations provided.
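
When a folder of GGUF files does not show up, one quick check independent of LM Studio is whether each file is a complete GGUF file. Per the GGUF specification, a file begins with the magic bytes `GGUF` followed by a little-endian uint32 format version; the sketch below reads only those first 8 bytes. It does not reproduce LM Studio's own indexing logic, which may also depend on folder layout, so LM Studio's documentation is worth checking as well.

```python
import struct
from pathlib import Path
from typing import Dict, Optional

GGUF_MAGIC = b"GGUF"


def gguf_version(path: Path) -> Optional[int]:
    """Return the GGUF format version if the file starts with a valid GGUF
    header (magic + uint32 version), else None."""
    with path.open("rb") as f:
        header = f.read(8)
    if len(header) < 8 or header[:4] != GGUF_MAGIC:
        return None
    (version,) = struct.unpack("<I", header[4:8])  # little-endian uint32
    return version


def scan_folder(folder: Path) -> Dict[str, Optional[int]]:
    """Map every *.gguf file under `folder` (recursively) to its version,
    or None if the header is missing or truncated."""
    return {
        str(p.relative_to(folder)): gguf_version(p)
        for p in sorted(folder.rglob("*.gguf"))
    }


if __name__ == "__main__":
    import sys

    for name, version in scan_folder(Path(sys.argv[1])).items():
        print(f"{name}: {'not a valid GGUF header' if version is None else f'GGUF v{version}'}")
```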

Advancing AI Technologies in Different Discords

Discussions across various Discord channels highlighted advances in AI technologies in several domains. Key topics included prioritizing GPU over CPU specifications for workloads such as LLM inference, DeepMind's advances in neural compression techniques, and new models such as the Aria multimodal MoE model. Other threads covered handling floating-point exceptions, infrastructure challenges in AI agent development, and benchmarking strategies for fine-tuned LLMs.

Perplexity AI Server Issues and User Concerns

Perplexity AI users reported server issues and a lack of communication from the support team. Some had trouble logging in and accessing threads, and messages disappeared. Users voiced frustration over poor support and recurring bugs and called for better communication and transparency from the company. Some began looking for alternatives, questioning Perplexity's value amid the ongoing problems. The discussion also touched on how technology firms will shape user experience and customer service in the future.

HuggingFace AI Discussions

Discussions in the HuggingFace channels spanned new model releases, collaborations, and problems users ran into. Members discussed the performance of models such as Qwen Coder and Llama, shared resources for fine-tuning and dataset preparation, and explored solutions such as using Cloudflare to tunnel access to models. The range of topics reflects an active community pushing the boundaries of AI technology.

Innovations and Collaborations in AI Communities

The AI community showcased a range of innovations and collaborations, from YouTube tutorials on ChatGPT to discussions of multimodal machine learning and autonomous AI agents. Opportunities for integration talks, open-source contributions, and academic collaborations were highlighted. Research topics included advances in model training techniques, test-time scaling, and multimodal retrieval, and members also discussed the challenges of research networking, unlearning mechanisms in language models, and the character of model responses.

AI Project Collaborations and Model Insights

In this section, members discuss various AI projects and collaborations. One member showcases a Google Gemini AI system that emulates reasoning, while another explores dynamic model functions and growth opportunities for collaborating servers. Members share experiences with AI models, particularly around Bard's launch, along with insights on training music models. Research discussions cover low-data image model training, normalized transformers, value residual learning, batch size scaling, and learnable skip connections, as well as deep neural network modeling, intervention techniques in AI, SVD in model updates, behavioral changes in models, and physics simulations with ML.

FrontierMath Benchmark and Qwen2.5 Coder Model

- Lilian Weng departs OpenAI: After a long tenure, Lilian Weng announced her departure from OpenAI, triggering discussions about community reactions and potential offshoots.

- Introduction of FrontierMath as a new benchmark: FrontierMath, a benchmark for complex math problems, highlights a significant gap in AI models' capabilities, sparking discussions on difficulty and implications.

- Qwen2.5 Coder models launched: The Qwen2.5 Coder family, led by the flagship 32B-Instruct model, performs competitively on existing benchmarks; details were shared, with the accompanying paper still pending.

- Dario Amodei on AI scaling: Dario Amodei discussed scaling trends across modalities, hinting at human-level AI in the near future, while addressing challenges in data quality and architecture constraints.

- Character building at Anthropic and OpenAI: Both labs are working to give their models an ethical, responsible character and behavior, aiming for experiences that are both user-friendly and safer.

Navigating AI Conversations

One participant called the ARC Prize overrated while acknowledging that people will hill-climb on it regardless. Others doubted that pure transformer methods can reach high ARC scores, pointing to the difficulty of the competition, while another view held that an ensemble or discrete synthesis approach could outperform a pure transformer and potentially solve 75%+ of ARC. The discussion also covered Gary Marcus defending his position on AGI and the entertainment value of Twitter debates.

Members compared Twitter with Bluesky, with some expressing relief at having switched to Bluesky; Threads was critiqued for lackluster engagement, and upcoming AI-related literature was mentioned. Other threads covered model merging techniques, roleplaying model evaluation, dynamic resolution in the Qwen2-VL model, and HCI research on AI interaction. Privacy concerns were highlighted as a challenge for personality research, alongside suggestions for HCI research insights.

The section also mentioned Neural Notes, which explores language model optimization, as well as discussions of AI progress, GPT models, and the future of AI development, giving a broad view of ongoing debates in the Interconnects community.

AI Use Cases and User Feedback

Parallel Processing and Story Prompts:

- Multiple chats can be run in parallel to process JSON objects efficiently, reducing processing time.

- Story prompts should focus on desired outcomes rather than restrictions for better story quality.

- Providing precise guidance and examples in prompts for improved story generation.
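
The parallel-processing tip above, running several chats over independent JSON objects at once, maps naturally onto a thread pool. In this minimal sketch, `process_with_chat` is a hypothetical stand-in for a real chat or API call; threads suit this I/O-bound pattern, and `max_workers` should stay within the provider's rate limits.

```python
import json
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List


def process_with_chat(obj: Dict[str, Any]) -> Dict[str, Any]:
    """Hypothetical stand-in for sending one JSON object to its own chat
    session and parsing the reply; replace with a real API call."""
    return {"id": obj["id"], "summary": obj["title"].strip().lower()}


def process_all(raw_json: str, max_workers: int = 4) -> List[Dict[str, Any]]:
    """Process a JSON array of independent objects concurrently."""
    objects = json.loads(raw_json)
    # Threads suit I/O-bound work such as waiting on network responses.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map returns results in input order, not completion order.
        return list(pool.map(process_with_chat, objects))


if __name__ == "__main__":
    raw = '[{"id": 1, "title": " Hello "}, {"id": 2, "title": "World"}]'
    print(process_all(raw))
```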

NotebookLM Discord Announcement:

- Users were invited to take the NotebookLM Feedback Survey to help shape future enhancements.

- Participants may receive a $20 gift code after completing the survey.

- Submitting interest alone does not guarantee a gift; rewards are given only after the survey is completed.

NotebookLM Discord Use Cases:

- Users discussed various use cases for NotebookLM, including job search preparation and educational summaries.

- Experimentation with NotebookLM for sports commentary and innovative podcast formats.

- Preferences for visual versus audio learning discussed, along with optimization techniques.

NotebookLM Discord General:

- Users compared NotebookLM with other AI tools for productivity tasks.

- Users raised issues with podcast features, Google Drive syncing, and limitations in mobile usage.

- Users asked about exporting notes, API features, and support for other languages.

LM Studio Hardware Discussion:

- Discussions on Gemma 2 performance, H100 cluster assistance, and home server setup for AI/ML.

- Recommendations for laptop options for LLM inference and analysis of model throughput discrepancies.

- Challenges related to LM Studio model loading, Pydantic errors, and text-to-speech model functionality shared.

Latent Space AI General Chat:

- Launch of Qwen 2.5 Coder model family and insights into AI music analysis.

- An in-depth interview with Dario Amodei and the challenges models face on the FrontierMath benchmark.

- Test-time compute techniques discussed and their implications for AI evaluation.
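
One widely used test-time compute technique is self-consistency: sample several answers to the same prompt and return the most common one. A minimal sketch, assuming answers can be compared as exact strings; `sample` is a hypothetical stand-in for one model call at non-zero temperature.

```python
from collections import Counter
from itertools import cycle
from typing import Callable, List, Tuple


def majority_vote(answers: List[str]) -> Tuple[str, float]:
    """Return the most frequent answer and the fraction of samples agreeing.
    Ties go to the answer seen first."""
    if not answers:
        raise ValueError("need at least one answer")
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / len(answers)


def self_consistency(sample: Callable[[], str], n: int) -> Tuple[str, float]:
    """Spend extra inference compute: draw n samples, then vote."""
    return majority_vote([sample() for _ in range(n)])


if __name__ == "__main__":
    # Hypothetical sampler that cycles through canned model outputs.
    fake_outputs = cycle(["42", "41", "42", "42", "7"])
    print(self_consistency(lambda: next(fake_outputs), n=5))  # ('42', 0.6)
```

The agreement fraction doubles as a rough confidence signal: raising `n` trades more compute for a more stable vote.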

Stanislas Polu shares Dust's journey

In a recent episode, Stanislas Polu discussed his early days at OpenAI and the development of Dust XP1, which reached 88% daily active usage among employee users. He joked that he may have disclosed too much about OpenAI's operations from 2019 to 2022.

Voice questions for the big recap: Listeners are encouraged to submit voice questions via SpeakPipe for the upcoming 2 Years of ChatGPT recap episode. The open call aims to gather community insights and questions following the show's successful run.

Challenges in AI agent infrastructure: The conversation revisited the infrastructure challenges of building effective AI agents, including the buy-vs-build decisions startups face. Polu also described the hurdles around how compute resources evolved and were allocated in OpenAI's early days.

The future of SaaS and AI: A significant segment covered how SaaS is evolving alongside AI and what that means for future software. The talk also touched on single-employee companies competing in the race to build a $1B company, challenging traditional models.

Approach with NVIDIA Enroot, CUDA Coalescing, and Model Optimization with Bitwidth Integration

Seeking Help with NVIDIA Enroot: A member asked about experiences with NVIDIA's Enroot container runtime while setting up a development environment on a cluster, noting some challenges and inviting community feedback.

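Understanding Coalescing in CUDA: Members clarified that coalescing in CUDA happens 'across' the threads of a warp: the hardware combines the memory accesses that different threads issue for the same load or store instruction, rather than the accesses a single thread makes over time. Common confusion about how access patterns affect CUDA performance was also noted.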
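
A simplified model of the idea: for one load instruction, count how many 32-byte memory sectors the 32 threads of a warp touch. Contiguous access (thread t reads element t) needs the minimum number of sectors, while strided access spreads the same useful bytes over many more. This is a host-side Python illustration rather than CUDA code, and it ignores caching and other details of real hardware.

```python
from typing import List

WARP_SIZE = 32
SECTOR_BYTES = 32  # simplified model: memory is fetched in 32-byte sectors


def warp_addresses(base_elem: int, stride_elems: int, elem_bytes: int = 4) -> List[int]:
    """Byte address each thread of one warp reads: thread t loads element
    base_elem + t * stride_elems (like a[base + t * stride] with float a[])."""
    return [(base_elem + t * stride_elems) * elem_bytes for t in range(WARP_SIZE)]


def sectors_touched(addresses: List[int]) -> int:
    """Distinct 32-byte sectors the warp's single load instruction needs."""
    return len({addr // SECTOR_BYTES for addr in addresses})


def efficiency(addresses: List[int], elem_bytes: int = 4) -> float:
    """Useful bytes divided by fetched bytes for one warp-wide load."""
    return len(addresses) * elem_bytes / (sectors_touched(addresses) * SECTOR_BYTES)


if __name__ == "__main__":
    for stride in (1, 2, 32):
        addrs = warp_addresses(0, stride)
        print(f"stride {stride:>2}: {sectors_touched(addrs):>2} sectors, "
              f"{efficiency(addrs):.1%} of fetched bytes used")
```

In this model, stride 1 touches 4 sectors (all bytes used), stride 2 touches 8 (half wasted), and stride 32 touches 32; shifting a contiguous access off alignment (`base_elem=1`) costs one extra sector.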

Optimizing Models with Bitwidth Integration: The discussion focused on incorporating bitwidth into the error function used for model optimization. Members suggested starting with linear operations for GPU quantization work and proposed leveraging existing quantization-aware training (QAT) frameworks for these optimizations, even though the original work focused on convolutional models.
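
One way to read "bitwidth in the error function" is as an objective that adds a bitwidth penalty to the quantization error. The sketch below is an assumption, not the method discussed: it runs a post-training search that picks, per layer, the bitwidth b minimizing reconstruction MSE plus λ·b. In a QAT framework, a similar penalty would instead be part of the training loss. The layer values and λ are hypothetical.

```python
from typing import Dict, List, Sequence


def fake_quantize(values: Sequence[float], bits: int) -> List[float]:
    """Symmetric, per-tensor uniform quantization followed by dequantization."""
    max_abs = max(abs(v) for v in values)
    if max_abs == 0:
        return [0.0] * len(values)
    levels = 2 ** (bits - 1) - 1  # 1 for 2-bit (ternary), 7 for 4-bit, 127 for 8-bit
    scale = max_abs / levels
    return [round(v / scale) * scale for v in values]


def mse(a: Sequence[float], b: Sequence[float]) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def choose_bitwidths(
    layers: Dict[str, Sequence[float]],
    candidates: Sequence[int] = (2, 4, 8),
    lam: float = 1e-3,
) -> Dict[str, int]:
    """For each layer, pick the bitwidth b minimizing
    MSE(w, Q_b(w)) + lam * b: an error term plus a bitwidth penalty."""
    choice: Dict[str, int] = {}
    for name, w in layers.items():
        choice[name] = min(candidates, key=lambda b: mse(w, fake_quantize(w, b)) + lam * b)
    return choice


if __name__ == "__main__":
    layers = {"coarse": [1.0, -1.0, 0.0, 1.0], "fine": [1.0, 0.5, 0.25, 0.1]}
    print(choose_bitwidths(layers, lam=1e-3))  # {'coarse': 2, 'fine': 4}
    print(choose_bitwidths(layers, lam=1e-4))  # {'coarse': 2, 'fine': 8}
```

A larger λ pushes layers toward fewer bits; a layer whose values are already exactly representable at low precision ("coarse") keeps the smallest bitwidth either way.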

Discussing AI Projects and API Issues

This section covers discussions about AI projects and API issues. Users shared insights on building an AI interview bot, testing the Aya-Expanse model, and the efficiency of function calling. Others asked about using the Cohere API for document-based responses and obtaining an org ID, and reported disruptions on Cohere endpoints, along with feedback on system performance, latency, and API errors. Members also explored collaborative development of Discord bots using the Cohere API, with features such as conversation branches and quick setup via Docker; the vnc-lm Discord bot drew positive feedback and user excitement.

Discussions in Open Interpreter covered hardware requirements for running local AI, testing new updates, and configuration questions. Other threads touched on document parsing, advanced chunking strategies, and PowerPoint generation with user feedback. LlamaIndex discussions covered a Sentence Transformers ingestion pipeline, Docker resource settings, and Text-to-SQL applications. DSPy conversations centered on M3DocRAG's success in multimodal question answering, open-domain benchmarks with M3DocVQA, and DSPy's RAG capabilities with vision integration.
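
Many chunking strategies start from a fixed-size sliding window with overlap, so that text cut at a boundary still appears whole in a neighboring chunk. A minimal sketch of that baseline, counting whitespace-separated words for simplicity (real pipelines usually count tokens). It is not LlamaIndex's API, which ships its own node parsers such as `SentenceSplitter`.

```python
from typing import List


def chunk_words(text: str, chunk_size: int = 200, overlap: int = 40) -> List[str]:
    """Split text into windows of `chunk_size` words, where consecutive
    windows share `overlap` words."""
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be in [0, chunk_size)")
    words = text.split()
    step = chunk_size - overlap
    chunks: List[str] = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this window already reached the end of the text
    return chunks


if __name__ == "__main__":
    text = " ".join(f"w{i}" for i in range(10))
    for chunk in chunk_words(text, chunk_size=4, overlap=1):
        print(chunk)
```

More advanced strategies typically replace the fixed word count with sentence, section, or semantic boundaries while keeping the same overlap idea.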

LLM Agents (Berkeley MOOC) Hackathon Announcements

Teams in the Berkeley MOOC hackathon received updates on the Midterm Check-in for project feedback and on the application process for additional compute resources. Judging criteria were outlined, with an emphasis on justifying resource needs. Teams were also reminded of the upcoming Lambda Workshop on Nov 12th, which aims to provide insights on team projects and the hackathon process. Links to the Mid Season Check In Form and the resource request form were shared for team submissions and resource requests.

FAQ

Q: What is the FrontierMath benchmark and its significance?

A: The FrontierMath benchmark highlights the challenges faced by current AI models in complex problem-solving, showcasing Moravec's paradox in AI evaluations.

Q: What is the Mixture-of-Transformers (MoT) architecture and its benefits?

A: The Mixture-of-Transformers (MoT) architecture is a sparse multi-modal transformer design that reduces computational costs while maintaining performance across various tasks.

Q: How can Incorrect Reasoning + Explanations enhance Chain-of-Thought prompting?

A: Incorrect Reasoning + Explanations can enhance Chain-of-Thought (CoT) prompting by improving LLM reasoning across models.

Q: What recent acquisition did OpenAI make, and what is its significance?

A: OpenAI acquired the chat.com domain, now redirecting to ChatGPT, signaling a strategic move in the AI industry.

Q: What is Microsoft's Magentic-One framework, and how does it impact the AI industry?

A: Microsoft's Magentic-One is an agent framework that coordinates multiple agents for real-world tasks, marking the beginning of the AI agent era.

Q: What advancements were made by Anthropic with the release of Claude 3.5 Haiku?

A: Anthropic's release of Claude 3.5 Haiku showcased outperformance against GPT-4o on certain benchmarks, despite its higher pricing.

Q: What significant approval did xAI receive, and how did Trump's support play a role?

A: xAI received approval for 150MW of grid power from the Tennessee Valley Authority, with Trump's support aiding Elon Musk in expediting power acquisition.

Q: What are the key features of LangChain's AI tools, Financial Metrics API, Document GPT, and LangPost?

A: LangChain's AI tools include the Financial Metrics API for real-time retrieval of financial metrics, Document GPT with PDF upload and Q&A System, and LangPost, an AI agent generating LinkedIn posts.
