[AINews] Gemini Live • Buttondown

AI Twitter and Reddit Recaps

The AI Twitter Recap highlights recent developments in AI models, tools, applications, engineering insights, ethics, community, events, and humor. It covers topics such as new software engineering systems, open-access models, and AI-based Postgres services. The AI Reddit Recap focuses on advanced quantization and model optimization techniques discussed in subreddits like /r/LocalLLaMA, including achievements in model quantization, efficient handling of long text, and pre-training of language models. It also covers open-source contributions to LLM development, featuring an extensive collection of RAG implementations and TII's release of the Falcon Mamba 7B model. User feedback on that model's performance was mixed, with some skepticism.

Model Performance and Benchmarking

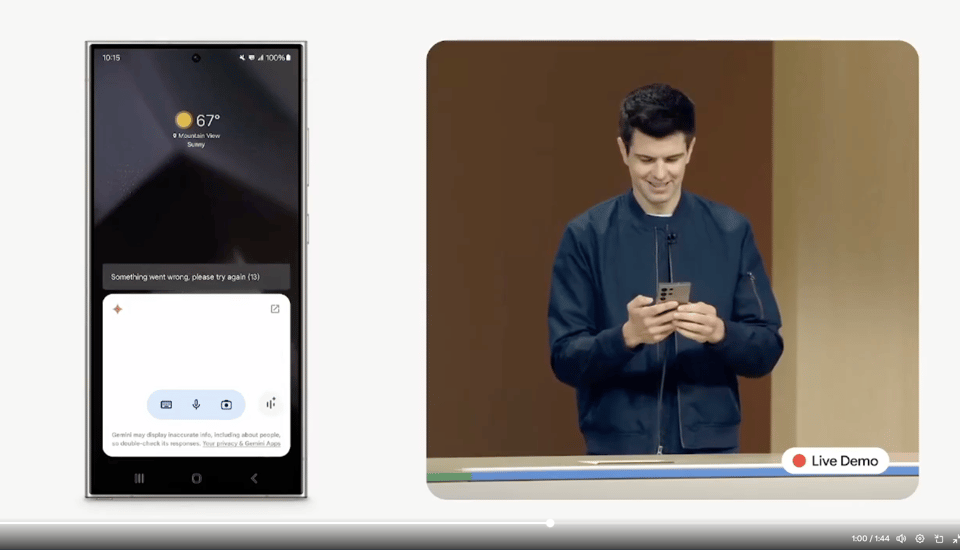

An uncensored model, tuned to retain the intelligence of the original Meta Instruct model, has been released and outperforms the original on the LLM Leaderboard 2. Its performance sparked discussion about the trade-off between censorship and utility, with many users praising its ability to handle a wider range of inputs.

One Discord member rated Mistral Large 2 as the best LLM currently available, outcompeting Claude 3.5 Sonnet on difficult novel problems. On price, the same member noted that Gemini Flash severely undercuts OpenAI's GPT-4o mini, while GPT-4o is less expensive than Mistral Large.

Google's Gemini Live is now available to Gemini Advanced subscribers, offering a conversational overlay on Android and more connected apps. Many users find it an improvement over the old voice mode, but note that it lacks live video functionality.

Discord Chats: AI Related Conversations

This section covers discussions from several AI-related Discord channels. Topics include fine-tuning TransformerDecoderLayer in Torchtune, the relevance of Java by Microsoft, Cohere For AI Research Lab expansions, issues with JSONL uploads on Cohere, updates on models such as Flux.1 and Grok 2.0, challenges in model fine-tuning, and development of a virtual try-on feature. The conversations range from technical queries to user experiences and project collaborations.

Training Ahma-3B Instruct

The training process for Ahma-3B Instruct involved translating and synthesizing single- and multi-turn data, using ClusterClipping-based sampling and selection. This was followed by Supervised Fine-Tuning (SFT) with QLoRA using the Unsloth framework, and then a fine-tuning step with DPO (Direct Preference Optimization) using a beta of 0.1.
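To make the role of beta in the DPO step concrete, here is a minimal sketch of the standard DPO loss for a single preference pair. It is not the Ahma training code: the function name `dpo_loss` and the example log-probabilities are assumptions for illustration, and only the value beta = 0.1 comes from the summary above.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """Standard DPO loss for one (chosen, rejected) pair.

    Inputs are summed sequence log-probabilities under the policy being
    trained and under the frozen reference model (hypothetical values here).
    """
    # How much more the policy likes each response than the reference does.
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    # beta scales the margin; a small beta (e.g. 0.1) tolerates larger
    # drift from the reference model before the loss saturates.
    margin = beta * (chosen_logratio - rejected_logratio)
    # loss = -log(sigmoid(margin)), computed in a numerically stable way.
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))

# Policy identical to the reference: loss equals log(2).
neutral = dpo_loss(-10.0, -10.0, -10.0, -10.0)
# Policy prefers the chosen response more than the reference does: lower loss.
improved = dpo_loss(-8.0, -12.0, -10.0, -10.0)
```

In practice a training library would compute this over batches of token-level log-probabilities; the scalar version only shows how beta enters the objective.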

LM Studio General

Vision Adapters: The Key to Vision Models

Only specific LLMs have vision adapters; most of them go by the name 'LLaVA' or 'obsidian'.

- The vision adapter is a crucial component for vision models; without it, loading the model fails with the error the user reported.

Mistral Large: The Current Champion?

A member found Mistral Large 2 to be the best LLM right now, outcompeting Claude 3.5 Sonnet for difficult novel problems.

- The member also noted that Gemini Flash severely undercuts OpenAI's GPT-4o mini on price, while GPT-4o is less expensive than Mistral Large.

LM Studio's Model Explorer is Down

Several members reported that HuggingFace, which powers the LM Studio Model Explorer, is down.

- The site was confirmed to be inaccessible for several hours, with connectivity issues reported across various locations.

Llama 3.1 Performance Issues

A user reported that their Llama 3 8B model is now running at only 3 tok/s, compared to 15 tok/s before a recent update.

- The user checked their GPU offload settings and reset them to default, but the problem persists; the issue appears to be related to a change in the recent update.

LLM Output Length Control

A member is looking for ways to restrict the output length of responses, as some models tend to output whole paragraphs even when instructed to provide a single sentence.

- While system prompts can be modified, the member found that 8B models, specifically Meta-Llama-3.1-8B-Instruct-GGUF, are not the best at following precise instructions.
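When a small model ignores a "one sentence" instruction, a common workaround is to enforce the limit outside the prompt. The sketch below assumes a backend that accepts OpenAI-style chat completion parameters (`max_tokens`, `stop`); the model name `local-model` and both helper functions are hypothetical, and the sentence splitter is a simple heuristic rather than a full tokenizer.

```python
import re

def build_request(prompt, max_tokens=40):
    """Build a chat-completions style payload with hard output limits."""
    return {
        "model": "local-model",  # hypothetical placeholder name
        "messages": [
            {"role": "system", "content": "Answer in exactly one sentence."},
            {"role": "user", "content": prompt},
        ],
        "max_tokens": max_tokens,  # hard cap on generated tokens
        "stop": ["\n\n"],          # stop at the first paragraph break
    }

def first_sentence(text):
    """Trim a response to its first sentence as a post-processing fallback."""
    text = text.strip()
    # First '.', '!' or '?' followed by whitespace or end of text.
    match = re.search(r"[.!?](?=\s|$)", text)
    return text if match is None else text[: match.end()]

payload = build_request("What is the capital of France?")
trimmed = first_sentence("Paris is the capital. It is a large city.\n\nMore text.")
```

The token cap alone can cut a response mid-sentence, which is why the post-processing step is paired with it. The heuristic will also split on abbreviations such as "e.g.", so it suits short factual answers better than free-form prose.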

OpenAccess AI Collective (axolotl) General Help

One user was surprised that OpenAI released a benchmark instead of a new model, speculating that this might be a strategic move to steer the field towards better evaluation tools. Another user asked how gradient clipping works, specifically what happens to gradients when they exceed the maximum value. A third user noted that Perplexity Pro has gotten very good at reasoning and can even count letters without the tokenizer, and mentioned a related GitHub repository. There was also a discussion about creating a 'MoE' version of Llama 3.
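On the gradient clipping question, the summary does not record which variant was being discussed, so the sketch below shows the two common ones under that assumption. With norm clipping, gradients whose overall norm exceeds the maximum are rescaled so the direction is preserved; with value clipping, each component is clamped independently. The function names are illustrative, not a specific library's API.

```python
import math

def clip_by_global_norm(grads, max_norm, eps=1e-6):
    """Rescale all gradients together if their L2 norm exceeds max_norm."""
    total_norm = math.sqrt(sum(g * g for g in grads))
    # Scale is 1.0 when the norm is already within the limit.
    scale = min(1.0, max_norm / (total_norm + eps))
    return [g * scale for g in grads], total_norm

def clip_by_value(grads, clip_value):
    """Clamp each gradient component to [-clip_value, clip_value]."""
    return [max(-clip_value, min(clip_value, g)) for g in grads]

# Norm of [3, 4] is 5, so both components shrink by the same factor (~1/5).
norm_clipped, original_norm = clip_by_global_norm([3.0, 4.0], max_norm=1.0)
# Value clipping changes the direction: large components are flattened.
value_clipped = clip_by_value([3.0, -4.0, 0.5], clip_value=1.0)
```

In both cases nothing is discarded: the update still happens, only with a bounded magnitude.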

Extracting information from technical images for RAG

A user sought advice on extracting information from images like electrical diagrams, maps, and voltage curves for RAG on technical documents. They mentioned encountering difficulties with traditional methods, highlighting the need for capturing information not present in text form but visually interpretable by experts.

FAQ

Q: What are vision adapters in vision models?

A: Vision adapters are crucial components for vision models. Only specific models include one, most of them named 'LLaVA' or 'obsidian'; without the adapter, loading a vision model produces an error.

Q: What is the Mistral Large 2 model known for?

A: One member rated Mistral Large 2 as the best LLM currently available, outcompeting models like Claude 3.5 Sonnet on difficult novel problems.

Q: What issues were reported regarding LM Studio's Model Explorer?

A: Several members reported that HuggingFace, which powers the LM Studio Model Explorer, was down for several hours due to connectivity issues across various locations.

Q: What performance issue was reported for the Llama 3 8B model?

A: A user reported that their Llama 3 8B model experienced a drastic decrease in performance, running at only 3 tok/s compared to 15 tok/s before a recent update.

Q: How did users try to address the LLM output length control issue?

A: A member was looking for ways to restrict the output length of responses, as some models tend to output whole paragraphs even when instructed to provide a single sentence. Modifying the system prompt is one option, but the member found that 8B models such as Meta-Llama-3.1-8B-Instruct-GGUF are not the best at following precise instructions.

Q: What strategic move did users speculate OpenAI made by releasing a benchmark instead of a new model?

A: Users speculated that OpenAI released a benchmark instead of a new model as a strategic move to steer the field towards better evaluation tools.

Q: What was discussed regarding the functionality of gradient clipping?

A: A user asked how gradient clipping works, specifically what happens to gradients when they exceed the maximum value.

Q: What difficulties did a user encounter when trying to extract information from images for RAG on technical documents?

A: A user encountered difficulties with traditional methods when trying to extract information from images like electrical diagrams, maps, and voltage curves for RAG on technical documents, highlighting the challenge of capturing visually interpretable information in a non-text form.
