[AINews] Gemma 2 2B + Scope + Shield • Buttondown

Chapters

AI Twitter and Reddit Recap

Advanced AI Discourse and Innovations

LM Studio Discord

HuggingFace AI Developments

HuggingFace Discourse on Various Topics

Collaboration and Troubleshooting with AI Community

Large Models and GPU Programming

WebGPU Shaders and Real-time Applications

Autoencoders, Text Explanations, and Open Source Library

Interconnects: NLP Discussions

Cohere Discussions

Discussion Highlights in Different Community Channels

LangChain AI Update

Engagement Boost through Game Mechanics

AI Twitter and Reddit Recap

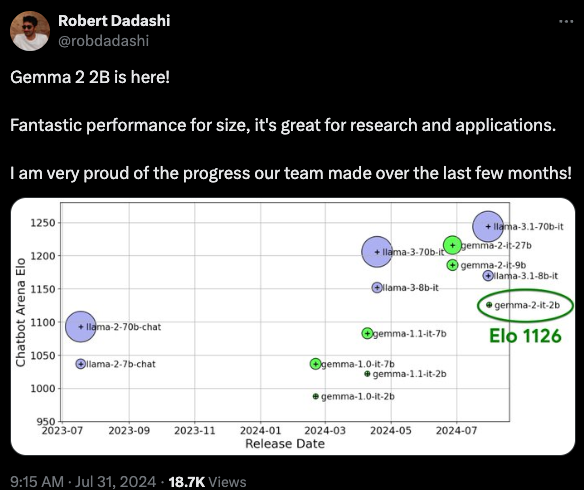

The AI Twitter Recap covers updates and releases across the AI community, including the performance of Meta's Llama 3.1 and the release of Meta's Segment Anything Model 2 (SAM 2). OpenAI is rolling out an advanced Voice Mode, and Perplexity AI launched a Publishers Program. In research and development, NVIDIA introduced Project GR00T for scaling up robot data, and interest in quantization techniques is rising. Discussions of LLM-as-a-Judge and its various implementations were highlighted. New tools and platforms include ComfyAGI, Prompt Tuner, and MLflow for LlamaIndex. In industry and career news, the UK government is hiring a Senior Prompt Engineer, and Tim Dettmers shared career updates, including joining Allen AI and becoming a professor at Carnegie Mellon.

Advanced AI Discourse and Innovations

In this section, AI experts and enthusiasts engage in discussions on various advancements and innovations in the field. Topics include the development of new language models, breakthroughs in ternary model inference speed, and strategies for optimizing AI performance. Additionally, collaborative efforts between companies like Hugging Face and Nvidia are highlighted, along with the introduction of new tools such as MindSearch for enhancing search engines. The section also covers discussions on fine-tuning challenges, prompt engineering strategies, multimodal AI developments, and generative modeling innovations.

LM Studio Discord

Vulkan Support Goes Live

- A new update scheduled for tomorrow will introduce Vulkan support in LM Studio, enhancing GPU performance after the deprecation of OpenCL. Discussions are ongoing regarding compatibility with AMD drivers.

- Users are hopeful that this support will resolve multiple bug reports and frustrations related to Intel graphics compatibility in previous releases.

Gemma 2B Model Heads for Beta

- The upcoming 0.2.31 beta version promises key improvements for users, including support for the Gemma 2B model and options for kv cache quantization. Users can join the Beta Builds role on Discord for notifications about new versions.

- However, users have highlighted challenges with loading models like Gemma 2B, often requiring updates in the underlying llama.cpp code for optimal usage.
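To make the appeal of KV cache quantization concrete, here is a rough sketch of how cache size scales with the bytes stored per value. The model shape below is hypothetical and chosen only for illustration, and the 1.0 and 0.5 byte figures are idealized: real quantized formats carry extra per-block metadata, so actual savings will be somewhat smaller. This is not LM Studio's or llama.cpp's accounting.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, context_len, bytes_per_value):
    """Approximate KV cache size: keys and values for every layer and position."""
    # The factor of 2 covers the separate key and value tensors.
    return 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value


# Hypothetical model shape, for illustration only.
shape = dict(n_layers=26, n_kv_heads=4, head_dim=256, context_len=8192)

fp16 = kv_cache_bytes(**shape, bytes_per_value=2.0)  # 16-bit cache
q8 = kv_cache_bytes(**shape, bytes_per_value=1.0)    # idealized 8-bit cache
q4 = kv_cache_bytes(**shape, bytes_per_value=0.5)    # idealized 4-bit cache

for name, size in [("fp16", fp16), ("8-bit", q8), ("4-bit", q4)]:
    print(f"{name}: {size / 1024**2:.0f} MiB")
```

Because the cache grows linearly with context length, halving the bytes per value roughly doubles the context that fits in the same memory.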

AI-Powered D&D Conversations

- A user is exploring creating a D&D stream with multiple AIs as players, focusing on structured interactions. Concerns have been raised about conversation dynamics and speech-to-text complexities during brainstorming.

- This dialogue around AI interactions suggests an innovative approach to enhancing engagement during gameplay, showcasing the flexibility of AI applications.

Maximizing GPU Resources

- Users have confirmed that leveraging GPU offloading significantly aids in efficiently operating larger models, especially in high-context tasks. This approach enhances performance compared to solely relying on CPU resources.

- However, discrepancies in RAM usage across different GPUs, such as the 3080 Ti versus the 7900 XTX, highlight the importance of careful hardware configuration for AI workloads.
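As a back-of-the-envelope illustration of partial GPU offloading, the sketch below estimates how many model layers fit in VRAM once some memory is set aside for the cache and buffers. The function name and every number here are assumptions made for the example; real per-layer sizes depend on the model and its quantization, and this is not how LM Studio itself decides.

```python
def layers_that_fit(vram_bytes, reserved_bytes, bytes_per_layer, total_layers):
    """Return how many layers fit in VRAM after reserving room for cache and buffers."""
    usable = max(0, vram_bytes - reserved_bytes)
    # Never report more layers than the model actually has.
    return min(total_layers, usable // bytes_per_layer)


GIB = 1024 ** 3

# Hypothetical figures: a 12 GiB card, 2 GiB reserved, 0.5 GiB per layer, 32 layers.
offloaded = layers_that_fit(
    vram_bytes=12 * GIB,
    reserved_bytes=2 * GIB,
    bytes_per_layer=GIB // 2,
    total_layers=32,
)
print(f"Layers offloaded to GPU: {offloaded} of 32")
```

Layers that do not fit stay on the CPU, which is why larger VRAM budgets tend to translate directly into faster generation.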

Installation Conflicts and Workarounds

- New users have expressed frustration with installation issues, particularly when downloading models from Hugging Face, citing access agreements as a barrier. Workarounds proposed include alternative models that bypass agreement clicks.

- Moreover, the lack of support for drag-and-drop CSV uploads into LM Studio underlines the current limitations in functionalities for seamless document feeding.

HuggingFace AI Developments

- Llama 3.1 Multilingual Might: Released in 405B, 70B, and 8B parameter sizes, beating competitors like GPT-4o.

- Argilla 2.0 Sneak Peek: Introduces an easy dataset duplication feature for managing multiple datasets.

- PEFT v0.12.0 Launch: Includes new efficient fine-tuning methods like OLoRA and X-LoRA.

- Hugging Face and Nvidia Collaboration: Offers inference-as-a-service for rapid prototyping with AI models.

- VLM Finetuning Task Alert: Allows easy finetuning of PaliGemma model on custom datasets.

- Links mentioned: Multiple tweets discussing various updates and features of HuggingFace.

HuggingFace Discourse on Various Topics

The HuggingFace community discussed a range of topics, with Knowledge Distillation, Community Interactions, AI Training Techniques, Fine-tuning Models, and Dialectal Language Processing among the focal points. Users shared insights on hyperparameters, learning rates, dialectal support in Arabic language models, and training models with RAG. The section also highlighted Gemma 2 model updates, progress on multi-GPU support, using LoRA for fine-tuning, issues with 4-bit merging, and installation challenges with Unsloth AI. In the NLP category, the community explored the limitations of Seq2Seq tasks, referenceless metrics, and whether fine-tuning models is necessary. Lastly, the MegaBeam-Mistral-7B-512k model, which supports 524,288 tokens and was trained on Mistral-7B-Instruct-v0.2, was revealed, generating excitement in the community.

Collaboration and Troubleshooting with AI Community

This section covers discussions within the AI community on a range of topics. From quantization methods to installation issues with Unsloth, members discussed challenges and shared insights on improving model performance. The section also highlights a member's initiative to integrate Unsloth Inference with HuggingFace and the continued push for better translation models. Discussions of research on HDMI eavesdropping, continual pre-training, Sailor language models, learning rate trade-offs, and model optimization tips reflect a deeper understanding of model dynamics and strategies for improving performance.

Large Models and GPU Programming

This section covers the importance of large models in delivering superior performance across domains and the potential benefits of PyTorch FSDP for scaling with fully sharded data parallelism. CUDA MODE discussions focused on the Triton programming model and GPU programming, including code reflection for Triton in Java and the advantages of the Triton model for writing GPU code. Other threads touched on CUDA memory alignment concerns, issues running torch.compile on a T4, cautions around non-blocking transfers, and the effects of pinned memory in LLM inference projects. Members shared that Zoox is expanding its ML platform team and optimization initiatives, and discussed the processing block configuration of the Ampere A100 and the advantages of warp splitting. The section also highlights the discovery of HQQ+ techniques by Apple and the availability of a high-performance Llama 3.1-8B instruct model. Further topics include SwiGLU outperforming GELU in speed, challenges with FP8 integration, integrating RoPE in training dynamics, updates on Llama 3 models, and hyperparameter tuning. Lastly, the section reports speed boosts for ternary models, a performance breakthrough in the ternary-int8 dot product, and CPU performance surpassing fancy bitwise ops in CUDA.
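For readers unfamiliar with the ternary-int8 dot product mentioned above, the following is a minimal reference sketch of the operation itself, not the optimized kernel discussed in the channel. It assumes weights restricted to -1, 0, and +1 and integer activations in the int8 range; the function name is made up for this example. The point is that ternary weights remove the multiplications, leaving only additions and subtractions.

```python
def ternary_int8_dot(weights, activations):
    """Dot product where weights are in {-1, 0, +1} and activations are int8 values."""
    if len(weights) != len(activations):
        raise ValueError("weights and activations must have the same length")
    acc = 0
    for w, a in zip(weights, activations):
        if w == 1:
            acc += a      # +1 weight: add the activation
        elif w == -1:
            acc -= a      # -1 weight: subtract the activation
        elif w != 0:
            raise ValueError("weights must be -1, 0, or +1")
        # A 0 weight contributes nothing, so it is skipped.
    return acc


w = [1, 0, -1, 1]
x = [12, -7, 5, -128]
result = ternary_int8_dot(w, x)
print(result)
```

Fast implementations typically pack several ternary weights into each byte and process many elements per instruction, but they compute the same value as this loop.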

WebGPU Shaders and Real-time Applications

The section discusses the use of shaders with WebGPU to provide a more convenient interface for leveraging WebGPU functions. It also covers interest in using WebGPU for real-time multimodal applications, including simulations and conditional computational branching. There is also keen interest in hybrid model computation, combining CPU SIMD and GPU resources. Additionally, the section explores local device computing with C++, emphasizing the convenience of a portable GPU API in C++. This approach is seen as accessible for experimenting with new computational methods.

Autoencoders, Text Explanations, and Open Source Library

Autoencoders

- Sparse Autoencoders tackle scaling issues: Automated pipelines help Sparse Autoencoders recover interpretable features, allowing for easier evaluations on GPT-2 and Llama-3 8b features. Key findings indicate that open source models provide reasonable evaluations.

- Innovative methods for text explanation evaluation: Proposed methods for measuring the recall of explanations include building counterexamples and testing model-generated activations. Smaller models achieve reliable scores with fewer tokens.

- Release of open source library for feature research: A new library enables research on auto-interpreted features derived from Sparse Autoencoders. The code is available on GitHub.

- Cost-effective interpretation of model features: Interpreting 1.5M features of GPT-2 is predicted to cost only $1300 for Llama 3.1, a significant reduction from prior methods. This efficiency marks a breakthrough in model feature analysis.

- Demo and dashboard enhancements: A small dashboard and demo have been created to showcase the features of the new library for auto-interpreted features. The demo can be accessed here and is best viewed on larger screens.
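The summary above mentions measuring the recall of explanations without giving details. As a minimal sketch using the standard definition of recall, and not the library's actual scoring code, the example below checks what fraction of the examples where a feature truly fires are also flagged by its explanation. The function name and sample data are hypothetical.

```python
def explanation_recall(true_active, predicted_active):
    """Fraction of truly activating examples that the explanation also flags."""
    hits = sum(1 for t, p in zip(true_active, predicted_active) if t and p)
    positives = sum(1 for t in true_active if t)
    # With no activating examples, recall is undefined; return 0.0 by convention here.
    return hits / positives if positives else 0.0


# Hypothetical data: whether the feature fired on each example,
# and whether the explanation predicted that it would.
true_active = [True, True, False, True, False]
predicted = [True, False, False, True, True]

recall = explanation_recall(true_active, predicted)
print(f"recall = {recall:.2f}")
```

Recall alone ignores false positives, such as the last example here, which is why it is usually read alongside a precision-style measure.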

Interconnects: NLP Discussions

Subbarao Kambhampati critiques LLM reasoning:

In a recent YouTube episode, Prof. Kambhampati argues that while LLMs excel at many tasks, they possess significant limitations in logical reasoning and planning. His ICML tutorial delves further into the role of LLMs in planning, supported by various papers on self-correction issues (Large Language Models Cannot Self-Correct Reasoning Yet).

Challenges in intrinsic self-correction benchmarks:

There are concerns about the feasibility of benchmarks where an LLM has to correct its own reasoning trajectory due to an initial error, possibly making it an unrealistic setup. The discussion highlights the difficulty in comparing model performances when the benchmark involves models intentionally generating faulty trajectories for self-correction.

LLMs struggle to self-correct effectively:

Research indicates that LLMs often fail to self-correct effectively without external feedback, raising questions about the viability of intrinsic self-correction. A summary of the study on self-verification limitations reveals that models experience accuracy degradation during self-verification tasks as opposed to using external verifiers.

Feedback loops in LLM reasoning:

A member noted a peculiar aspect of LLM reasoning where an initial mistake could be corrected later due to context changes in the generated output, hinting at potential stochastic influences. This implies that while LLMs might demonstrate some capacity

Cohere Discussions

Interconnects (Nathan Lambert) ▷ #posts (7 messages):

- Visibility of Text in Screenshots

- Compression Issues

- Message Clarity

- Screenshots hard to read on mobile: Members noted difficulties reading texts in screenshots on mobile or email.

- Blurry image issue acknowledged: A member admitted to a blurry screenshot.

- Attention to detail appreciated: Members acknowledged focus on readability, potentially increasing workload.

Cohere ▷ #discussions (41 messages🔥):

- Google Colab for Cohere Tools: Member creating a Google Colab for Cohere API tools.

- Agentic Build Day Attendance: Virtual competition planned for IRL-only Agentic Build Day.

- Rerank API Enhancements: Suggestions to use Rerank API for better semantic matching.

- OpenAI Contributions Tracking: Clarification on Cohere contributions compared to Hugging Face.

- Community Builders Recognition: Enthusiasm for community contributions and upcoming demo.

Cohere ▷ #announcements (1 messages):

- Join Us for Agent Build Day in SF: Overview of hands-on workshops at Agent Build Day with Cohere experts.

- Learn from Cohere Experts: Workshop focus on agentic workflow and human oversight integration.

- Hands-On Experience with Mentorship: Guided hands-on experience with mentors for building agents.

- Advanced RAG Capabilities with Cohere: Overview of Cohere models offering advanced RAG capabilities.

Cohere ▷ #questions (14 messages🔥):

- Rerank API returns 403 Error: Discussion on issues with Rerank API returning errors.

- Inquiry about Internship Status: Inquiry about Cohere internship status and prospects.

- Training for Arabic Dialect Generation: User seeking advice on training models for Arabic dialect generation.

Cohere ▷ #cohere-toolkit (2 messages):

- Community Toolkit isn't activating: Member experiencing issues with community toolkit activation.

- Running development setup with make: Discussion on using make dev for environment initialization.

Discussion Highlights in Different Community Channels

These sections covered a variety of topics across community channels. Highlights include curiosity about Attention Layer Quantization and Axolotl's early stopping capabilities, along with inquiries about manually terminating training runs and a discussion of the 'Gema2b' topic. LlamaIndex contributions and updates were also mentioned, such as MLflow integration, the launch of the Jamba-Instruct model by AI21 Labs, and open-source contributions. The Torchtune section covered issues with LLAMA_3 model outputs and discussions on generation parameters. Lastly, LangChain AI discussions covered Google Gemini integration, streaming tokens from an agent, a Pydantic error, and challenges with LangChain tools.

LangChain AI Update

LangChain AI ▷ #share-your-work (2 messages):

- Build Your Own SWE Agent with new guide: A member created a guide for building SWE Agents using frameworks like CrewAI, AutoGen, and LangChain. The guide emphasizes leveraging a Python framework for effortlessly scaffolding agents compatible with various agentic frameworks.

- Palmyra-Fin-70b makes history at CFA Level III: The newly released Palmyra-Fin-70b model is the first model to pass the CFA Level III exam with a 73% score and is designed for investment research and financial analysis.

- Palmyra-Med-70b excels in medical tasks: The Palmyra-Med-70b model achieved an impressive 86% on MMLU tests, serving applications in medical research with versions available for different tasks. These models are available under non-commercial open-model licenses on platforms like Hugging Face and NVIDIA NIM.

Engagement Boost through Game Mechanics

The trivia app's use of game mechanics has proven to enhance user engagement and retention, with engaging gameplay and an easy-to-understand interface being highlighted as significant features by users.

FAQ

Q: What is Vulkan support in LM Studio and how does it enhance GPU performance?

A: Vulkan support in LM Studio is an update that enhances GPU performance after the deprecation of OpenCL. Users are hopeful that this support will resolve multiple bug reports and frustrations related to Intel graphics compatibility.

Q: What are the key improvements in the upcoming 0.2.31 beta version of LM Studio?

A: The upcoming 0.2.31 beta version promises support for the Gemma 2B model and options for KV cache quantization. Users can join the Beta Builds role on Discord for notifications about new versions.

Q: What are the challenges highlighted by users when loading models like Gemma 2B?

A: Users have highlighted challenges when loading models like Gemma 2B, often requiring updates in the underlying llama.cpp code for optimal usage.

Q: What innovative approach to AI interactions was discussed concerning creating a D&D stream with multiple AIs as players?

A: A user is exploring creating a D&D stream with multiple AIs as players, focusing on structured interactions. Concerns have been raised about conversation dynamics and speech-to-text complexities during brainstorming.

Q: How does GPU offloading help when running larger models?

A: Users have confirmed that GPU offloading significantly helps run larger models efficiently, especially in high-context tasks, and improves performance compared to relying solely on CPU resources.

Q: What are some workarounds proposed for installation issues when downloading models from Hugging Face?

A: New users have expressed frustration with installation issues when downloading models from Hugging Face, citing access agreements as a barrier. Workarounds proposed include alternative models that bypass agreement clicks.
