[AINews] GPT4o August + 100% Structured Outputs for All (GPT4o August edition) • Buttondown

Chapters

AI Twitter Recap

AI Reddit Recap

OpenAI and Anthropic News

Discord AI Communities Highlights

Discord Interactions and Updates

Discord Community Updates

Optimizing AI Resource Usage and HuggingFace Announcements

ChunkAttention Paper and SARATHI Framework

OpenAI API Enhancements

OpenAI DevDay Hits the Road

LangChain AI ▷ #share-your-work

AI Alignment Debate and Image Generation Models

tinygrad (George Hotz) general

Torchtune General Discussion

AI Twitter Recap

AI Model Updates and Benchmarks

- Llama 3.1: Meta released Llama 3.1, which surpasses GPT-4 and Claude 3.5 Sonnet on several benchmarks, and expanded the Llama Impact Grant program.

- Gemini 1.5 Pro: Google DeepMind released Gemini 1.5 Pro, which outperformed other models on the LMSYS and Vision leaderboards.

- Yi-Large Turbo: Introduced as an upgrade to Yi-Large, priced at $0.19 per 1M tokens.
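
As a quick worked example of the Yi-Large Turbo pricing above, the sketch below converts a token count into dollars. It assumes the $0.19 per 1M tokens figure applies to all tokens alike (the recap does not give separate input and output rates), and `estimate_cost_usd` is a hypothetical helper name.

```python
# Hypothetical helper: flat-rate cost estimate for Yi-Large Turbo.
# Assumption: a single price covers both input and output tokens.
PRICE_PER_MILLION_USD = 0.19


def estimate_cost_usd(total_tokens: int, price_per_million: float = PRICE_PER_MILLION_USD) -> float:
    """Return the USD cost of processing `total_tokens` tokens at a per-1M-token rate."""
    if total_tokens < 0:
        raise ValueError("total_tokens must be non-negative")
    return total_tokens / 1_000_000 * price_per_million


# 10M tokens * ($0.19 / 1M tokens) = $1.90
print(f"${estimate_cost_usd(10_000_000):.2f}")  # prints "$1.90"
```

If a provider bills input and output tokens at different rates, the same arithmetic applies per token type and the two results are summed.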

AI Hardware and Infrastructure

- NVIDIA H100 GPUs: Members shared insights on H100 performance for AI workloads.

- Groq LPUs: Groq announced plans to deploy 108,000 LPUs by the end of Q1 2025.

AI Development and Tools

- RAG (Retrieval-Augmented Generation): Discussions on the importance of RAG for enhancing AI systems' capabilities.

- JamAI Base: A new platform for building Mixture-of-Agents (MoA) systems without coding.

- LangSmith: New filtering capabilities for traces.
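
To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-augment loop. It assumes bag-of-words cosine similarity as a stand-in for a real embedding model and vector database, and the function names (`retrieve`, `build_prompt`) are illustrative; the resulting prompt would then be sent to whichever LLM you use.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list:
    """Lowercase alphanumeric tokens; a stand-in for a real embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list, k: int = 2) -> list:
    """Retrieval step: return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    return sorted(docs, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)[:k]


def build_prompt(query: str, docs: list, k: int = 2) -> str:
    """Augmentation step: prepend retrieved context to the question for the LLM."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


docs = [
    "Groq plans to deploy 108,000 LPUs by the end of Q1 2025.",
    "Gemma 2 2B is designed for on-device use.",
    "LangSmith added new filtering options for traces.",
]
print(build_prompt("How many LPUs will Groq deploy?", docs, k=1))
```

Swapping `tokenize`/`cosine` for learned embeddings and a vector index changes retrieval quality, not the shape of the loop.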

AI Research and Techniques

- PEER (Parameter Efficient Expert Retrieval): A new architecture from Google DeepMind using small "experts" for transformer models.

- POA (Pre-training Once for All): A tri-branch self-supervised training framework for pre-training multiple models simultaneously.

- Similarity-based Example Selection: Research showing improvements in low-resource machine translation using in-context example selection.
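
Similarity-based example selection can be sketched as follows: score every (source, target) pair in a pool by how similar its source side is to the sentence being translated, then place the top-k pairs in the few-shot prompt. This sketch assumes character-trigram Jaccard similarity (which needs no tokenizer, convenient for low-resource languages); the research summarized above may use a different similarity measure, and the pool entries are placeholders.

```python
def char_ngrams(text: str, n: int = 3) -> set:
    """Character n-grams of the padded, lowercased text (no tokenizer needed)."""
    padded = f" {text.lower()} "
    return {padded[i:i + n] for i in range(max(len(padded) - n + 1, 1))}


def jaccard(a: set, b: set) -> float:
    """Overlap between two n-gram sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def select_examples(source: str, pool: list, k: int = 2) -> list:
    """Return the k (source, target) pairs whose source side is most similar to `source`."""
    query = char_ngrams(source)
    return sorted(pool, key=lambda pair: jaccard(query, char_ngrams(pair[0])), reverse=True)[:k]


def build_mt_prompt(source: str, pool: list, k: int = 2) -> str:
    """Few-shot translation prompt built from the selected examples."""
    parts = ["Translate the source sentence into the target language."]
    for src, tgt in select_examples(source, pool, k):
        parts.append(f"Source: {src}\nTarget: {tgt}")
    parts.append(f"Source: {source}\nTarget:")
    return "\n\n".join(parts)


# Placeholder pool: real use would hold human translations in the low-resource language.
pool = [
    ("Where is the market?", "<target translation 1>"),
    ("The cat sleeps.", "<target translation 2>"),
    ("The cat eats fish.", "<target translation 3>"),
]
print(build_mt_prompt("The cat drinks milk.", pool))
```

Here the two "The cat ..." pairs are chosen over the unrelated market sentence, so the model sees demonstrations that share vocabulary and structure with the input.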

AI Ethics and Societal Impact

- Data Monopoly Concerns: Discussions on potential data monopolies arising from changes to internet services' policies.

- AI Safety: Debates on AI intelligence and safety measures, with Yann LeCun arguing against common AI risk narratives.

Practical AI Applications

- Code Generation: Discussions on code generation and its implications.

AI Reddit Recap

This section covers AI developments, model advancements, industry news, and applications discussed on Reddit. Topics include architectural innovations in AI models, open-source releases such as InternLM 2.5 20B, Magnum-32b v2, and Magnum-12b v2, and leadership shifts at major AI companies. Redditors also discussed uncensored models like Gemini 1.5 Pro Experimental 0801 and a game that uses Large Language Models (LLMs) for spell and world generation, along with controversies, model performance comparisons, and possible future scenarios for the AI industry.

OpenAI and Anthropic News

This section covers updates from OpenAI and the wider industry, including new AI models, leadership changes, GPU performance, and collaborations. Topics include the Gemma 2 2B model for on-device inference, Mistral MoEification for improving efficiency, issues with GPT-4o in conversations, and developments in AI ethics and data practices. It also highlights projects such as Llamafile, which makes LLMs accessible offline, and the launch of the Open Medical Reasoning Tasks project to bring the AI and medical communities together.

Discord AI Communities Highlights

Discord AI communities showcase a wide range of developments and discussions, from advances in model efficiency and challenges in fine-tuning to reflections on structured outputs and GPU optimizations. Topics range from ZLUDA patches for AMD GPUs to the unveiling of Gemma 2 2B, new initiatives in medical reasoning tasks, and debates on AI regulation. These communities serve as venues for knowledge exchange, problem-solving, and industry insight.

Discord Interactions and Updates

Nathan Lambert's Discord:

- John Schulman moved to Anthropic, sparking discussions on AI ethics and innovation.

- Speculation around Google's Gemini program raised questions about its progress and strategic direction.

- Flux Pro's unique user experience was compared to competitors, focusing on subjective user satisfaction.

- Members discussed how model performance depends on training data, including how data noise levels shape startups' strategies.

- A comparison between Claude and ChatGPT prompted conversations about next-generation AI tools.

Alex Atallah's Discord:

- The new GPT-4o snapshot (gpt-4o-2024-08-06) launched on OpenRouter with structured output support, including responses constrained to a JSON schema.

- Debates over AI model performance highlighted ongoing price and efficiency challenges.

- Members noted that the new GPT-4o is more budget-friendly, with reduced input and output token costs.

- OpenRouter API cost calculation discussions emphasized using the 'generation' endpoint for accurate expenditure tracking.

- Google Gemini 1.5 Pro users hit resource exhaustion errors caused by heavy rate limiting.
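
A common mitigation for rate-limit errors like the Gemini 1.5 Pro resource exhaustion reports above is retrying with exponential backoff and jitter. This is a generic sketch, not any SDK's built-in retry logic; `RateLimitError` is a hypothetical stand-in for whatever exception your client raises on an HTTP 429 / RESOURCE_EXHAUSTED response, and the delay parameters are assumptions to tune.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for a client's HTTP 429 / RESOURCE_EXHAUSTED error."""


def call_with_backoff(fn, max_retries=5, base_delay=1.0, max_delay=32.0, sleep=time.sleep):
    """Call fn(); on RateLimitError, wait with exponential backoff plus full jitter and retry."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_retries:
                raise  # retries exhausted: let the caller handle the error
            # The delay cap doubles each attempt (1s, 2s, 4s, ...) up to max_delay;
            # "full jitter" sleeps a random time below the cap to spread out retries.
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))


# Simulated endpoint that is rate-limited twice, then succeeds.
calls = {"n": 0}


def flaky_request():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RateLimitError("429 RESOURCE_EXHAUSTED")
    return "ok"


print(call_with_backoff(flaky_request, sleep=lambda seconds: None))  # prints "ok"
```

The `sleep` parameter is injectable so the retry logic can be exercised without real waiting; in production the default `time.sleep` is used.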

LlamaIndex Discord:

- LlamaIndex announced a RAG-a-thon with partners @pinecone and @arizeai.

- A webinar on RAG-augmented coding assistants focused on improving the quality of AI-generated code.

- Members shared how to use RabbitMQ for communication between agents, along with a comparison of vector databases.

- Members discussed a bug in LlamaIndex's function_calling.py and compared frequency constancy across CIFAR images.

- Insights on vector databases were shared for educational purposes.

Cohere Discord:

- Debates on whether models like Command R Plus should be classified as open source, and on licensing controversies involving Meta and Mistral models.

- Applying the Cohere Toolkit to AI development and exploring third-party API integrations such as ChatGPT and Gemini 1.5.

Modular (Mojo) Discord:

- Updates on InlineList and List optimization features in Mojo.

- Potential integration of custom accelerators with Mojo.

LAION Discord:

- Leadership changes at OpenAI, including John Schulman's departure, and legal issues facing Meta's JASCO project.

- Members celebrated validation accuracy milestones and asked about CIFAR images and performance metrics.

Tinygrad Discord:

- Discussions on running tinygrad on the Aurora supercomputer and on precision requirements for the FP8 NVIDIA bounty.

- Bug fixes, plus shared study notes on computer algebra that help explain tinygrad's operations.

DSPy Discord:

- Data mining efficiency with Wiseflow and HybridAGI's usability enhancements.

- LLM-based agents' potential for AGI, improved inference compute performance, and performance metrics comparisons.

OpenAccess AI Collective (axolotl) Discord:

- Synthetic data strategy proposals and discussions on MD5 hash consistency.

- Updates on the Bits and Bytes Foundation's library development and on the uv Python package installer.

Torchtune Discord:

- PPO integration and support for Qwen2 models in Torchtune.

- Troubleshooting Llama3 model paths and preference dataset enhancements.

OpenInterpreter Discord:

- Members highlighted local LLM setup issues and security concerns in Open Interpreter.

- Questions about supported Python versions, plus tips for setting up Ollama models.

Discord Community Updates

The Mozilla AI Discord highlighted updates on making LLMs accessible offline and offered a chance to win a gift card in exchange for feedback. The sqlite-vec release party showcased advances in vector data handling, the Machine Learning Paper Talks prompted discussion of new analytical perspectives, and a successful AMA with Local AI reaffirmed its commitment to open-source development. On the MLOps @Chipro Discord, LinkedIn Engineering shared how they transformed their ML platform with Flyte pipelines, with practical examples from LinkedIn's infrastructure.

Optimizing AI Resource Usage and HuggingFace Announcements

- Optimize AI Resource Usage: Community members discussed strategies for managing AI resources efficiently to minimize costs and maximize performance.

- Gemma 2 2B runs effortlessly on your device: Google released Gemma 2 2B, a 2.6B-parameter model for on-device use with platforms like WebLLM and WebGPU.

- FLUX takes the stage with Diffusers: FLUX, the new text-to-image model released by Black Forest Labs (@bfl_ml), is now integrated with Diffusers.

- Argilla and Magpie Ultra fly high: Magpie Ultra v0.1 debuts as the first open synthetic dataset built with Llama 3.1 405B, generated with a compute-intensive Distilabel pipeline.

- Whisper generations hit lightning speeds: Whisper generation now runs 150% faster with Medusa heads, without sacrificing accuracy.

- llm-sagemaker simplifies LLM deployment: llm-sagemaker, a new Terraform module, streamlines deploying LLMs like Llama 3 on AWS SageMaker.
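
The Medusa speed-up rests on speculative decoding: extra heads guess several future tokens, and the base model verifies them, keeping the longest agreeing prefix. The sketch below is a simplified greedy version with hypothetical `base_next` and `draft` callables standing in for the Whisper decoder and its Medusa heads; real Medusa verifies tree-structured candidates in a single batched forward pass rather than the sequential loop shown here.

```python
from typing import Callable, List

# Hypothetical interfaces standing in for the Whisper decoder and its Medusa heads.
BaseNext = Callable[[List[int]], int]          # greedy next token for a token prefix
Draft = Callable[[List[int], int], List[int]]  # k guessed future tokens for a prefix


def speculative_step(prefix: List[int], base_next: BaseNext, draft: Draft, k: int) -> List[int]:
    """One step: draft k tokens, keep the longest run the base model agrees with."""
    accepted: List[int] = []
    for guess in draft(prefix, k):
        # Real Medusa scores every candidate position in one batched forward pass;
        # calling base_next per position here is the sequential equivalent.
        expected = base_next(prefix + accepted)
        accepted.append(expected)  # the base model's token is always kept
        if guess != expected:
            return accepted  # first mismatch: stop after the corrected token
    # Every guess matched, so the base model's next prediction comes for free.
    accepted.append(base_next(prefix + accepted))
    return accepted


def generate(prompt: List[int], base_next: BaseNext, draft: Draft, k: int, max_new: int) -> List[int]:
    """Decode until max_new tokens are produced; each step yields 1 to k + 1 tokens."""
    out = list(prompt)
    while len(out) - len(prompt) < max_new:
        out += speculative_step(out, base_next, draft, k)
    return out[: len(prompt) + max_new]


# Toy "model": the next token is always the previous token + 1.
def toy_base(seq):
    return seq[-1] + 1


def toy_draft(seq, k):
    guesses = [seq[-1] + i for i in range(1, k + 1)]
    guesses[-1] = -1  # the last head guesses wrong
    return guesses


print(speculative_step([0], toy_base, toy_draft, k=3))  # prints [1, 2, 3]
```

Because every emitted token is the base model's own greedy choice, the output matches plain greedy decoding; the gain is that one step can emit several tokens instead of one.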

ChunkAttention Paper and SARATHI Framework

The ChunkAttention paper introduces a prefix-aware self-attention module that optimizes memory utilization in Large Language Models (LLMs). It splits key/value tensors into smaller chunks and organizes them in a prefix tree, so requests that share a prefix can share the same KV cache. The SARATHI framework, by contrast, improves LLM inference efficiency through chunked prefills and decode-maximal batching: decode requests piggyback on prefill chunks during inference, reducing costs and increasing GPU utilization.
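
The prefix-tree idea behind ChunkAttention can be sketched as a trie whose nodes are fixed-size token chunks: requests that share a prefix (for example, a common system prompt) walk the same nodes, so that chunk's key/value tensors would be stored once. This is a bookkeeping sketch under simplifying assumptions: it counts chunks instead of holding real K/V tensors, treats a trailing partial chunk like any other chunk, and does not implement the paper's attention kernel. `ChunkNode` and `PrefixTree` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class ChunkNode:
    """A chunk of up to `chunk_size` tokens; its K/V tensors would be stored once here."""
    tokens: Tuple[int, ...]
    children: Dict[Tuple[int, ...], "ChunkNode"] = field(default_factory=dict)
    refcount: int = 0  # number of sequences whose prefix passes through this chunk


class PrefixTree:
    def __init__(self, chunk_size: int):
        self.chunk_size = chunk_size
        self.root = ChunkNode(tokens=())
        self.num_chunks = 0  # distinct chunks stored (i.e., KV memory actually used)

    def insert(self, seq: List[int]) -> None:
        """Walk or extend the tree chunk by chunk; shared prefixes reuse existing nodes."""
        node = self.root
        for start in range(0, len(seq), self.chunk_size):
            key = tuple(seq[start:start + self.chunk_size])
            child = node.children.get(key)
            if child is None:  # first time this prefix + chunk is seen: allocate it
                child = ChunkNode(tokens=key)
                node.children[key] = child
                self.num_chunks += 1
            child.refcount += 1
            node = child


# Three requests share an 8-token system prompt, then diverge (4 tokens each).
system_prompt = list(range(8))
requests = [system_prompt + [100 + 10 * r + j for j in range(4)] for r in range(3)]

tree = PrefixTree(chunk_size=4)
for seq in requests:
    tree.insert(seq)

naive_chunks = sum(-(-len(seq) // 4) for seq in requests)  # ceil division, no sharing
print(tree.num_chunks, naive_chunks)  # prints "5 9"
```

With the shared system prompt stored once, the three requests need 5 chunks instead of 9; the savings grow with longer shared prefixes and more concurrent requests.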

OpenAI API Enhancements

Web Devs Transition to AI Engineering:

- Discussion on the transition from web developer to AI engineer due to the increasing demand for AI integration skills.

NVIDIA Faces Scrutiny for AI Data Practices:

- Reports described NVIDIA scraping data at massive scale for AI training, processing large amounts of video content daily despite ethical concerns.

John Schulman Leaves OpenAI for Anthropic:

- John Schulman's departure from OpenAI after nine years to focus on AI alignment research at Anthropic.

OpenAI's Global DevDay Tour Announced:

- OpenAI hosting DevDay events in multiple cities to showcase developer applications using OpenAI tools.

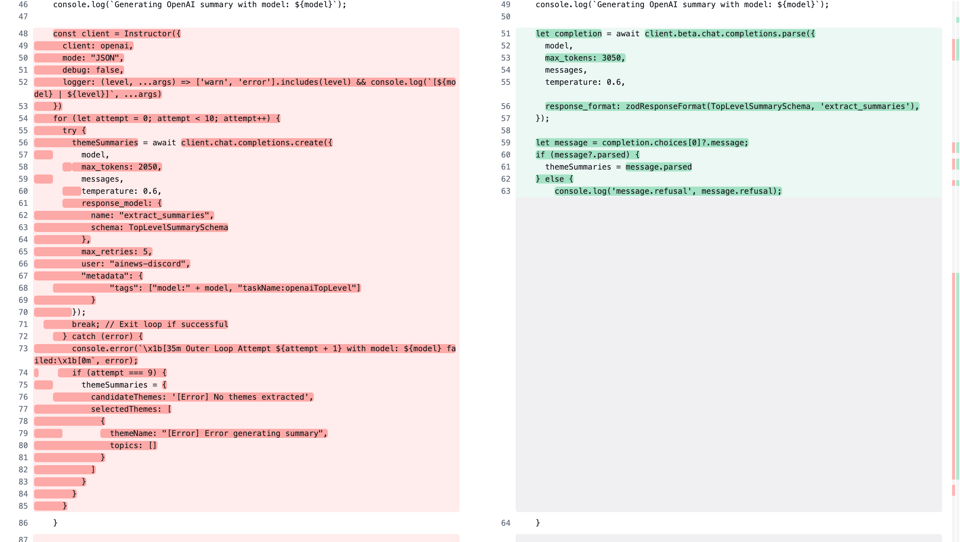

OpenAI's API Now Supports Structured Outputs:

- OpenAI's API now offers Structured Outputs, which ensure model outputs exactly match developer-supplied JSON Schemas, making schema adherence reliable.
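
A minimal sketch of a Structured Outputs request body for the Chat Completions API follows. The `response_format` shape (`type: "json_schema"` with a named schema and `strict: true`) and the strict-mode rules (every property listed in `required`, `additionalProperties: false`) follow OpenAI's Structured Outputs documentation at launch; the helper names, the event schema, and the tiny `conforms` checker are hypothetical illustrations, and nothing is sent over the network here.

```python
import json


def strict_object(properties: dict) -> dict:
    """Object schema meeting strict-mode rules: all properties required, no extras."""
    return {
        "type": "object",
        "properties": properties,
        "required": list(properties),
        "additionalProperties": False,
    }


def build_request(messages: list, name: str, schema: dict, model: str = "gpt-4o-2024-08-06") -> dict:
    """Chat Completions request body asking for output that matches `schema`."""
    return {
        "model": model,
        "messages": messages,
        "response_format": {
            "type": "json_schema",
            "json_schema": {"name": name, "strict": True, "schema": schema},
        },
    }


def conforms(value, schema: dict) -> bool:
    """Tiny checker for the schema subset used here (object, array, string)."""
    kind = schema["type"]
    if kind == "string":
        return isinstance(value, str)
    if kind == "array":
        return isinstance(value, list) and all(conforms(v, schema["items"]) for v in value)
    if kind == "object":
        return (
            isinstance(value, dict)
            and set(value) == set(schema["required"])  # strict: exactly the required keys
            and all(conforms(value[key], schema["properties"][key]) for key in value)
        )
    raise ValueError(f"unsupported schema type: {kind}")


# Hypothetical schema for extracting a calendar event from text.
event_schema = strict_object({
    "name": {"type": "string"},
    "date": {"type": "string"},
    "participants": {"type": "array", "items": {"type": "string"}},
})
body = build_request(
    [{"role": "user", "content": "Alice and Bob are going to a science fair on Friday."}],
    name="calendar_event",
    schema=event_schema,
)
print(json.dumps(body["response_format"], indent=2))

# The model's reply (message content) would be parsed with json.loads and can be checked:
reply = json.loads('{"name": "Science fair", "date": "Friday", "participants": ["Alice", "Bob"]}')
print(conforms(reply, event_schema))  # prints True
```

With the official `openai` Python SDK, the body could be sent as `OpenAI().chat.completions.create(**body)`. Under strict mode the API constrains generation to the schema, so a local check like `conforms` serves mainly as a sanity test.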

OpenAI DevDay Hits the Road

OpenAI announced that DevDay will travel to major cities such as San Francisco, London, and Singapore this fall for hands-on sessions and demos, inviting developers to engage with OpenAI engineers. The events give developers a chance to learn best practices and see how their peers worldwide are using OpenAI's technology.

Connect with OpenAI Engineers Globally:

- Developers are encouraged to meet OpenAI engineers at the upcoming DevDay events to learn how the latest AI advancements are being implemented around the world, and to collaborate and exchange ideas with other participants.

LangChain AI ▷ #share-your-work

AgentGenesis Boosts AI Development:

- AgentGenesis is an AI component library offering copy-paste code snippets to enhance Gen AI application development, promising a 10x boost in efficiency, and is available under an MIT license.

- The project includes a comprehensive code library with templates for RAG flows and QnA bots, backed by a community-driven GitHub repository.

Call for Contributors to AgentGenesis:

- AgentGenesis is seeking active contributors to help develop the open-source project, which emphasizes community involvement and collaboration.

- Interested developers are encouraged to star the GitHub repository and contribute to the library of reusable code.

AI Alignment Debate and Image Generation Models

Conversations highlighted differing approaches to AI alignment: John Schulman discussed practical issues such as prompt adherence, while Jan Leike focused on the broader implications of AI safety.

On image generation, DALL-E faced competition as members debated which tool now leads and compared their strengths. Flux Pro was noted for its distinctive experience, with members emphasizing subjective impressions over quantitative benchmarks, and the availability of Flux.1 on Replicate sparked discussion of the influence of hosting platforms.

Elsewhere, members analyzed how model performance depends on data, noting that startups tend to favor noisy-data strategies, and Meta's Chameleon came up in connection with ICML. The release of the new GPT-4o was highlighted alongside issues with structured outputs, with members pointing to improved token usage. Save rates, API costs, and Google Gemini model limitations were also debated. Finally, discussions of content licensing, model classification, and openness shed light on the nuances that separate open-source from 'open weights' models.

tinygrad (George Hotz) general

InlineList makes strides with new features:

The development of InlineList is progressing, and a recent merge highlighted the new features it still needs.

- Technical prioritization appears to set the timeline for adding __moveinit__ and __copyinit__ methods to InlineList.

Small buffer optimization adds flexibility to Mojo Lists:

Mojo introduces a small buffer optimization for List through a parameter, as in List[SomeType, 16], which allocates stack space.

- Gabriel De Marmiesse explains that this enhancement could remove the need for a separate InlineList type.
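
To illustrate what small buffer optimization means, here is a conceptual model in Python: the first few elements live in a fixed-size inline buffer, and storage moves to a growable heap buffer only on overflow. Python cannot allocate on the stack, so both buffers are ordinary lists; this models the idea only and is not Mojo's List implementation, whose API is not described here. `SmallBufferList` and `inline_capacity` are hypothetical names, loosely corresponding to the 16 in List[SomeType, 16].

```python
class SmallBufferList:
    """Conceptual model of small buffer optimization (SBO).

    The first `inline_capacity` elements live in a fixed-size inline buffer
    (stack storage in a systems language); once it fills, all elements move
    to a growable heap buffer.
    """

    def __init__(self, inline_capacity: int):
        self.inline_capacity = inline_capacity
        self._inline = [None] * inline_capacity  # fixed-size "stack" storage
        self._heap = None                        # allocated lazily on overflow
        self._size = 0

    @property
    def on_heap(self) -> bool:
        return self._heap is not None

    def append(self, item) -> None:
        if self._heap is None and self._size < self.inline_capacity:
            self._inline[self._size] = item  # still fits inline: no heap allocation
        else:
            if self._heap is None:
                # First overflow: copy the inline elements into heap storage.
                self._heap = self._inline[: self._size]
            self._heap.append(item)
        self._size += 1

    def __len__(self) -> int:
        return self._size

    def __getitem__(self, i: int):
        if not 0 <= i < self._size:
            raise IndexError(i)
        return self._heap[i] if self._heap is not None else self._inline[i]


xs = SmallBufferList(inline_capacity=2)
for item in ["a", "b"]:
    xs.append(item)
print(xs.on_heap)  # prints False (both elements fit inline)
xs.append("c")
print(xs.on_heap)  # prints True (the third element triggered the move to the heap)
```

The design trade-off is that small lists avoid heap allocation entirely, at the cost of reserving the inline capacity up front, which is why a single parameterized List could cover the use case of a separate InlineList type.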

Custom accelerators await Mojo's open-source future:

Custom accelerators, such as PCIe cards with systolic arrays and CXL.mem devices, are considered potential Mojo targets once Mojo is open-sourced, a point raised in discussion of hardware integration features.

- For now, using Mojo to replace custom kernels remains challenging; existing flows such as lowering PyTorch IR will stay predominant until Mojo supports features like RISC-V targets.

Torchtune General Discussion

The Torchtune channel covered topics including support for DPO with Llama3-8B, differences in model prompts for Llama 3, and ensuring correct Llama 3 file paths. Users discussed upcoming features such as a model page revamp and a PreferenceDataset refactor. Elsewhere, a user asked about Deepgram support in the OpenInterpreter channel, while Mozilla AI announced Llamafile updates, a community survey offering gift cards, Machine Learning Paper Talks, and a Local AI AMA.
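
For readers new to DPO, the per-pair objective from the original DPO formulation is L = -log σ(β[(log π_θ(y_w|x) - log π_ref(y_w|x)) - (log π_θ(y_l|x) - log π_ref(y_l|x))]), where y_w is the preferred response and y_l the rejected one. The scalar sketch below computes it from summed log-probabilities; it is not Torchtune's recipe code, and the default β = 0.1 is an assumed, commonly used value.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float, beta: float = 0.1) -> float:
    """DPO loss for one preference pair.

    Each argument is a response's summed token log-probability under either the
    policy being trained or the frozen reference model.
    """
    chosen_ratio = policy_chosen_logp - ref_chosen_logp        # log(pi / pi_ref) for y_w
    rejected_ratio = policy_rejected_logp - ref_rejected_logp  # log(pi / pi_ref) for y_l
    z = beta * (chosen_ratio - rejected_ratio)
    # -log(sigmoid(z)) = log(1 + exp(-z)), written to avoid overflow for large |z|.
    if z > 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


# Policy identical to the reference: z = 0, so the loss is log 2.
print(round(dpo_loss(-12.0, -15.0, -12.0, -15.0), 4))  # prints 0.6931
# Policy shifted toward the chosen response: the loss drops below log 2.
print(dpo_loss(-10.0, -17.0, -12.0, -15.0) < math.log(2))  # prints True
```

Minimizing this loss raises the policy's likelihood of the chosen response relative to the reference model, and lowers it for the rejected one, without training a separate reward model.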

FAQ

Q: What are some recent AI model updates and benchmarks discussed in this issue?

A: Recent updates include Llama 3.1 surpassing GPT-4, Gemini 1.5 Pro outperforming other models, and the introduction of Yi-Large Turbo.

Q: What are some developments in AI hardware and infrastructure mentioned?

A: Insights shared on NVIDIA H100 GPUs and plans to deploy 108,000 Groq LPUs by Q1 2025.

Q: Can you explain some new AI development tools and platforms discussed?

A: Discussions include RAG for enhancing AI systems, JamAI Base for building MoA systems without coding, and LangSmith's new filtering capabilities.

Q: What are some novel AI research techniques mentioned in this issue?

A: PEER architecture using small 'experts,' POA tri-branch self-supervised framework, and example selection for improving low-resource machine translation.

Q: What ethical and societal impact discussions are highlighted in this issue?

A: Discussions on data monopolies, AI safety measures, and debates on AI risk narratives by experts like Yann LeCun.

Q: What practical AI applications are explored in this issue?

A: Discussions on code generation and its implications are featured.
