[AINews] Grok 2! and ChatGPT-4o-latest confuses everybody • ButtondownTwitterTwitter

Chapters

AI Twitter and Reddit Recap

This section provides a recap of AI-related discussions from Twitter and Reddit. On Twitter, the highlights include updates on Gemini Live by Google DeepMind, limitations of LLMs, an AI Scientist system, tokenization issues, multi-agent systems, prompt engineering, RAG improvements, industry trends like the NoSQL debate, AI alignment concerns, upcoming open-source model releases, and discussions on AI research papers. The Reddit recap from /r/LocalLlama covers the release of InternLM2.5 models in 1.8B and 20B on HuggingFace, challenges with fine-tuning tools, issues with LMDeploy, and the support for llama.cpp. Additionally, discussions on creating advanced AI agents with desktop control for Llama are highlighted.

Gro 2.0 Mini Surprises in LMSYS Arena

Grok 2.0 Mini, identified as the 'sus-column-r' model, is performing competitively on the LMSYS Arena leaderboard against top AI systems. Users are excited about the AI arms race and potential advancements. Discussions also include Elon Musk confirming the model's identity, debates on model size, and potential open-sourcing. Meanwhile, the community reflects on the model's performance while expressing optimism and skepticism. There are discussions about potential unintended consequences of AI autonomy and access to games. The section provides insights into the competitive landscape of AI models and the implications on the industry.

Benchmarking Mojo's Multithreading Dilemma and Network Speed

Two key areas of discussion in this section were the performance benchmarks of Mojo compared to Go and Rust, as well as the multithreading capabilities of Mojo. Members raised questions about the possibility of conducting benchmarks against Go and Rust for Mojo. Additionally, concerns were voiced regarding Mojo's current single-threaded performance and the lack of a robust multi-threading API beyond parallel kernels with MAX. Questions were raised about Mojo's potential support for multiprocessing, network improvements, and handling heavy tasks efficiently. Discussions also touched upon Mojo's network speed capabilities compared to Go and its ability to manage tasks like Rust. Overall, the section highlighted a strong interest in evaluating Mojo's performance and capabilities in relation to other programming languages and platforms.

Visualizing Digraphs with Manim

The focus in this section is on using Manim to visualize digraphs, with a special emphasis on improving video production skills. The section also discusses the 'Tool Use Tuesdays' theme. Additionally, there are updates from various Discord channels, including a hackathon hosted by Poe, the best platform for fine-tuning LLMs by Modal Labs, the impact of an Image Feature Store on training time, and the capabilities of a Generic Feature Store for diverse models. It also mentions struggles faced by Mistral in expanding beyond 8k, Model Merging tactics, and discussions on automated Jupyter Notebook exploration and automation.

Nous Research AI ▷ #ask-about-llms

FP8 Faster Than BF16?

A member asked about the largest FP8 pretraining scale used, suggesting FP8 would be faster than BF16 due to using FP8 multiplications. Another member agreed to try FP8 training.

Nemotron Model Not Trained in FP8

Nemotron-4-340B-Base was trained using 768 DGX H100 nodes, but the model was not trained in FP8. It was confirmed that Nemotron supports mixed FP8 training, but it's unclear if it's actually used.

Databricks Mosaic AI Achieves FP8 Training Breakthrough

Databricks' Mosaic AI platform achieved a 1.4x-1.6x speedup with FP8 training compared to BF16, showcasing the potential of FP8 for training large language models. This was achieved with a 1000 training step test, and while FP8 is promising, it's still relatively new and there's more to learn about its applications.

Character.AI Utilizes Int8 Training

Character.AI employs int8 training, not FP8, for their large language models. The size of their model is not officially known, but an unofficial source suggests one of their earlier models is 108 billion parameters.

Mergekit Support for Gemma2 Models

A member inquired about official support for Gemma2 models in Mergekit. They reported experiencing difficulties when trying to use Mergekit with Gemma2 models, highlighting the need for clarification on its compatibility.

Latent Space AI - General Chat

Pliny Threatens to Leak MultiOn System Prompt:

Pliny, a prominent AI researcher, threatened to leak the full MultiOn system prompt on GitHub if DivGarg9 didn't provide an answer within 15 minutes. This follows an ongoing debate on Twitter regarding the capabilities of various AI models and their performance on specific benchmarks.

AnswerAI ColBERT: Small but Mighty:

AnswerAI has released a small but powerful version of their ColBERT model, called answerai-colbert-small-v1, that beats even bge-base on BEIR benchmark. This demonstrates the effectiveness of smaller models in achieving high performance in certain tasks, potentially offering a more cost-effective solution.

Gemini Live Demo Draws Criticism:

Swyxio criticized Google's Gemini Live Demo on YouTube, deeming it 'cringe'. This was followed by a discussion on the potential of Gemini, with some emphasizing its ability to enhance voice assistants while others remain skeptical.

GPT-4o Improvements Surpass Gemini:

OpenAI's latest GPT-4o model has been tested in the Chatbot Arena and has surpassed Google's Gemini-1.5-Pro-Exp in overall performance. The new GPT-4o model has demonstrated significant improvement in technical domains, particularly in Coding, Instruction-following, and Hard Prompts, solidifying its position as the top performer on the leaderboard.

Grok 2 Makes its Debut:

xAI has released an early preview of Grok-2, a significant advancement from its previous model, Grok-1.5, showcasing capabilities in chat, coding, and reasoning. Grok-2 has been tested on the LMSYS leaderboard and is outperforming both Claude 3.5 Sonnet and GPT-4-Turbo, although it is not yet available through the API.

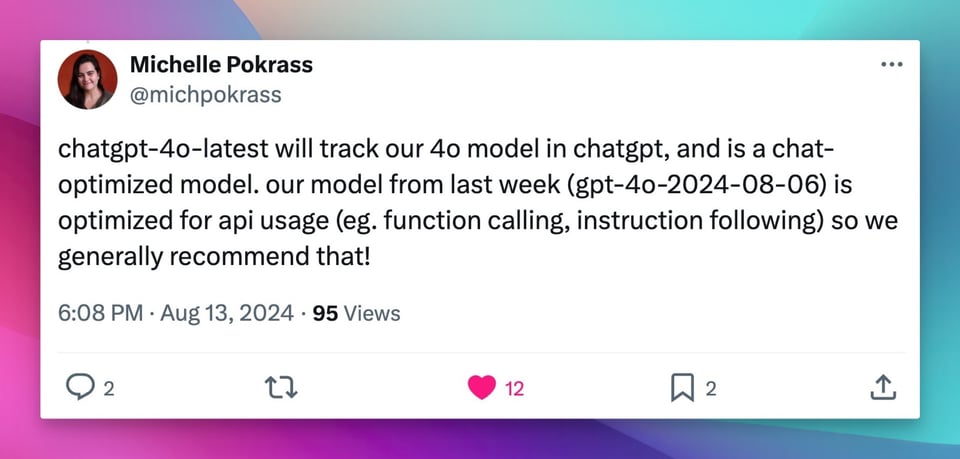

Grok-2 and ChatGPT-4o Latest Updates

A new model called Grok-2 has been released, outperforming Claude 3.5 and GPT-4-Turbo on the LMSYS leaderboard. Anthropic API has introduced prompt caching to reduce costs and latency by up to 90% and 80% respectively. Anthropic's turnaround story is highlighted and compared to other APIs like DeepSeek. In another section, ChatGPT API has improved the GPT-4o model, offering it via the gpt-4o-latest model. Cohere discussions revolve around the release of Grok 2, hiring at xAI, and discussions on speculation and model testing. Lastly, Cohere toolkit users discuss installation issues, building enterprise search chatbots, and other tool integrations.

Cohort Research Project

The user is part of the latest cohort at Fellowship.ai and is utilizing a project for research and learning. There are discussions about language chain support, access to LangGraph Cloud for LangSmith Plus users, and the use of LangChain Postgres library for caching. Members seek solutions for errors related to asyncio.run(), multi-LLM GUI recommendations, and Torchtune functionalities. Additionally, insights on Elon Musk's Grok 2, AI security challenges, and RedOps platform for testing chatbot and voicebot security are shared. LAION discussions include the search for open-source image annotation GUIs, Elon Musk's potential use of licenses, and Schnelle's software tool features. The OpenInterpreter channel talks about RealtimeSTT and Faster-Whisper tools for speech-to-text, hardware channel details, and Tool Use Tuesday plans integrating Open Interpreter and Obsidian.

DiscoResearch General

Members discuss challenges with extending Mistral beyond 8k and a tactic for model merging. Additionally, there is a conversation about building an agentic Jupyter Notebook automation system to improve efficiency and explore different notebook configurations.

FAQ

Q: What is the significance of FP8 training breakthrough achieved by Databricks' Mosaic AI platform?

A: Databricks' Mosaic AI platform achieved a 1.4x-1.6x speedup with FP8 training compared to BF16, showcasing the potential of FP8 for training large language models.

Q: How does Character.AI train their large language models, and what is the unofficial size of one of their earlier models?

A: Character.AI employs int8 training, not FP8, for their large language models. The size of their model is not officially known, but an unofficial source suggests one of their earlier models is 108 billion parameters.

Q: What performance improvements have been demonstrated by AnswerAI's ColBERT model in comparison to bge-base on the BEIR benchmark?

A: AnswerAI has released a small but powerful version of their ColBERT model, called answerai-colbert-small-v1, that beats even bge-base on BEIR benchmark, showcasing its effectiveness and high performance.

Q: What criticisms were raised regarding Google's Gemini Live Demo, and what were the contrasting opinions discussed?

A: Swyxio criticized Google's Gemini Live Demo on YouTube, deeming it 'cringe'. Discussions followed on Gemini's potential to enhance voice assistants, with some emphasizing its capabilities while others remained skeptical.

Q: What advancements have been observed in OpenAI's GPT-4o model, particularly in comparison to Google's Gemini-1.5-Pro-Exp?

A: OpenAI's latest GPT-4o model has surpassed Google's Gemini-1.5-Pro-Exp in overall performance in the Chatbot Arena, demonstrating significant improvements in technical domains like Coding, Instruction-following, and Hard Prompts.

Q: What is the recent development regarding xAI's Grok-2 model and its performance on the LMSYS leaderboard?

A: xAI has released Grok-2, a new model that outperforms Claude 3.5 and GPT-4-Turbo on the LMSYS leaderboard, showcasing advancements in chat, coding, and reasoning capabilities.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!