[AINews] Learnings from o1 AMA • ButtondownTwitterTwitter

Chapters

AI Twitter Recap

OpenAI o1: AI Reddit Recap

AI Integration with Tools and Models

Translation Quality and Tone in LLMs

Translation Fidelity and Fleak AI Updates

Diffusion Discussions

Model Alignment and Testing

Latent Space

PyTorch Quantization Techniques

LlamaIndex General Discussions

Cohere API Discussions

Torchtune Development Discussions

LangChain AI Chat and Project Discussions

AI Twitter Recap

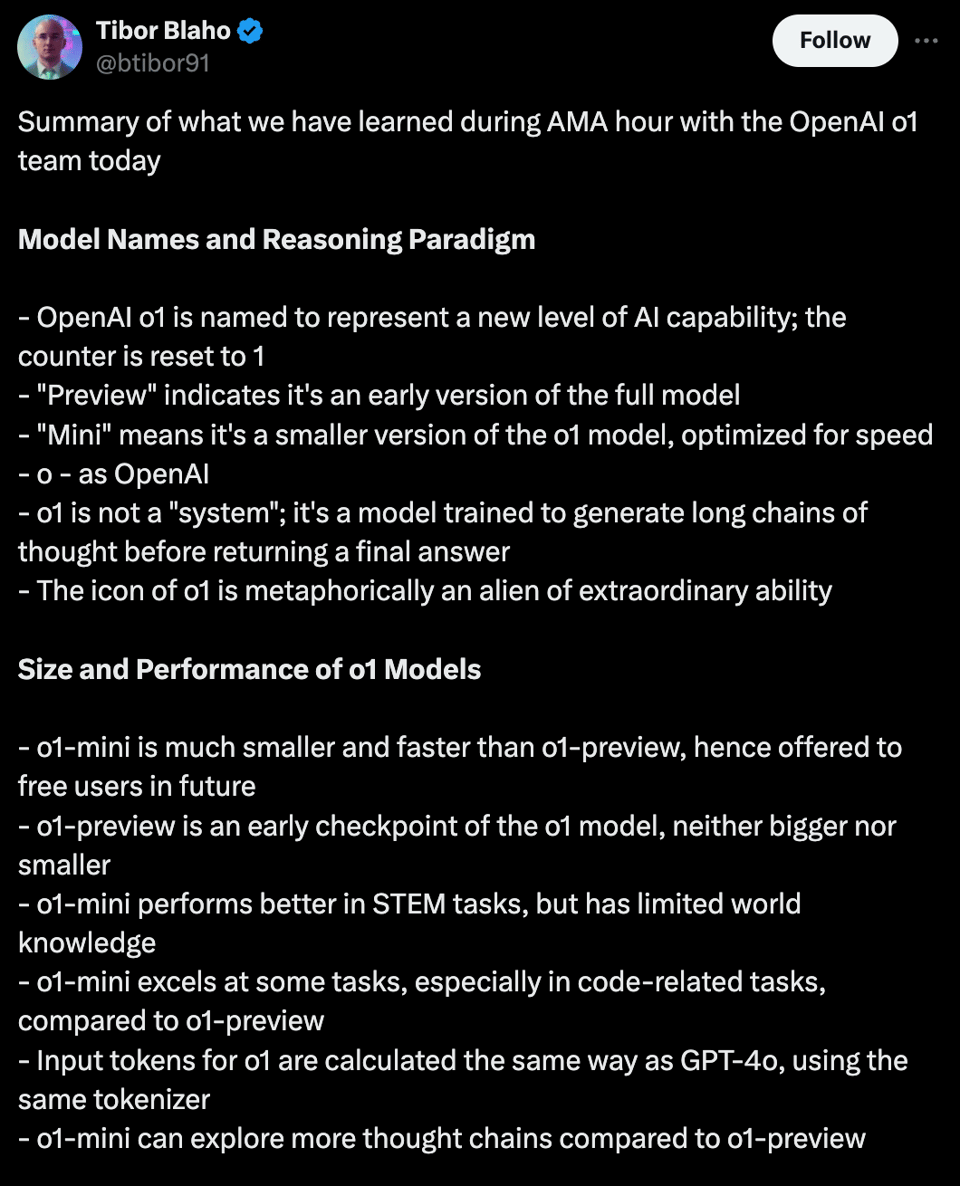

The AI Twitter Recap section covers the highlights and reactions to the release of the o1 Model Series by OpenAI. The section includes discussions on various aspects such as model capabilities, performance improvements, reasoning approach, model variants, technical details, safety improvements, potential applications, developer access, reactions, analysis, and humor. Users on Twitter shared their thoughts on o1 being a paradigm shift in AI development, its comparison to other models, the hidden reasoning tokens, cost considerations, and even indulged in memes and humor related to the model's name.

OpenAI o1: AI Reddit Recap

The section discusses the new OpenAI o1 model and its significant advancements in reasoning capabilities as showcased in various STEM and coding tasks. The model's performance improvements suggest a remarkable leap in AI capabilities for technical and scientific applications. Additionally, debates and discussions around the model, its performance on different tasks, user excitement over its outperforming of human experts, and limitations observed in practical coding tasks are highlighted. Furthermore, the section explores the preliminary LiveBench results where the o1-mini model decisively beats Claude Sonnet 3.5, indicating a significant advancement in AI reasoning capabilities.

AI Integration with Tools and Models

Developers are enhancing their tools with AI integration to boost efficiency and productivity. OpenRouter introduces OAuth support for coding plugins, allowing developers to seamlessly integrate custom AI models. TypeScript simplifies development with LlamaIndex.TS, offering crucial features for AI integration. Vim enthusiasts collaborate to boost coding speed with AI assistance, sharing resources for mastering editors. Fine-tuners face challenges with memory leaks and VRAM limitations, seeking efficient configurations. New tools like MAX 24.5 and Open Interpreter raise the bar in AI performance and efficiency. AI policy discussions heat up with debates over AI model evaluations, as calls for fair benchmarks that factor compute budgets ring louder.

Translation Quality and Tone in LLMs

Members discussed the challenges of fine-tuning LLMs for translations, noting that while LLMs capture the gist of text, they often miss key tone and style elements. This highlights the need for improved translation quality techniques to preserve essential nuances. Additionally, members highlighted struggles in capturing tone and style accurately in translations using LLMs, prompting calls for sharing methods and insights to enhance the overall translation quality.

Translation Fidelity and Fleak AI Updates

This section discusses the concept of translation fidelity and ongoing challenges in the field. It also elaborates on recent updates from Fleak AI, including hosting a private gathering, its focus as a Serverless API Builder, and its community-building initiatives. Fleak AI aims to foster connections and discussions among developers and users. The section provides insights into the alignment lab AI Discord, Mozilla AI Discord, DiscoResearch Discord, and AI21 Labs (Jamba) Discord, highlighting their lack of new messages and welcoming feedback. Lastly, it introduces the detailed by-channel summaries and links for OpenRouter, covering announcements, model releases, rate limits, and technical issues reported by users.

Diffusion Discussions

Batch Size in TTS Training: A user is questioning whether training a TTS model with a batch size of only 4 is detrimental due to limited VRAM, having previously trained on a size of 8. The community's insights on optimal batch sizes in TTS contexts remain awaited.

DDPMScheduler Sampling Step Confusion: A newcomer to diffusion noticed that the sampling step in the DDPMScheduler differs from Algorithm 2 of the DDPM paper.

Model Alignment and Testing

Concerns were raised about the model's inability to align autonomously and the importance of testing the model in adversarial environments. Members also inquired about specific models like Solar Pro 22B and explored precision annealing techniques for training language models. Additionally, the potential benefits of FP8 training regimes were discussed, highlighting the need for reevaluation as training techniques evolve.

Latent Space

Members in the Latent Space channel discussed various topics related to Cursor issues, using AI tools, Vim preferences, HTEC AI Copilot Report, and learning resources for Neovim. Some highlights include: - Cursor facing scaling issues with code completion and document generation, leading to doubts about their research methods. - AI copilots like Cursor and Claude were reviewed by a nearshore consultancy, noting increased efficiency in coding with AI tools. - Vim was praised for enhancing coding speed once mastered, with members sharing resources like Vim Adventures for skill improvement. - The HTEC team evaluated 26 AI tools but found inconclusive results due to limited testing time, raising questions about the depth of their analyses. - The community shared resources for mastering Neovim and engaged in collaborative discussions exploring new tools and techniques for development.

PyTorch Quantization Techniques

Efficient int8 and fp16 matrix multiplication:

- Allows for performing fp16 input/int8 matmul on the GPU without dequantizing, directly casting the int8 weight to fp16 inside the kernel. Current implementation generates a mixed-matmul triton kernel, avoiding unnecessary dequantization.

Insights on PyTorch quantization techniques:

- Recommends trying int4_weight_only quantization with bfloat16 or fp6 quantization (fpx_weight_only(3, 2)) to reduce memory footprint. Reference to documentation provided for further understanding.

Discussion on _weight_int8pack_mm Function:

- Speculated to operate similarly to fp16 input/int8 matmul by casting the weight matrix to the active data type and applying scaling, ensuring efficient handling of mixed data types within matrix multiplication.

Reference to optimum-quanto's kernels:

- Mentions optimum-quanto kernels used for quantization, detailed in their repository, showcasing non-torchao techniques, providing insights on alternative approaches.

LlamaIndex General Discussions

This section discusses various topics related to the LlamaIndex Discord channel, including limitations of LlamaIndex with function calls, understanding workflows, utilizing chat engines for document interactions, differences in CSV readers, and using ChromaDB for document context retrieval. The discussion provides insights into different functionalities and features of LlamaIndex and how users can make the most out of the platform.

Cohere API Discussions

Users discussed setting a spending limit on Cohere API to manage usage and avoid unexpected bills. The conversation also touched on billing concerns, accessing options on the billing dashboard, and recommended contacting Cohere support for assistance. Additionally, users explored rate limiting for API requests to control usage and safeguard against potential threats. Overall, the discussions highlighted the importance of managing API usage effectively and seeking support when faced with billing or usage issues.

Torchtune Development Discussions

The Torchtune development channel is active with discussions on optimizing performance and efficiency. Members are suggesting changes like setting log_peak_memory_stats to True by default for performance monitoring. There is a consensus to switch recipe tests to run on GPU resources for better efficiency. The community is exploring solutions for collating and masking data more efficiently, with batched generation proposed as a solution. Plans are underway to adopt online packing for iterable datasets to enhance data handling and workflow efficiency.

LangChain AI Chat and Project Discussions

This section discusses various projects and topics related to LangChain AI, including inquiries about conversational history, vector database implementation, consultation projects, and the impact of OpenAI's advancements. Additionally, projects like Warhammer Adaptive RAG and NPC builder collaborations are highlighted. In the context of AI engineering positions and fine-tuning LLMs, the section covers opportunities at Vantager, challenges in tone preservation during translations, and considerations for future C extensions in Tinygrad.

FAQ

Q: What is the focus of the AI Twitter Recap section in the given essay?

A: The AI Twitter Recap section covers highlights and reactions related to the release of the o1 Model Series by OpenAI, discussing various aspects such as model capabilities, performance improvements, reasoning approach, model variants, technical details, safety improvements, potential applications, developer access, reactions, analysis, and humor.

Q: What significant advancements in reasoning capabilities are highlighted in the discussion of the new OpenAI o1 model?

A: The new OpenAI o1 model is showcased to have significant advancements in reasoning capabilities, especially in STEM and coding tasks, hinting at a remarkable leap in AI capabilities for technical and scientific applications.

Q: What are some of the challenges and limitations observed in the practical coding tasks regarding the o1 model?

A: Debates and discussions in the section highlight the model's performance on different tasks, user excitement over its outperforming of human experts, and limitations observed in practical coding tasks.

Q: What are some of the discussions around fine-tuning LLMs for translations in the essay?

A: Members discuss the challenges of fine-tuning LLMs for translations, noting that while LLMs capture the gist of text, they often miss key tone and style elements. This emphasizes the need for improved translation quality techniques to preserve essential nuances.

Q: What insights are provided regarding efficient int8 and fp16 matrix multiplication in the essay?

A: The essay discusses how efficient int8 and fp16 matrix multiplication allows performing operations on the GPU without dequantizing, directly casting the int8 weight to fp16 inside the kernel, thus enhancing performance and efficiency.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!