[AINews] Mini, Nemo, Turbo, Lite - Smol models go brrr (GPT4o version)

Chapters

Efficiency in AI News

AI Reddit Recap

AI Discord Recap: GPT-4o Mini and Mistral NeMo

Deep Learning Hardware Optimization

OpenInterpreter's Various Discussions

HuggingFace Discord

HuggingFace Community Discussions

Optimizing CUDA Operations and AI Models

Tokenization-Free Models and Interpretability in AI

Nous Research AI World-Sim

Big Model Drop Day Announced

Interconnects: Learning Topics in AI Discussions

Perplexity AI Sharing

NextCloud and Perplexity API; API Formatting and Model Details; LangChain Integration and Tools; Easy Folders Launch; RAG and LangGraph; Query Rewriting; Llama Index General Discussion; OpenInterpreter Milestone; OpenAccess AI Collective Updates

Training Transformers and Performance Discrepancies

Efficiency in AI News

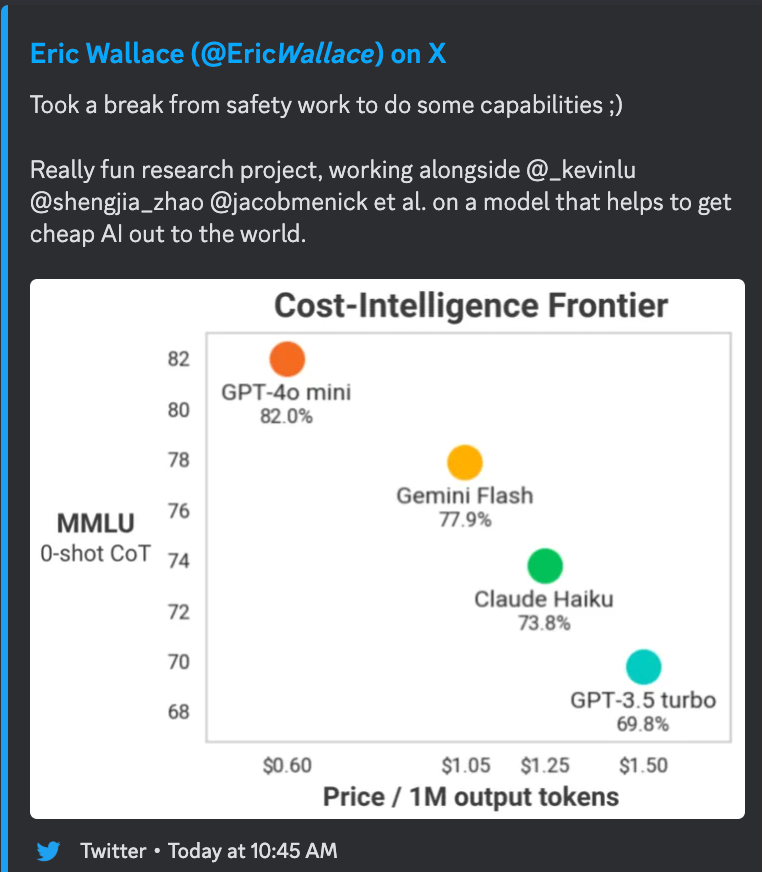

The latest AI News edition covers a range of new AI models and developments. Noteworthy updates include the introduction of GPT-4o-mini with impressive pricing and performance metrics, Mistral NeMo, built in collaboration with NVIDIA and supporting a 128k-token context window, and the introduction of Together Lite and Turbo models. Additionally, DeepSeek V2 has been open-sourced, hinting at more unreleased models in the pipeline. The post also provides insights into the upcoming release of Llama 3 400B. The section ends with an observation that so many releases have clustered together, possibly due to astrological reasons or upcoming events like ICML.

AI Reddit Recap

The AI Reddit Recap includes various themes related to AI developments and discussions on platforms such as /r/LocalLlama, /r/machinelearning, /r/openai, and others. These themes cover diverse topics such as AI in comic and art creation, advancements in LLM quantization techniques, innovative AI education platforms, real-time AI video generation with Kling AI, and more. The discussions range from the limitations posed by EU regulations on AI models' availability to the use of AI tools in comic creation workflows and the ethical implications of AI-generated art.

AI Discord Recap: GPT-4o Mini and Mistral NeMo

The AI Discord Recap discusses the launch of GPT-4o Mini by OpenAI, a cost-effective and powerful model replacing GPT-3.5 Turbo. Mistral NeMo, a collaboration between Mistral AI and NVIDIA, introduces a 12B model with a 128k context window for advanced reasoning capabilities. The release notes of Mistral NeMo highlight its prowess in coding accuracy and world knowledge. The Discord also delves into hardware optimization, AI advancements, and multimodal AI developments in the industry.

Deep Learning Hardware Optimization

Community discussions highlighted the advantages of utilizing A6000 GPUs for deep learning tasks, particularly in training models with high performance at a reasonable cost. Users reported successful configurations that leverage the A6000's capabilities for various AI applications. Additionally, members shared their configurations, including setups with dual A6000s, which were repurposed from fluid dynamics simulations to AI training. These setups demonstrate the versatility of A6000 GPUs in handling complex computational tasks.

OpenInterpreter's Various Discussions

Discussions in the OpenInterpreter Discord channel cover a range of topics, including the cost-effective performance of an AI model over GPT-4, the speed and versatility of a new multimodal AI, and the impressive entrance of Mistral NeMo with its 12B parameter size and 128k token context window. Members express excitement about the applications of these AI advancements and engage in conversations about their capabilities and potential impact on the field.

HuggingFace Discord

Mac M1’s Model Latency Lag:

- Users experienced latency issues with Hugging Face models on Mac M1 chips due to model loading times during preprocessing pipeline initiation. When experimenting with multiple models, the delay was exacerbated, as each model required individual downloads and loads, contributing to the initial latency.
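The load-once behavior behind that initial latency can be illustrated with a small sketch. It is not Hugging Face code: `slow_load` is a hypothetical stand-in for an expensive download-and-load step, and `get_model` is an assumed helper name. The sketch only shows that caching the loaded object means each model pays its load cost once per process rather than on every call.

```python
from functools import lru_cache
from typing import Callable, Dict

# Counts how many times each (hypothetical) model was actually loaded.
LOAD_COUNT: Dict[str, int] = {}


def slow_load(model_name: str) -> Callable[[str], str]:
    """Hypothetical stand-in for an expensive model download and load."""
    LOAD_COUNT[model_name] = LOAD_COUNT.get(model_name, 0) + 1

    def run(text: str) -> str:
        # Placeholder "inference": tag the input with the model name.
        return f"{model_name}:{text}"

    return run


@lru_cache(maxsize=None)
def get_model(model_name: str) -> Callable[[str], str]:
    """Return a loaded model, loading it only on the first request."""
    return slow_load(model_name)
```

Requesting the same name twice triggers a single load, while each additional model name still pays its own first-load cost, which is consistent with the report that experimenting with several models made the delay worse.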

LAION Discord:

- Meta's shift toward multimodal AI models drew only sparse discussion after an article was shared. Its decision to pull Llama models from the EU market also met a quiet response, and the implications for innovation and regional access to AI tools have yet to be debated in depth.

- Codestral Mamba, co-created by Albert Gu and Tri Dao, promises advances in code generation with linear-time inference. The model aims to improve coding efficiency and can, in theory, handle sequences of unbounded length.

- The introduction of Prover-Verifier Games aims to improve the legibility of language models, supported by a few technical references. The community shows restrained enthusiasm pending practical applications.

- Despite NuminaMath-7B's success at AIMO, flaws in its basic reasoning serve as a cautionary tale: AI veterans noted that strong claims did not stand up to elementary reasoning tests on AIW problems.

Torchtune Discord:

- Confusion arose over using torchtune.data.InstructTemplate for custom template formatting, specifically regarding column mapping. It was clarified that column mapping renames dataset columns, and a follow-up question asked whether the alpaca cleaned dataset was being used.

- Automatic CI runs on every PR update confused contributors. The consensus advice was to disregard CI results until a PR moves from draft to ready for review.

- An attempt to get an LLM to consistently output 'HAHAHA' failed even though the model was fed a dataset built for that purpose. The experiment was a precursor to a more serious project using the alpaca dataset.
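The column-mapping idea from the first item can be sketched in plain Python. This is not torchtune's implementation: `TEMPLATE`, `format_instruction`, and the sample column names are hypothetical, chosen only to show how a mapping lets a template that expects `instruction` and `input` fields read from a dataset whose columns are named differently.

```python
from typing import Dict, Optional

# Simplified Alpaca-style prompt; the wording is illustrative only.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:\n"


def format_instruction(sample: Dict[str, str],
                       column_map: Optional[Dict[str, str]] = None) -> str:
    """Fill TEMPLATE, using column_map to locate each field in the sample."""
    column_map = column_map or {}
    # Fall back to the template's own field name when no mapping is given.
    instruction_key = column_map.get("instruction", "instruction")
    input_key = column_map.get("input", "input")
    return TEMPLATE.format(
        instruction=sample[instruction_key],
        input=sample[input_key],
    )
```

For a dataset with `question` and `context` columns, passing `{"instruction": "question", "input": "context"}` as the mapping routes those columns into the template without changing the dataset itself.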

HuggingFace Community Discussions

Users reported that RVC was not working, asked why its repositories are still online, and looked for alternative AI voice model projects. No solutions were offered, so the problem remained unresolved. A detailed approach for pre-training Mistral using unsupervised learning was shared, with advice on data format and token exclusion. A user named quirkyboi22 was reminded to use the proper communication channels instead of pinging admins about Huggingchat issues. The community suggested emailing the website for such problems, and an official response confirmed awareness of the issues.

In another section, members shared updates and new projects within the HuggingFace community. Topics included building sentiment analysis apps with Transformers.js, the launch of a Community Computer Vision Course, the release of the Mistral NeMo model, the automation of ML model training with AutoTrainer, and a reminder on content moderation in Discord. Each discussion was accompanied by relevant links for further information.

Optimizing CUDA Operations and AI Models

This section covers optimizing CUDA operations and advancing AI models. It includes techniques like Q-GaLore for fine-tuning optimization and Mistral AI's MathΣtral and Codestral projects. There are also discussions on building CUTLASS repo tutorials and using the Nsight CLI for remote profiling, along with deep copying in GPU operations, kernel parameter limits, layernorm optimizations, and quantization strategies. Lastly, it mentions new models such as the GoldFinch hybrid model, Finch-C2, and GPTAlpha, with insights on attention mechanisms, patch-level training, and scaling techniques for language models.

Tokenization-Free Models and Interpretability in AI

A member asked about the potential benefits or drawbacks of tokenization-free language models for interpretability. The discussion examined how tokenization affects model interpretability and stressed the need to account for it when designing and analyzing language models.

Nous Research AI World-Sim

Two updates were posted in the Nous Research AI World-Sim channel. The first reported downtime on the WorldSim platform, which was quickly resolved. The second noted that an issue with WorldSim was swiftly resolved after a member reported it, and thanked the community for its prompt response.

Big Model Drop Day Announced

Today is a big model drop day in the AI community, with significant updates and releases being highlighted. Members are encouraged to opt in to heavily updated thread discussions to stay informed on the latest developments.

Interconnects: Learning Topics in AI Discussions

In a discussion panel led by Nathan Lambert on 'ml-questions,' various topics regarding AI models and datasets were covered:

- A member inquired about the need for code-related PRM datasets and the usage of AST mutation methods.

- There was a discussion on using 'positive, negative, neutral' labels versus scalar values for PRMs, considering challenges in generating calibrated datasets.

- Members expressed interest in exploring research on PRMs and synthetic data through an MS program.

- A query about a book reference led to a vague response citing extensive litigation.

These discussions shed light on the ongoing developments and challenges within the AI community.

Perplexity AI Sharing

- Record-Breaking Stegosaurus Sale: Perplexity AI highlighted a record-breaking sale of a Stegosaurus fossil, generating significant interest.

  - The discussion emphasized the staggering price and the historical significance of the sale.

- Lab-Grown Pet Food Approved: A YouTube video announced the approval of lab-grown pet food, capturing the attention of the community.

  - The video highlights ethical considerations and the nutritional benefits of lab-grown options.

- Anthropic's $100M AI Fund: Perplexity AI revealed Anthropic's launch of a $100M fund aimed at advancing AI technologies.

  - Members discussed the potential impact on AI research and future innovations funded by this initiative.

- H2O-3 Code Execution Vulnerability: A critical page on Perplexity AI described a newly discovered code execution vulnerability in H2O-3.

  - The page detailed the risks and potential exploits, urging users to update their systems promptly.

NextCloud and Perplexity API; API Formatting and Model Details; LangChain Integration and Tools; Easy Folders Launch; RAG and LangGraph; Query Rewriting; Llama Index General Discussion; OpenInterpreter Milestone; OpenAccess AI Collective Updates

This section covers NextCloud and the Perplexity API, suggestions for formatting-free API responses, API calls to retrieve model details, LangChain integration, the Easy Folders launch, and RAG and LangGraph implementations. It also covers query rewriting, general Llama Index discussion, OpenInterpreter hitting a significant milestone, and updates from the OpenAccess AI Collective, including challenges with high context length, the Mistral NeMo release and performance comparison, and training transformers for reasoning.

Training Transformers and Performance Discrepancies

A member reported learning the hard way that a high context length during training has unexpected consequences. Mistral NeMo, a 12B model with a context window of up to 128k tokens, was released in collaboration with NVIDIA, showcasing state-of-the-art reasoning and coding accuracy. Performance discrepancies were noted in Mistral NeMo compared to Llama 3 8B. A paper discussed training transformers for reasoning, finding that they succeed through extended training beyond the point of overfitting. Discussions also highlighted concerns about overfitting in GEM-A, the use of Llama 3 as a reference model, the impact of lowering rank on eval loss, and observed improvements in training loss.

FAQ

Q: What are some notable updates in the latest AI News edition?

A: Some notable updates include the introduction of GPT-4o-mini, Mistral NeMo's release in collaboration with NVIDIA, the introduction of Together Lite and Turbo models, the open-sourcing of DeepSeek V2, and insights into the upcoming release of Llama 3 400B.

Q: What themes are covered in the AI Reddit Recap?

A: Themes in the AI Reddit Recap cover topics such as AI in comic and art creation, advancements in LLM quantization techniques, innovative AI education platforms, real-time AI video generation with Kling AI, and discussions on the limitations posed by EU regulations on AI models' availability.

Q: What are some key discussions in the Torchtune Discord?

A: Discussions in the Torchtune Discord include confusion over torchtune.data.InstructTemplate usage and column mapping, automatic CI runs on draft PRs, and an attempt to make an LLM consistently output 'HAHAHA'.

Q: What is the focus of the discussions in the LAION Discord?

A: Discussions in the LAION Discord focus on shifts towards multimodal AI models, advancements in code generation with Codestral Mamba, Prover-Verifier Games for improving language model legibility, and lessons learned from NuminaMath-7B's basic reasoning flaws.

Q: What important topics are covered in the discussions related to optimizing CUDA operations and advancing AI models?

A: Discussions cover techniques like Q-GaLore for fine-tuning optimization, Mistral AI's MathΣtral and Codestral projects, building CUTLASS repo tutorials, using the Nsight CLI for remote profiling, deeper insights into GPU operations, and new models like the GoldFinch hybrid model and GPTAlpha.

Q: What was highlighted in the Nous Research AI World-Sim updates?

A: The updates reported downtime on the WorldSim platform, which was resolved swiftly, and an issue with WorldSim that was resolved promptly after a member reported it.
