[AINews] not much happened this weekend • Buttondown

Chapters

AI Twitter Recap

AI Reddit Recap

Fine-Tuning and Training Challenges, New Model Releases, and API Issues

GPU Memory Showdown and AI Integration Platforms

LAION Discord

Exploration of Advanced Architectures

Discussion Highlights

OpenAI api-discussions

Enhancements and Updates in Tools and Configurations

Response Evaluation and Research Advancements

Multimodal Models and Usage Metrics Feedback

Stable Diffusion Discussion

Interconnects - DSPy General Chat

DSPy LM Migration and New Features

Discord Channel Discussions

AI Twitter Recap

This section recaps AI-related discussions and developments on Twitter, covering AI model comparisons, research applications, safety, ethics, and industry news. Highlights include:

- Discussions of OpenAI's o1-preview performance and Claude 3.5 Sonnet

- Observations on LLM convergence and Movie Gen by Meta

- Implementations of Retrieval Augmented Generation (RAG) and AI in customer support

- Surveys of synthetic data generation models and the resurgence of RNNs for long sequence tasks

- Insights on biologically-inspired AI safety and debates on AI risk in the industry

AI Reddit Recap

Theme 1. Advancements in Small-Scale LLM Performance

-

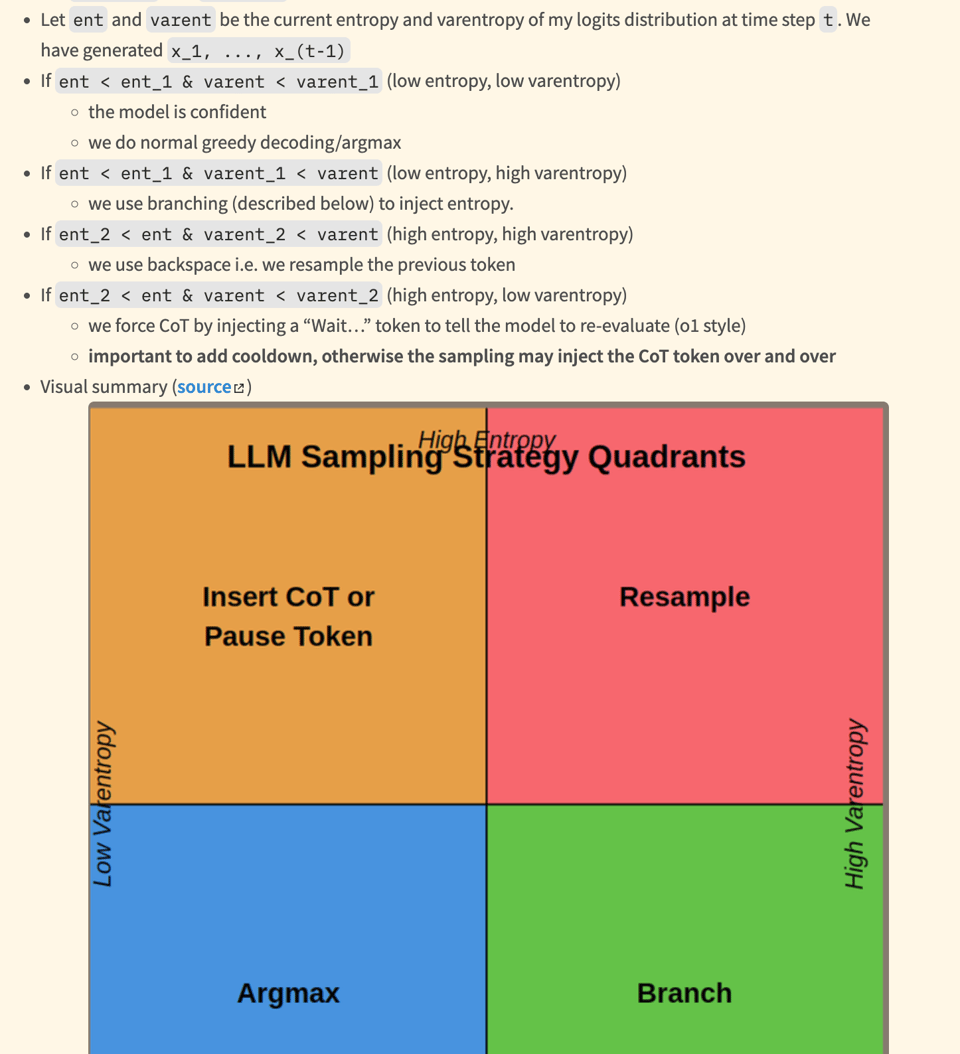

Adaptive Inference-Time Compute: Large Language Models (LLMs) can dynamically adjust computational resources during generation to potentially enhance output quality. This technique aims to improve computational efficiency and effectiveness in LLMs.

-

3B Qwen2.5 finetune beats Llama3.1-8B: Qwen2.5-3B model outperformed Llama3.1-8B on Leaderboard v2 evaluation, offering improved scores across various benchmarks.
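One simple way to picture adaptive inference-time compute is best-of-N sampling with early stopping: keep drawing candidates only while the best one still looks weak. The sketch below is an illustrative stand-in, not the method from the post. `generate` and `score` are hypothetical callables standing in for a model call and a quality estimate, and the threshold value is arbitrary.

```python
from typing import Callable, Tuple


def adaptive_best_of_n(
    generate: Callable[[], str],
    score: Callable[[str], float],
    threshold: float = 0.8,
    max_samples: int = 8,
) -> Tuple[str, float, int]:
    """Sample candidates until one scores at least `threshold`.

    Returns (best_text, best_score, samples_used). Easy inputs stop after
    one sample; hard inputs use up to `max_samples`.
    """
    if max_samples < 1:
        raise ValueError("max_samples must be at least 1")

    best_text, best_score = "", float("-inf")
    used = 0
    for used in range(1, max_samples + 1):
        candidate = generate()          # hypothetical model call
        candidate_score = score(candidate)  # hypothetical quality estimate
        if candidate_score > best_score:
            best_text, best_score = candidate, candidate_score
        if best_score >= threshold:
            break  # good enough: stop spending compute
    return best_text, best_score, used
```

The design choice here is that compute scales with difficulty rather than being fixed per request. A real system would need a trustworthy `score`, which is the hard part.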

Theme 2. Open-Source Efforts to Replicate o1 Reasoning

- It's not o1, it's just CoT: A critique of open-source attempts to replicate OpenAI's Q*/Strawberry (o1), arguing that they are Chain-of-Thought (CoT) implementations rather than genuine o1 capabilities.

- A new attempt to reproduce the o1 reasoning on top of the existing models: Aims to enhance existing language models' capabilities without retraining, using specialized prompts to elicit structured and logical outputs.

Theme 3. DIY AI Hardware for Local LLM Inference

- Built my first AI + Video processing Workstation: Describes a high-performance AI workstation designed for offline processing of Llama 3.2 70B model with impressive throughput capabilities.

- AMD Instinct Mi60: Details the AMD Instinct Mi60 GPU's performance and compatibility, showcasing benchmark results with Llama-bench.

Theme 5. Multimodal AI: Combining Vision and Language

- Qwen 2 VL 7B Sydney - Vision Model: Introduces a vision language model designed to provide detailed image commentary, particularly excelling at describing dog pictures.

Fine-Tuning and Training Challenges, New Model Releases, and API Issues

This section covers fine-tuning and training challenges, new model releases, and API issues in the AI community. Topics range from overcoming fine-tuning bottlenecks with Unsloth Studio to debates on LoRA limitations and the performance of new models such as Qwen 2.5 and Dracarys 2. It also addresses API errors with Cohere and the rising cost of the OpenAI API for media analysis, along with community discussion of potential solutions.

GPU Memory Showdown and AI Integration Platforms

- Members compared the Tesla P40 and RTX 4060 Ti for AI tasks, judging suitability by VRAM capacity.

- OpenRouter's collaboration with Fal.ai enhances image workflows via Gemini.

- API4AI facilitates easy integration with services like OpenAI and Azure.

- Users reported issues with OpenRouter API double generation and 404 errors.

- Math models like o1-mini excel in STEM tasks within OpenRouter.

- Inquiries for non-profit discounts reflect the need for affordable tech access for educational initiatives.

LAION Discord

The LAION Discord featured updates on the LlamaIndex Agentic RAG-a-thon event, challenges with the fschad package, discussions of O1 performance, interest in the Clip Retrieval API, and insights from training with 80,000 epochs. A new tool, AutoArena, was also introduced, reflecting the community's interest in practical AI development tools.

Exploration of Advanced Architectures

Curiosity about Parallel Scan Algorithm

A member inquired about the concept of a parallel scan algorithm, particularly in the context of training minimal RNNs efficiently in parallel. Another member shared a document on parallel prefix sums to provide further insights.
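To make the parallel scan idea concrete, here is a minimal sketch in plain Python. It assumes the recurrence has the linear form h_t = a_t * h_(t-1) + b_t, which is an assumption made for illustration since the discussion does not give the exact RNN. Each step is then an affine map, and composing affine maps is associative, so a prefix scan can produce every h_t in about log2(n) rounds. The function names are hypothetical, and the rounds are simulated sequentially here rather than run on parallel hardware.

```python
from typing import List, Sequence, Tuple

Pair = Tuple[float, float]  # (a, b) represents the affine map h -> a * h + b


def combine(left: Pair, right: Pair) -> Pair:
    """Compose two affine maps: apply `left` first, then `right`."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def sequential_recurrence(a: Sequence[float], b: Sequence[float], h0: float = 0.0) -> List[float]:
    """Reference implementation: one step at a time, O(n) dependent steps."""
    h, out = h0, []
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out


def scan_recurrence(a: Sequence[float], b: Sequence[float], h0: float = 0.0) -> List[float]:
    """Hillis-Steele inclusive scan over affine maps.

    Within one round every position is independent of the others, so on
    parallel hardware each round could run in a single step.
    """
    pairs: List[Pair] = list(zip(a, b))
    n = len(pairs)
    step = 1
    while step < n:
        pairs = [
            combine(pairs[i - step], pairs[i]) if i >= step else pairs[i]
            for i in range(n)
        ]
        step *= 2
    # pairs[i] now maps h0 directly to h_i.
    return [a_i * h0 + b_i for a_i, b_i in pairs]


if __name__ == "__main__":
    a = [0.5, 0.9, 0.1, 0.7, 0.3]
    b = [1.0, 2.0, 3.0, 4.0, 5.0]
    print(sequential_recurrence(a, b, h0=2.0))
    print(scan_recurrence(a, b, h0=2.0))
```

Both functions should agree up to floating-point rounding. The scan does more total work than the loop but has far fewer dependent steps, which is the trade that makes it attractive on GPUs.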

Exploration of Self-Improvement in LLMs

A study delved into the potential of Large Language Models (LLMs) to enhance their reasoning abilities through self-improvement using Chain-of-Thought (CoT) on pretraining-scale data without the need for supervised datasets. Leveraging vast amounts of unstructured text in pretraining data holds the promise of significantly boosting LLMs' reasoning capabilities.

Discussion Highlights

- Members discussed various oriented object detection models, including YOLOv8 OBB, Rotated Faster R-CNN, Rotated RetinaNet, Oriented R-CNN, and Gliding Vertex.

- Concerns were raised about inaccurate bounding boxes with a fine-tuned DETR model, particularly in the bottom right region of images.

- Inquiries were made about CNN Autoencoder output smoothing and extending the character set in TrOCR models.

- The HuggingFace NLP channel had discussions on summarization issues, running Google T5 model locally, log data analysis, and loading models from Hugging Face.

- Within the GPU Mode channel, members discussed optimization techniques, Flux 1.1 Pro model efficiency, using pretrained weights in AutoencoderKL, and strategies for handling out of memory errors.

OpenAI api-discussions

Optimizing ChatGPT's Functions for Clarity:

A user suggested that improving ChatGPT's ability to analyze questions and clarify context could enhance performance, particularly in straightforward tasks like counting letters in words.

Effective Keyword Selection from Large Data Sets:

A user wanted to select 50 keywords from a set of 12,000 based on media file content, and raised concerns about the model's context window limitations.
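One common way around a context window limit is to process the candidates in batches and keep only the best from each, repeating until the target count remains. The sketch below illustrates that pattern under stated assumptions: `score` is a hypothetical stand-in for whatever relevance judgment the model would make, and the batch size of 500 is arbitrary. It is not a solution proposed in the discussion.

```python
from typing import Callable, List, Sequence


def select_keywords(
    keywords: Sequence[str],
    score: Callable[[str], float],
    keep: int = 50,
    batch_size: int = 500,
) -> List[str]:
    """Tournament-style selection: shortlist `keep` items per batch, repeat.

    `score` is a hypothetical relevance function; in practice each batch
    would be sent to the model in a single request that fits its context.
    """
    if batch_size <= keep:
        raise ValueError("batch_size must be larger than keep to make progress")

    candidates = list(keywords)
    while len(candidates) > keep:
        survivors: List[str] = []
        for start in range(0, len(candidates), batch_size):
            batch = candidates[start:start + batch_size]
            ranked = sorted(batch, key=score, reverse=True)
            survivors.extend(ranked[:keep])  # shortlist from this batch
        candidates = survivors
    return candidates
```

With 12,000 keywords and these defaults, the first round leaves 1,200 candidates, the second 150, and the third 50. When the relevance judgment depends on the other items in the batch, as it would with a model, the result may differ from a single global ranking.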

Challenges in Prompt Engineering:

There was a widespread concern regarding the complexity of prompt construction, especially when users needed deterministic algorithms to create prompts.

The Need for Different Communication Styles:

Users discussed the need for LLMs to adapt to unconventional communication styles, with one expressing frustration in simulating meaningful dialogue with AI.

Diverse Learning Approaches in AI Interaction:

Participants emphasized that everyone learns differently, comparing understanding AI to dog training, where technical knowledge may help some learners but not all.

Enhancements and Updates in Tools and Configurations

/read-only Gets Major Updates:

- The /read-only command now supports shell-style auto-complete across the full file system, while still auto-completing repo file paths as /add does. It also accepts globs such as src/**/*.py. These enhancements make it easier to navigate and manage files in the project.

YAML Config Format Overhaul:

- The YAML config file format has been updated to utilize standard list syntax with - list entries, ensuring better readability. Moreover, the --yes flag has been renamed to --yes-always, necessitating updates in existing YAML and .env files.

Launch Updates with Sanity Checks:

- A sanity check for the --editor-model has been added during launch, enhancing the integrity of the operation. Additionally, a --skip-sanity-check-repo switch is now available to speed up the launch process in larger repositories.

Bugfixes and Performance Improvements:

- A bugfix ensures that architect mode handles Control-C properly, improving overall user experience. The repo-map has been made deterministic, accompanied by improved caching logic for better performance.

Response Evaluation and Research Advancements

The section discusses various response evaluation methods, including pretraining on instruct models and fine-tuning strategies for Llama 3.1. It highlights a paper on COCONUT redefining reasoning for LLMs and the introduction of GenRM for reward models. Additionally, it covers the release of SwiftSage v2 for enhanced reasoning and new methods for contextualized document embeddings. The content also includes links to research papers on Meta Movie Gen, COCONUT reasoning paradigm, GenRM reward models, SwiftSage v2 introduction, and Contextualized Document Embeddings.

Multimodal Models and Usage Metrics Feedback

- Media Integration Models: The use of video, audio, and multimodal models is seen as a logical progression, with some users emphasizing their growing importance in the AI landscape.

- Issues with Double Generation Responses: Users encountered double generation responses when using the OpenRouter API due to specific setup issues. Adjustments were made to address 404 errors and possible timeouts.

- Math Models Performance: The o1-mini model was highlighted for its effectiveness in math and STEM tasks, sparking discussions on LaTeX rendering capabilities for math formulas within the OpenRouter chat room.

- Feedback on Usage Metrics: New usage metrics, detailing prompt and completion tokens, were observed in API responses, revealing information previously unknown to some users. The metrics adhere to GPT-4 tokenizer standards.

- Discount Inquiries for Non-Profit Organizations: An inquiry about potential discounts or credit options for non-profit educational organizations in Africa was made, reflecting broader interests in accessibility and supportive pricing within the AI community.
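For the usage metrics mentioned above, the following sketch shows how prompt and completion token counts might be read from a response body. It assumes an OpenAI-style `usage` object with `prompt_tokens`, `completion_tokens`, and `total_tokens` fields; the sample payload, its values, and the `read_usage` helper are made up for illustration.

```python
import json
from typing import Dict

# Illustrative payload only: the id, model name, and counts are invented.
SAMPLE_RESPONSE = """
{
  "id": "gen-example",
  "model": "example/model",
  "usage": {"prompt_tokens": 12, "completion_tokens": 30, "total_tokens": 42}
}
"""


def read_usage(raw_json: str) -> Dict[str, int]:
    """Extract token counts, defaulting to zero when `usage` is absent."""
    payload = json.loads(raw_json)
    usage = payload.get("usage") or {}
    prompt = int(usage.get("prompt_tokens", 0))
    completion = int(usage.get("completion_tokens", 0))
    total = int(usage.get("total_tokens", prompt + completion))
    return {
        "prompt_tokens": prompt,
        "completion_tokens": completion,
        "total_tokens": total,
    }


if __name__ == "__main__":
    print(read_usage(SAMPLE_RESPONSE))
```

Defaulting to zero keeps callers from failing on responses that omit the field, at the cost of hiding the difference between "no usage reported" and "zero tokens".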

Stable Diffusion Discussion

This section compares AMD and NVIDIA GPUs for image generation in Stable Diffusion, where the RTX 4070 is recommended over the RX 6900 XT. In text-to-video generation, CogVideoX surpasses older models such as SVD. Users also shared their preferences between ComfyUI and Forge UI for Stable Diffusion, with ComfyUI favored for its ease of use. Other threads covered training challenges with LoRA for SDXL and questions about post-generation edits.

Interconnects - DSPy General Chat

The discussion in the DSPy General chat included various topics such as the implementation of TypedPredictors without schema formatting logic, the addition of traceability in DSPy for cost management, transitioning to the dspy.LM interface, evaluating custom LM versus custom Adapter options, and the deprecation of custom LM clients. Members shared insights on these topics and provided troubleshooting solutions for issues encountered during the transition. Overall, the chat focused on enhancing functionalities and addressing technical challenges in the DSPy framework.

DSPy LM Migration and New Features

The documentation highlights a decrease in the need for custom LM clients since DSPy 2.5, advocating for migration to dspy.LM. Users are advised to consult migration guides for leveraging new features and ensuring compatibility with future updates. Additionally, refer to the provided links for creating custom LM clients and relevant pull requests. It is essential to stay updated with the migration process and embrace the advancements in DSPy for optimized performance.

Discord Channel Discussions

This section provides insights into various discussions happening on different Discord channels related to AI and technology. From comparing costs between different platforms to innovative ideas like integrating a digital assistant cap, the community members explore a range of topics including upcoming events, research projects, and tools for automating QA processes. The section also highlights community inquiries about data pipelines for model fine-tuning, building agents that can spend money, and seeking teammates for hackathons.

FAQ

Q: What is the concept of adaptive inference-time compute in Large Language Models (LLMs)?

A: Adaptive inference-time compute in LLMs refers to the ability of these models to dynamically adjust computational resources during generation to potentially enhance output quality, aiming to improve computational efficiency and effectiveness.

Q: How did the Qwen2.5-3B model perform compared to the Llama3.1-8B model?

A: The Qwen2.5-3B model outperformed the Llama3.1-8B model on Leaderboard v2 evaluation, offering improved scores across various benchmarks.

Q: What was highlighted regarding open-source attempts to replicate OpenAI's Q*/Strawberry (o1)?

A: One critique argued that these attempts are Chain-of-Thought (CoT) implementations rather than genuine o1 capabilities. Separately, a new attempt aims to reproduce o1 reasoning on existing models without retraining, using specialized prompts for structured and logical outputs.

Q: What were the key details shared about DIY AI hardware for local LLM inference, specifically using the AMD Instinct Mi60 GPU?

A: Details included the description of a high-performance AI workstation for offline processing of the Llama 3.2 70B model and benchmark results showcasing compatibility and performance with the AMD Instinct Mi60 GPU.

Q: What is the significance of Multimodal AI in combining vision and language, as demonstrated by the Qwen 2 VL 7B Sydney - Vision Model?

A: Multimodal AI combines vision and language to provide detailed image commentary, with the specific model excelling at describing dog pictures, showcasing advancements in AI technology.

Q: How was the potential for self-improvement in Large Language Models (LLMs) discussed?

A: The discussion highlighted the potential for LLMs to enhance reasoning abilities through self-improvement using Chain-of-Thought (CoT) on pretraining-scale data, without the need for supervised datasets, by leveraging vast amounts of unstructured text.

Q: What updates were mentioned about the /read-only command and YAML config format in the context of project management?

A: Updates included the support for shell-style auto-complete in the /read-only command, standardizing the YAML config file format, and introducing sanity checks and performance improvements for better project management.

Q: What comparisons were made between AMD and NVIDIA for image generation in Stable Diffusion?

A: The RTX 4070 was recommended over the RX 6900 XT for image generation. The same discussion noted that CogVideoX surpasses older models such as Svd in text-to-video generation.

Q: What were the key topics of discussion in the DSPy General chat related to the DSPy framework?

A: Discussions included the implementation of TypedPredictors, traceability for cost management, transitioning to the dspy.LM interface, evaluation of custom LM versus custom Adapter options, and the deprecation of custom LM clients, focusing on enhancing functionalities and addressing technical challenges.

Q: What insights were shared about the migration process and upgrades in DSPy for optimized performance?

A: The documentation highlighted the decrease in the need for custom LM clients since DSPy 2.5, advocating for migration to dspy.LM, providing migration guides for new features, and ensuring compatibility with future updates to optimize performance.
