[AINews] o1: OpenAI's new general reasoning models

Chapters

AI Twitter Recap

AI Reddit and Discord Recap

Innovations in LLM Training and Optimization

CUDA MODE Discord

Discord Discussions on OpenAI Models

OpenAI AI Enhancements

AI and ML Discussions

Amazon Multilingual Dataset and NLP Tasks

Developer Collaborations and Project Support

TorchAO and Challenges

Eleuther: Contributions and Transitions

Transition to Postdoc and Module Error

Challenges and Discussions in AI Communities

AI Twitter Recap

Nvidia and AI Chip Competition:

- Market share shifts: Nvidia is starting to lose share to AI chip startups; CUDA vs. Llama comparison; Nvidia still dominates in training but may face competition in inference; SambaNova's impressive performance.

New AI Models and Releases:

- Pixtral 12B release; LLaMA-Omni introduction for speech interaction; Reader-LM details shared; General OCR Theory model description.

AI Research and Developments:

- Research on superposition prompting; LongWriter paper discussion; AI usage in scientific discovery.

AI Reddit and Discord Recap

The AI Reddit recap highlighted themes including new AI model releases, open-source speech models, benchmarking of LLM deployments, AI coding assistants, and societal impacts. The Discord recap covered the launch of OpenAI's o1 models, challenges in AI training, innovations in AI tools, and applications in gaming and personal assistants. Both platforms raised concerns about model cost, performance, and practicality, as well as privacy features in the latest updates.

Innovations in LLM Training and Optimization

PLANSEARCH Algorithm Boosts Code Generation:

Research on the PLANSEARCH algorithm shows that it improves LLM-based code generation by identifying diverse candidate plans, which makes the search more efficient. By broadening the range of generated observations, PLANSEARCH achieves performance gains on benchmarks such as HumanEval+ and LiveCodeBench.
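As a rough illustration of the idea described above (generate varied observations, combine them into different plans, then write code from each plan), here is a minimal sketch. It is not the authors' implementation: the `llm` callable, the prompt strings, the parameter names, and the `toy_llm` stand-in are all hypothetical, chosen only so the example runs offline.

```python
from itertools import combinations
from typing import Callable, List


def plan_search(problem: str, llm: Callable[[str], str],
                num_observations: int = 3, subset_size: int = 2) -> List[str]:
    """Return one candidate solution per combination of observations."""
    # Step 1: ask for several distinct observations about the problem.
    observations = [
        llm(f"Observation {i} about: {problem}")
        for i in range(num_observations)
    ]
    candidates = []
    # Step 2: each subset of observations seeds a different plan.
    for subset in combinations(observations, subset_size):
        plan = llm(f"Plan for '{problem}' using: " + "; ".join(subset))
        # Step 3: turn each plan into a candidate program.
        candidates.append(llm(f"Code for plan: {plan}"))
    return candidates


def toy_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model: it just wraps the prompt.
    return f"<{prompt}>"


candidates = plan_search("sum a list", toy_llm)
```

With three observations taken two at a time, the sketch produces three distinct candidates. In practice each candidate would then be checked against tests, a step omitted here.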

Dolphin Architecture for Efficient Context Processing:

The Dolphin architecture introduces a 0.5B-parameter decoder designed for energy-efficient long-context processing, reducing latency 5-fold. Empirical results show a 10-fold improvement in energy efficiency, positioning it as a significant advance in model processing techniques.

CUDA MODE Discord

- Exciting Compute Credits for CUDA Hackathon: Organizers secured $300K in cloud credits, along with access to node clusters for participants, including SSH access for serverless scaling with the Modal stack.

- torch.compile support: Version 0.2.2 of the MobiusML repo integrates torch.compile into the model.generate() function, eliminating the HFGenerator dependency.

- GEMM FP8 implementation: The update brings SplitK GEMM, addressing issue #65, with added documentation for developers.

- Hiring: Aurora Innovation is hiring engineers for its commercial launch, seeking experts in GPU acceleration and CUDA/Triton tools.

- OpenAI competitiveness: Participants expressed concerns about OpenAI's competitiveness with rivals, emphasizing the need for innovative training strategies.
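For readers unfamiliar with the SplitK GEMM mentioned above, the following sketch shows the general split-K idea in plain Python: the shared K dimension is cut into chunks, each chunk produces a partial product, and the partials are summed. This is only a conceptual illustration, not the code from the referenced repository. The function names are hypothetical, FP8 arithmetic is not modeled, and the chunks are processed serially here, whereas a GPU kernel would compute them in parallel.

```python
from typing import List

Matrix = List[List[float]]


def matmul_slice(a: Matrix, b: Matrix, k_start: int, k_end: int) -> Matrix:
    """Partial product of a and b using only indices k_start..k_end-1 of K."""
    rows, cols = len(a), len(b[0])
    return [
        [sum(a[i][k] * b[k][j] for k in range(k_start, k_end))
         for j in range(cols)]
        for i in range(rows)
    ]


def split_k_gemm(a: Matrix, b: Matrix, splits: int) -> Matrix:
    """Compute a @ b by summing partial products over chunks of K."""
    k_total = len(b)
    rows, cols = len(a), len(b[0])
    chunk = (k_total + splits - 1) // splits  # ceiling division
    result = [[0.0] * cols for _ in range(rows)]
    for s in range(splits):
        k_start = s * chunk
        k_end = min(k_start + chunk, k_total)
        if k_start >= k_end:
            break  # more splits requested than K can supply
        partial = matmul_slice(a, b, k_start, k_end)
        # Reduction step: accumulate this chunk's contribution.
        for i in range(rows):
            for j in range(cols):
                result[i][j] += partial[i][j]
    return result


a = [[1, 2, 3, 4], [5, 6, 7, 8]]
b = [[1, 0], [0, 1], [1, 0], [0, 1]]
product = split_k_gemm(a, b, splits=2)
```

Splitting along K mainly helps when the output matrix is small but K is large, since it exposes more independent work than tiling the output alone.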

Discord Discussions on OpenAI Models

LLM Finetuning (Hamel + Dan) Discord

- A member praised Literal AI for its usability, emphasizing integrations and intuitive design for smoother operations.

- LLM observability discussed for quicker iterations and debugging using logs to fine-tune models, aiming at improved performance and cost reduction.

- Emphasis on prompt performance tracking for reliable LLM outputs to maintain quality assurance.

- Insights shared on building a robust LLM monitoring and analytics system for optimal production performance.

- Discussion on fine-tuning LLMs for translations, highlighting the challenges and opportunities for developers.

Gorilla LLM (Berkeley Function Calling) Discord

- Recent Gorilla LLM test results raised concern: perfect accuracy in the irrelevance category but 0.0 in both the Java and JavaScript categories.

- User seeks help on prompt splicing for qwen2-7b-chat, aiming to enhance testing experience with effective prompt strategies.

MLOps @Chipro Discord

- Member queries on predictive maintenance experiences, seeking resources on models and practices for unsupervised methods.

- Discussion on mechanical and electrical focus in monitoring, aiming to enhance maintenance and reduce failure rates.

OpenAI AI Enhancements

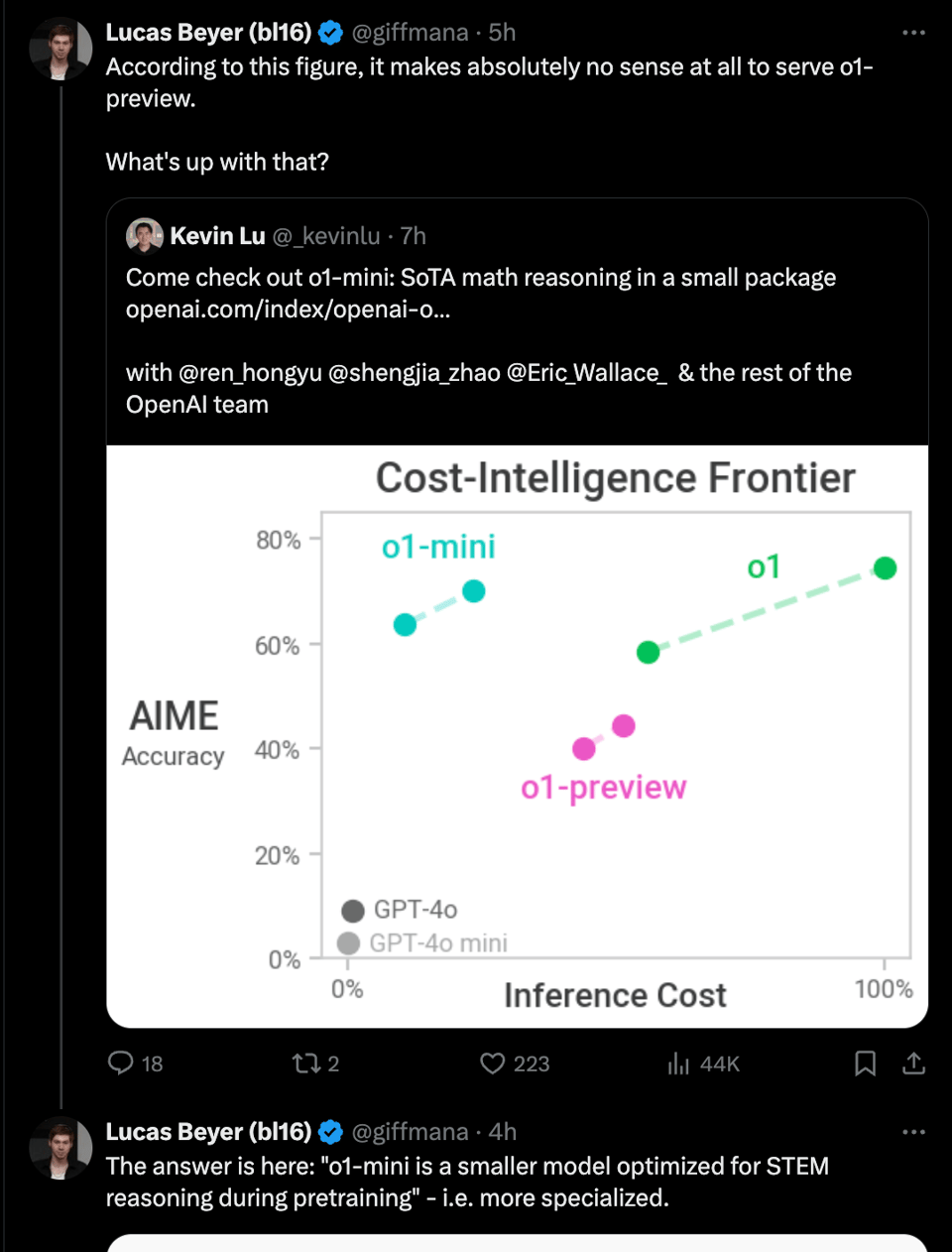

This section covers the PLANSEARCH algorithm and OpenAI's o1 models. PLANSEARCH has shown significant performance improvements for LLM-based code generation: it explores diverse ideas to counter the lack of diversity in LLM outputs, resulting in more efficient code generation. Separately, the rollout of OpenAI's o1 models introduces improved reasoning capabilities aimed at solving complex tasks in areas like science, coding, and math. The models are designed to spend more time thinking before responding, and developers get API access for integration. User reviews of o1 are mixed, particularly when its performance is compared with previous models like GPT-4o. The community is debating the practicality and creative abilities of the new models and sees a need for improvement before they meet the desired standards. Discussions also covered API access, model performance, and user satisfaction with the new models.

AI and ML Discussions

This section provides insights into various discussions related to AI and machine learning within the HuggingFace and Modular (Mojo) communities:

- General Reasoning Engines: Users explore the need for general reasoning engines capable of solving syllogisms and applying logic beyond specific domains like math, discussing the potential use of LLMs in conjunction with reasoning engines.

- Revolutionizing CLI Tools with Ophrase and Oproof: An article delves into how tools like Ophrase and Oproof are transforming command-line interfaces, highlighting their effectiveness in simplifying tasks.

- A New Persian Dataset Launch: The introduction of a Persian dataset containing translations of 6K sentences from Wikipedia aims to enhance Persian language modeling and access to diverse language resources.

- Improving Performance with Arena Learning: Insights are shared on how Arena Learning enhances performance in post-training scenarios, discussing techniques that lead to significant improvements in model outcomes.

- Fine-Tuning LLMs on Kubernetes: A comprehensive guide details the process of fine-tuning LLMs using Kubernetes and leveraging Intel® Gaudi® Accelerator technology, serving as a practical resource for developers optimizing model training workflows.

These discussions highlight a range of topics from new dataset introductions to performance improvement strategies, showcasing the diverse interests and ongoing developments in the AI and machine learning communities.

Amazon Multilingual Dataset and NLP Tasks

- The blog post discusses the Amazon Multilingual Counterfactual Dataset (AMCD) used for counterfactual detection in NLP tasks.

- The dataset contains annotated sentences from Amazon reviews to identify hypothetical scenarios in user feedback.
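To make the task concrete, here is a toy sketch of counterfactual detection as a yes/no decision per sentence. It is a keyword heuristic written for illustration only, not the method used to build or evaluate AMCD. The cue phrases, the function name, and the sample reviews are all invented assumptions.

```python
import re

# Hypothetical cue phrases chosen for illustration; not taken from the dataset.
CUE_PATTERNS = [
    r"\bi wish\b",
    r"\bif only\b",
    r"\b(would|could|should) have\b",
    r"\bif (it|this|they) (had|were)\b",
]
_CUES = re.compile("|".join(CUE_PATTERNS), re.IGNORECASE)


def looks_counterfactual(sentence: str) -> bool:
    """Return True if the sentence contains one of the cue phrases."""
    return _CUES.search(sentence) is not None


# Invented example reviews.
reviews = [
    "I wish the strap were longer.",
    "The strap is long and sturdy.",
    "It would have been perfect if it had a zipper.",
]
flags = [looks_counterfactual(r) for r in reviews]
```

A heuristic like this misses counterfactuals that use none of the listed cues and can flag non-counterfactual uses of them, which is part of why annotated data is useful for this task.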

Developer Collaborations and Project Support

A user inquires about the Llamafile project on GitHub and receives confirmation that it works as intended. Another member seeks a developer from the US or Europe for collaborative projects, emphasizing meeting deadlines and integrity. In another discussion, members explore fine-tuning Gemma with Alpaca and using Unsloth for Llama 3.1. They also tackle serving quantized models with vLLM, dataset formatting issues, and choosing learning rate schedulers for fine-tuning. Feedback and guidance are shared to address these technical challenges.
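The recap does not say which learning rate schedulers were discussed. As one commonly used option for fine-tuning, the sketch below implements linear warmup followed by cosine decay in plain Python. The function name, parameter names, and the example values (100 steps, 10 warmup steps, peak rate 2e-4) are illustrative assumptions, not recommendations from the discussion.

```python
import math


def cosine_with_warmup(step: int, total_steps: int, warmup_steps: int,
                       peak_lr: float, min_lr: float = 0.0) -> float:
    """Learning rate at a given step: linear warmup, then cosine decay."""
    if step < warmup_steps:
        # Ramp linearly up to peak_lr over the warmup phase.
        return peak_lr * (step + 1) / warmup_steps
    # Fraction of the decay phase completed, clamped to [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    progress = min(progress, 1.0)
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return min_lr + (peak_lr - min_lr) * cosine


# Illustrative values only.
schedule = [cosine_with_warmup(s, 100, 10, 2e-4) for s in range(101)]
```

The rate rises during the first 10 steps, peaks at `peak_lr`, and then decays smoothly toward `min_lr` by the final step.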

TorchAO and Challenges

Several challenges and limitations were discussed regarding TorchAO, focusing on its incompatibility with compiler mode and its primarily CPU model with convolutional architecture. There were also inquiries about the availability of documentation and resources for TorchAO, particularly concerning CUDA operations with different input types. Members highlighted issues with linear operations initialization and the need for further clarification on certain functionalities. Additionally, advanced quantization techniques were explored to optimize memory footprint, suggesting potential solutions for efficient quantization with different data types.
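As background for the memory-footprint point, the sketch below shows basic symmetric int8 quantization in plain Python. It does not use TorchAO or reproduce the specific techniques from the discussion. The function names and sample weights are hypothetical, and real libraries typically use per-channel or per-group scales rather than the single scale used here.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max((abs(v) for v in values), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize_int8(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate floats from the integers and the scale."""
    return [q * scale for q in quantized]


# Invented sample weights.
weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8(weights)
restored = dequantize_int8(q, scale)
```

Stored as int8, each value takes one byte instead of the four used by float32, at the cost of a rounding error of at most half the scale per value.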

Eleuther: Contributions and Transitions

A member in Eleuther shared insights on contributing to ongoing projects by suggesting opening an issue followed by a PR for contributions. Additionally, discussions involved transitioning from a PhD to a postdoc role. There was also a mention of encountering an error with sqlitedict within a virtual environment.

Transition to Postdoc and Module Error

A member shared their excitement about finishing their PhD in Germany and moving on to a postdoc focused on safety and multi-agent systems. They also mentioned enjoying hobbies like playing chess and table tennis. Another member reported that the 'sqlitedict' module could not be found even though they were working in a virtual environment, and was advised to seek solutions in the appropriate channel. The Eleuther research channel discussed the performance of the RWKV-7 model with extreme CoT, the underwhelming results of Pixtral 12B compared to Qwen 2 7B VL, the announcement of the Transfusion in PyTorch project, the teaser of OpenAI's new o1 model series, and model improvements and functions, including forking and joining states in RNN-style models.
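The recap does not say what caused the 'sqlitedict' error. One common cause of a module not being found inside a virtual environment is that the package was installed for a different interpreter than the one running the code. Assuming that scenario, the following diagnostic sketch reports which interpreter is active and whether the module is importable from it. The helper names are hypothetical.

```python
import importlib.util
import sys


def in_virtualenv() -> bool:
    # In a venv, sys.prefix points at the environment while
    # sys.base_prefix points at the base Python installation.
    return sys.prefix != sys.base_prefix


def module_available(name: str) -> bool:
    # find_spec returns None when a top-level module cannot be located.
    return importlib.util.find_spec(name) is not None


report = {
    "interpreter": sys.executable,
    "in_virtualenv": in_virtualenv(),
    "sqlitedict_found": module_available("sqlitedict"),
}
```

If `sqlitedict_found` is false, installing the package with the interpreter shown in `interpreter` (for example via `python -m pip install sqlitedict` from the activated environment) keeps the install and the runtime in the same place.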

Challenges and Discussions in AI Communities

Across various AI communities, topics ranged from skepticism that new OpenAI releases are marketing strategies to discussions in communities like LAION, LangChain AI, and MLOps @Chipro. Members expressed concerns about code duplication, chat template features, and LLM monitoring setups. There were also mentions of new tools like the HTML Chunking Package and Flux by RenderNet. Issues of community engagement and AI model performance were highlighted, underscoring the ongoing conversations and challenges in these communities.

FAQ

Q: What is the PLANSEARCH algorithm and how does it impact LLM-based code generation?

A: The PLANSEARCH algorithm enhances LLM-based code generation by identifying diverse plans, thus improving efficiency and performance across benchmarks.

Q: What advancements does the Dolphin architecture bring in terms of efficient context processing?

A: The Dolphin architecture introduces a 0.5B-parameter decoder that promotes energy efficiency for long context processing and reduces latency 5-fold. Empirical results also show a 10-fold improvement in energy efficiency.

Q: What were the key highlights of the recent discussions in the AI communities regarding AI model improvements by OpenAI?

A: The discussions highlighted the introduction of the PLANSEARCH algorithm for better code generation, the launch of OpenAI's o1 models with improved reasoning capabilities in various domains, and the community's mixed reviews on the performance of new models like o1 compared to previous models.

Q: What are some of the key topics discussed in the HuggingFace and Modular (Mojo) communities related to AI and machine learning?

A: Discussions in these communities touched on the need for general reasoning engines, the revolutionizing effect of tools like Ophrase and Oproof in CLI interfaces, the launch of a new Persian dataset for language modeling, techniques for performance enhancement through Arena Learning, and a guide on fine-tuning LLMs using Kubernetes and Intel® Gaudi® Accelerator technology.

Q: What was the Amazon Multilingual Counterfactual Dataset (AMCD) introduced for, and what does it contain?

A: The AMCD was used for counterfactual detection in NLP tasks, containing annotated sentences from Amazon reviews to identify hypothetical scenarios in user feedback.
