[AINews] o3-mini launches, OpenAI on "wrong side of history" • Buttondown

Chapters

AI Twitter Recap

Hardware, Infrastructure, and Scaling

Debate Over DeepSeek's Open-Source Model and Chinese Origins

User Reactions and Feedback on AI Models

Deep Learning & AI Updates in Various Discords

Unsloth AI (Daniel Han) Help

AI Conversations and Discussions

Perplexity AI ▷ #pplx-api (2 messages)

Interconnects (Nathan Lambert) AI Discussions

Deep Learning Model Discussions

Decentralized Training, Crypto and Performance Comparison

Eleuther & GPU Interpretability

Reasoning Gym, Game Development, Feedback, and Dependency Management

Meetup with Arize AI and Groq, LlamaReport beta release, o3-mini support

AI Twitter Recap

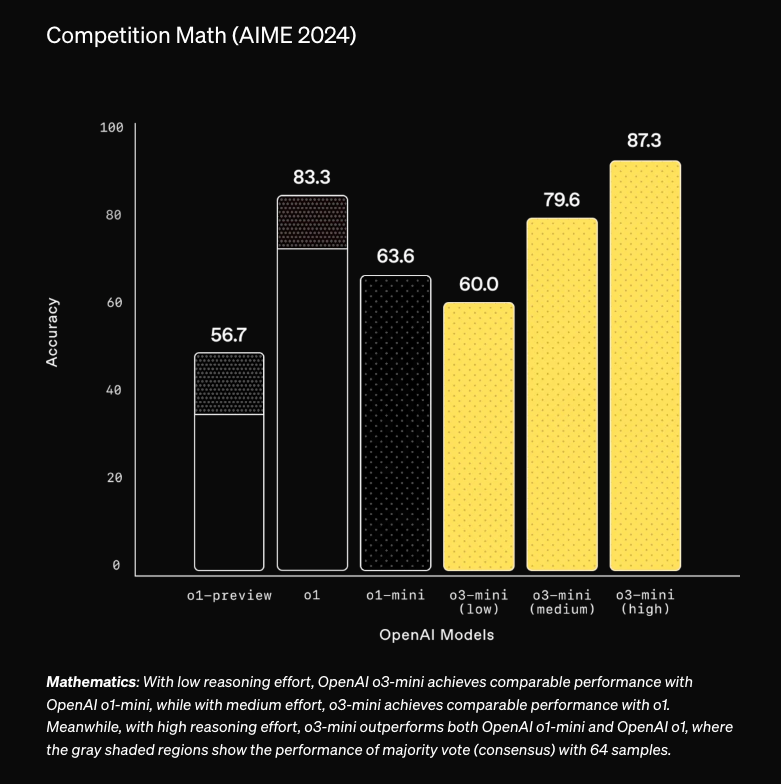

The AI Twitter recap covers recent model releases and their performance. OpenAI's o3-mini is highlighted, with emphasis on its science, math, and coding capabilities. Mistral AI released Mistral Small 3 (24B), and Text-generation-inference v3.1.0 now supports DeepSeek R1. Allen AI introduced Tülu 3 405B, and Qwen 2.5 models were added to the PocketPal app. Other releases include Virtuoso-medium from arcee_ai and Velvet-14B, a family of Italian LLMs. The recap also compares the models' features and performance and notes which platforms each is available on.

Hardware, Infrastructure, and Scaling

DeepSeek reportedly has over 50,000 GPUs, including H100s, H800s, and H20s acquired before export controls took effect. Its reported infrastructure investments include $1.3B in server CapEx and $715M in operating costs, and it may subsidize inference pricing by 50% to gain market share. It relies on Multi-head Latent Attention (MLA), Multi-Token Prediction, and Mixture-of-Experts for efficiency.

On the hardware side, some argued that the 32GB of VRAM on the Nvidia RTX 5090 is inadequate and suggested that GPUs with much larger capacities (128GB, 256GB, 512GB, or 1024GB) could challenge Nvidia's dominance. OpenAI's first full 8-rack GB200 NVL72 is now operational in Azure, showcasing its compute scaling. A change to GRPO in TRL that cuts VRAM usage by 60-70% is expected soon. Google DeepMind published a distributed training paper that reduces the number of parameters that must be synchronized, quantizes gradient updates, and overlaps computation with communication, achieving similar performance while exchanging 400x fewer bits. Finally, some observed that training models on reasoning data may harm instruction following.
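The DeepMind paper's exact scheme is not described in this recap. As a rough illustration of two of the ideas it names, the sketch below quantizes a parameter update to low-bit integers with a single per-tensor scale, then counts the bits exchanged when only a fraction of the parameters is synced per round. The function names, the symmetric per-tensor scheme, and the example numbers are assumptions for illustration, not the paper's method.

```python
from typing import List, Tuple


def quantize(update: List[float], bits: int) -> Tuple[List[int], float]:
    """Symmetric uniform quantization of a parameter update to signed `bits`-bit ints.

    Assumption: one scale per tensor; real schemes may use per-block scales.
    """
    qmax = 2 ** (bits - 1) - 1  # e.g. 7 for 4-bit signed values
    max_abs = max((abs(x) for x in update), default=0.0)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round each value to the nearest level and clamp into the representable range.
    q = [max(-qmax, min(qmax, round(x / scale))) for x in update]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    """Map the integers back to approximate float values on the receiving side."""
    return [v * scale for v in q]


def bits_exchanged(num_params: int, bits_per_value: int, fraction_synced: float) -> float:
    """Bits sent per sync round if only `fraction_synced` of the parameters is shared."""
    return num_params * fraction_synced * bits_per_value


if __name__ == "__main__":
    delta = [0.12, -0.5, 0.03, 0.25, -0.07]
    q, scale = quantize(delta, bits=4)
    approx = dequantize(q, scale)
    print(q, [round(a, 3) for a in approx])

    # Hypothetical comparison: full fp32 sync vs. 4-bit values for 1/4 of the parameters.
    full = bits_exchanged(1_000_000, 32, 1.0)
    reduced = bits_exchanged(1_000_000, 4, 0.25)
    print(f"{full / reduced:.0f}x fewer bits per round")  # 32 / (4 * 0.25) = 32
```

Each dequantized value is within half a quantization step of the original, so the receiver gets a slightly noisy copy of the update in exchange for far less traffic; how the paper combines these ideas to reach 400x is beyond this sketch.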

Debate Over DeepSeek's Open-Source Model and Chinese Origins

The discussion covers the performance of DeepSeek R1, the security concerns around it, and its positive impact. It highlights the difficulties users face running the model locally, the DeepSeek database leak, and advances in hosting options such as Cerebras. It also examines how DeepSeek R1 integrates with tools like Aider and Cursor IDE, opening new possibilities for code generation and reasoning tasks.

User Reactions and Feedback on AI Models

Users across several Discord communities shared mixed reactions to different AI models. Some praised o3-mini's functionality, while others found DeepSeek more efficient for coding tasks. Discussions also covered cost-effectiveness, performance comparisons, and where the field is heading, and members explored new AI techniques, debated model efficiency, and shared experiences with different AI tools.

Deep Learning & AI Updates in Various Discords

Discussions across Discord channels covered a wide range of deep learning and AI topics. Highlights include the Psyche project for decentralized training, debate over using blockchain for verification, comparisons between models such as o3-mini and Sonnet, and autoregressive generation with CLIP embeddings. Other topics ranged from innovative hiring practices in the tech industry to RTX 50 series GPUs vanishing from stores soon after launch.

Unsloth AI (Daniel Han) Help

Discussion on Help Channel:

- Running DeepSeek R1 with Ollama: Users tried running DeepSeek R1, specifically the 1.58-bit version, through Ollama (a wrapper around llama.cpp) with mixed success. Reported issues included errors when starting the model and performance bottlenecks, prompting suggestions to run it directly with llama.cpp's llama-server instead.

- Finetuning Techniques and Learning Rate: Members suggested starting fine-tuning learning rates around 1e-5 or 1e-6 and adjusting for dataset size. They recommended checking results and evaluation metrics after training for a sufficient number of epochs to judge whether the learning rate is working.

- Integration Issues with AI Frameworks: Concerns about the latency of Ollama's API for local LLMs prompted discussion of alternatives such as OpenWebUI for better performance. Users were advised about the limitations and challenges of integrating local LLMs into their applications.

- Memory and Performance Challenges: Users shared experiences with memory constraints when fine-tuning large models and with the impact of disk speed on inference rates. Recommendations included optimizing storage and using offloading strategies to improve performance.

- Current Limitations in Model Support: Unsloth does not currently support multi-GPU fine-tuning, as the team is focusing on supporting all models first. This matters for users who need more memory than a single GPU provides to fine-tune larger models.
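The memory issues above come down to simple arithmetic. A minimal sketch, assuming weights dominate memory use and all layers are the same size: DeepSeek R1's 671B parameters at an average of 1.58 bits each come to roughly 132 GB, far more than a single 32GB card, so runtimes like llama.cpp place only some layers on the GPU (via `--n-gpu-layers`) and leave the rest in system RAM or on disk, one reason disk speed can affect inference rates. The 61-layer count and the reserve for KV cache and activations below are illustrative assumptions, and real dynamic quants mix bit-widths, so actual file sizes differ.

```python
def weights_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate size of the weights alone in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


def layers_on_gpu(vram_gb: float, model_gb: float, n_layers: int, reserve_gb: float = 0.0) -> int:
    """Estimate how many layers fit in VRAM, assuming equally sized layers.

    `reserve_gb` is a hypothetical allowance for KV cache, activations, and buffers.
    """
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - reserve_gb, 0.0)
    return min(n_layers, int(usable_gb // per_layer_gb))


if __name__ == "__main__":
    r1_gb = weights_gb(671e9, 1.58)  # 671e9 params * 1.58 bits / 8 bits per byte
    print(f"~{r1_gb:.1f} GB of weights")
    # Assumed: 61 layers, a 32 GB card, and 4 GB held back for the KV cache etc.
    print(layers_on_gpu(32, r1_gb, n_layers=61, reserve_gb=4), "layers on the GPU")
```

Under these assumptions only about a fifth of the layers fit on a 32GB card, which is why the remaining layers' memory and storage speed dominate throughput.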

AI Conversations and Discussions

Discussions around o3-mini, R1, and o1 focused on how their performance differs. Users tested o3-mini, comparing its limits and performance with existing models. Perplexity AI users shared frustrations with the app's model settings and features. Other topics included the AI Prescription Bill, which proposes regulating AI use in healthcare; a TB outbreak in Kansas; Satya Nadella predicting the 'Jevons Paradox' for AI; an asteroid that may carry the seeds of life; and exploration of the Harvard Dataset.

Perplexity AI ▷ #pplx-api (2 messages)

Sonar Reasoning struggles with context:

A member pointed out that Sonar Reasoning handles specific queries poorly, using a plane crash over the Potomac as an example: the model returned technically correct information, but about the 1982 incident rather than the recent crash.

Recent vs Historical Data Confusion:

The discussion highlighted that Sonar Reasoning can surface outdated information, which can cause confusion when timeliness matters. The member emphasized that although the older data was accurate, it did not meet the user's immediate need in a time-sensitive situation.

Interconnects (Nathan Lambert) AI Discussions

In the AI discussions, users covered a range of models and tools. There was confusion over the message limits for o3-mini-high and o3-mini, with some suspecting a bug because the limits had not been mentioned before. Users compared Claude 3.6 Sonnet, DeepSeek R1, and o1 on tasks such as coding, noting that o3-mini has trouble following instructions. Concerns were raised about the reliability of AI detection tools and their impact on students. Users also discussed DeepSeek's capabilities and its ability to compete with bigger companies, with some impressed by its performance as an open-source model. Finally, there was speculation about how reasoning models like o1 use Chain of Thought (CoT) to improve performance, and curiosity about whether making the CoT visible would help users understand how their queries are interpreted.

Deep Learning Model Discussions

This section covers discussions of various deep learning models and their limitations. Users reported that the vision model could not distinguish between the ground and lines, likening it to needing 'new glasses'. The limitations of models like GPT-4o were also highlighted, particularly in telling visual elements apart. The release of o3-mini drew mixed reactions, with some users finding its performance disappointing compared with previous models. Discussions also touched on market trends among AI models, focusing on DeepSeek's impact on pricing.

Decentralized Training, Crypto and Performance Comparison

This section covers the Psyche project, which aims to coordinate decentralized training, skepticism about Nous's involvement with crypto, the performance of o3-mini versus Sonnet, and community sentiment on AI and crypto. Topics included using blockchain to verify training, concerns about scams in the crypto space, how effective smaller models are compared with larger ones, and a desire to see blockchain have a positive impact. The o3-mini and Sonnet comparison emphasized speed and ease of use. Links to related content and community discussions were also shared.

Eleuther & GPU Interpretability

The section covers research on superhuman reasoning, the discovery of a backtracking vector, autoregressive models, and inefficiencies in neural network training. Members discussed critiques of training metrics for language models and alternatives to them, the balance between generalization and memorization, and inefficiencies in practical training methods. The community also explored the potential of random-order autoregressive models and the importance of developing more efficient training strategies. Links to relevant papers and tweets were shared for further reading.
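Random-order autoregressive models factorize a sequence along a sampled permutation instead of strictly left to right. A minimal sketch, assuming a plain token list and a hypothetical helper name: each training example asks the model to predict the token at the next position in the permutation, given the tokens already revealed together with their positions (positions must be explicit because the order no longer implies them). It only builds training targets and is not any specific paper's implementation.

```python
import random
from typing import List, Optional, Tuple

# (revealed context as (position, token) pairs, target position, target token)
Example = Tuple[List[Tuple[int, str]], int, str]


def random_order_examples(
    tokens: List[str], seed: int = 0, order: Optional[List[int]] = None
) -> List[Example]:
    """Build next-token targets along a permutation of positions (hypothetical helper)."""
    if order is None:
        # Sample a generation order; a fixed seed keeps it reproducible.
        order = list(range(len(tokens)))
        random.Random(seed).shuffle(order)
    examples: List[Example] = []
    for t, pos in enumerate(order):
        # Everything earlier in the order is visible, tagged with its true position.
        context = [(p, tokens[p]) for p in order[:t]]
        examples.append((context, pos, tokens[pos]))
    return examples


if __name__ == "__main__":
    for context, pos, tok in random_order_examples(["the", "cat", "sat"], seed=0):
        print(f"given {context} -> predict position {pos}: {tok!r}")
```

Passing `order=list(range(len(tokens)))` recovers ordinary left-to-right next-token prediction, which shows the standard setup is one special case of this factorization.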

Reasoning Gym, Game Development, Feedback, and Dependency Management

The Reasoning Gym gallery now features 33 datasets. Members received feedback on their dataset generation proposals and were encouraged to open issues for their ideas. Discussions on game development and algorithmic challenges highlighted the need for faster answer validation and interest in building real multi-turn games. On game design and performance, a member shared insights from porting a game, and others were encouraged to share concepts for feedback. On dependency management, members considered adding Z3Py as a dependency for simplification, with the assurance that new dependencies will be considered if they bring sufficient benefit.
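The point about faster answer validation is easiest to see with a procedurally generated task, where each entry carries its own ground truth so grading is a cheap comparison. A minimal sketch: `Entry`, `generate_entry`, and `score_answer` are hypothetical names chosen for illustration and are not Reasoning Gym's actual API.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Entry:
    """One generated question together with its ground-truth answer."""
    question: str
    answer: int


def generate_entry(seed: int) -> Entry:
    """Deterministically build an arithmetic question from a seed (hypothetical task)."""
    rng = random.Random(seed)
    a, b, c = rng.randint(1, 99), rng.randint(1, 99), rng.randint(2, 9)
    return Entry(question=f"What is ({a} + {b}) * {c}?", answer=(a + b) * c)


def score_answer(candidate: str, entry: Entry) -> float:
    """Grade by exact integer match; unparseable answers score 0."""
    try:
        return 1.0 if int(candidate.strip()) == entry.answer else 0.0
    except ValueError:
        return 0.0


if __name__ == "__main__":
    entry = generate_entry(seed=7)
    print(entry.question)
    print(score_answer(str(entry.answer), entry))  # a correct answer scores 1.0
```

Because the same seed always yields the same entry, a dataset can be regenerated on demand instead of stored, and grading costs a single integer comparison.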

Meetup with Arize AI and Groq, LlamaReport beta release, o3-mini support

Three messages from LlamaIndex's blog channel were highlighted. The first covered a meetup with Arize AI and Groq about agents and tracing, featuring live demos using Arize's Phoenix. The second announced the LlamaReport beta and shared a video demonstrating features slated for a 2025 release. The last announced immediate support for o3-mini, with a pip install command for installation and further details in a Twitter announcement.

FAQ

Q: What AI models were highlighted in the AI Twitter recap section?

A: The AI Twitter recap highlighted OpenAI's o3-mini, Mistral AI's Mistral Small 3 (24B), DeepSeek R1 (now supported by Text-generation-inference v3.1.0), Allen AI's Tülu 3 405B, Qwen 2.5 models in the PocketPal app, arcee_ai's Virtuoso-medium, and the Velvet-14B family of Italian LLMs.

Q: What are some of the technologies used by DeepSeek to drive efficiency in their model?

A: DeepSeek uses technologies like Multi-head Latent Attention (MLA), Multi-Token Prediction, and Mixture-of-Experts to drive efficiency in their model.

Q: What concerns were raised regarding the VRAM capacity of the Nvidia RTX 5090?

A: Concerns were raised about the inadequacy of the 32GB VRAM capacity in the Nvidia RTX 5090, with suggestions that GPUs with larger VRAM capacities (128GB, 256GB, 512GB, or 1024GB) could challenge Nvidia's dominance.

Q: What significant infrastructure investments did DeepSeek make?

A: DeepSeek's reported infrastructure investments include $1.3B in server CapEx and $715M in operating costs.

Q: What are the current challenges faced by users in running the DeepSeek R1 model locally?

A: Users running the 1.58-bit DeepSeek R1 through Ollama reported errors when starting the model and performance bottlenecks, prompting suggestions to run it directly with llama-server. Memory constraints and the impact of disk speed on inference rates were also raised.
