[AINews] Skyfall • ButtondownTwitterTwitter

Chapters

AI Discord Recap

Open-Source Datasets and Model Development

OpenInterpreter Discord

Discord Channel Discussions

Unsloth AI & HuggingFace Messages

AI Projects and Discussions

Perplexity AI and OpenAI Discussions

LM Studio Chat Discussions: Models, Troubleshooting, and Setup

Mojo Programming Language Discussions

Handling Nightly and Stable Releases

Modal Sponsors and Discussions

AI Discussions on Nous Research Discord

Collaborative Efforts in Bitnet Group

Challenges and Discussions on Large Language Models and AI Tasks

Interconnected Discussions on Various Topics

AI Stack Devs - AI Town Dev

Issues and Fine-Tuning Feedback

Improving Support System and Rate Limit Issues

Conversations on Various AI Topics

AI Discord Recap

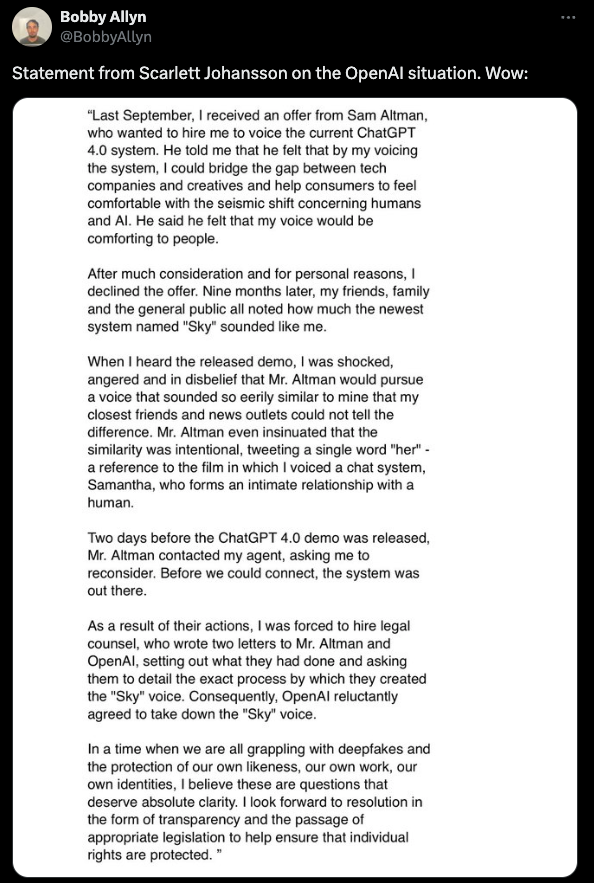

A summary of the latest discussions on AI-related topics in various Discord channels. The discussions include advancements and challenges in LLM Fine-Tuning, debates on the LLM Fine-Tuning course, discussions on LoRA fine-tuning techniques, and debates on optimal configurations and preventing overfitting. Additionally, the summary covers discussions on Hugging Face's commitment to providing free GPUs for AI technology development, the Chameleon model's performance in generating images and text, and the ongoing debate on Scarlett Johansson suing OpenAI for the use of her voice in GPT-4o. Furthermore, the summary briefly mentions BlackRock's investments in Europe to meet AI power needs and the privacy concerns related to AI-generated erotica.

Open-Source Datasets and Model Development

Frustrations were expressed over the restrictive non-commercial license of the CommonCanvas dataset. Efforts were made to create high-quality open-source datasets to avoid hallucinations in captions damaging visual language models (VLLMs) and text-to-image (T2I) models. The Sakuga-42M dataset introduced the first large-scale cartoon animation dataset, addressing a gap in cartoon-specific training data. Concerns arose over the CogVLM2 license restricting use against China's interests and requiring Chinese jurisdiction for disputes.

OpenInterpreter Discord

Hugging Face is donating $10 million in free shared GPU resources to small developers, academics, and startups. The initiative leverages their financial standing and recent investments, supporting AI development and research endeavors. This move aims to provide access to valuable resources, fostering innovation and collaboration within the community.

Discord Channel Discussions

This section highlights various discussions and topics across different Discord AI channels. From debugging issues with the RAG model and GPU-enabled cloud services in the Mozilla AI Discord, to the fine-tuning frenzy in the MLOps @Chipro Discord, and YOLO model speed discussions in the tinygrad (George Hotz) Discord, there are diverse conversations encompassing technical challenges, advanced model deployment strategies, and AI model performance evaluations. The Unsloth AI Discord also covers a range of topics from Python text formatting and regex usage to criticisms of AI leaders like Sam Altman and OpenAI, signaling ongoing debates about AI ethics, legal concerns, and content recommendations shared among community members.

Unsloth AI & HuggingFace Messages

Unsloth AI (Daniel Han) ▷ #showcase (22 messages🔥): - Text2Cypher model finetuned: A member finetuned a Text2Cypher model (a query language for graph databases) using Unsloth. They shared a LinkedIn post praising the ease and the gguf versions produced. - New article on sentiment analysis: A member published an extensive article on fine-tuning LLaMA 3 8b for sentiment analysis using Unsloth, with code and guidelines. They shared the article on Medium. - Critical data sampling bug in Kolibrify: A significant bug was found in Kolibrify's data sampling process. A fix that theoretically improves training results will be released next week, and retraining is already ongoing to evaluate effectiveness. - Issue in curriculum dataset handling: The curriculum data generator was ineffective due to using datasets.Dataset.from_generator instead of datasets.IterableDataset.from_generator. A member overhauled their pipeline, matched dolphin-mistral-2.6's performance using only ~20k samples, and plans to publish the model soon.

AI Projects and Discussions

Among the projects and discussions highlighted in this section, members shared a YouTube video showcasing a business advisor AI project using Langchain and Gemini technologies. Another user introduced a study companion program with GenAI for innovative educational experiences. Additionally, new models training support was added to SimpleTuner and SDXL Flash models were rolled out for improved AI model performance. In the realm of NLP, users discussed topics ranging from Connectionist Temporal Classification relevance to handling conversation history in LLM-based bots.

Perplexity AI and OpenAI Discussions

The section discusses various issues and queries related to Perplexity AI and OpenAI discussions on Discord. Users talked about the limitations of GPT-4o in handling conversations effectively, the desire for new features like uploading images and videos, and concerns about API rate limits. Additionally, there were discussions on model switching, downtime frustrations, and user experiences with different models. The content also touches on LM Studio challenges, including unsupported architecture errors and running models on systems with limited VRAM.

LM Studio Chat Discussions: Models, Troubleshooting, and Setup

Medical LLM Recommendation

A member inquired about medical LLMs, and another suggested trying OpenBioLLM-Llama3-8B-GGUF. They noted its 8.03B parameters and Lama architecture. Additional resources were shared.

SVG and ASCII Art Benchmarks

A member shared LLM benchmarking results for generating SVG art, with mentions of top models like WizardLM2 and GPT-4. ASCII art capabilities were also discussed.

Embedding Models for German

A discussion on finding suitable embedding models for German suggested manual conversion using tools like llama.cpp and mentioned a specific multilingual model for potential conversion.

Generating Text-based Art

A user shared using the MPT-7b-WizardLM model for generating uncensored stories. Questions on configuration and prompt settings were answered by the model's creator.

Image Quality Concerns

A brief discussion highlighted using tools like automatic1111 and ComfyUI for better image generation control. High-quality models from Civit.ai were recommended, with a caution about NSFW content.

Mojo Programming Language Discussions

Mojo Programming Language Discussions

-

Mojo on Windows Woes: Users expressed concerns about the lack of direct support for Mojo on Windows, with future support anticipated through WSL.

-

Mojo vs. Bend Programming Debate: Comparison between Mojo and Bend programming languages highlighted the performance focus of Mojo, contrasting with Bend's limitations.

-

Community Engagement and Resources: Excitement around upcoming community meetings and shared resources, fostering a playful and engaged spirit within the community.

-

Fun with Mojo Syntax: Users experimented with creating whimsical Mojo code using emojis and backticks, sparking humorous exchanges.

These discussions continue to showcase the diverse interests and engagement within the Mojo programming community.

Handling Nightly and Stable Releases

In this section, members of the Modular (Mojo 🔥) community discuss various topics related to the 'nightly' branch. Discussions include strategies for tackling PR DCO check failures, transitioning from 'nightly' to stable releases, struggles with segfaults and bugs, flaky tests and ongoing fixes, and alias issues leading to segfaults. Links to relevant resources are also shared, providing insights into these discussions.

Modal Sponsors and Discussions

Charles from Modal announced the sponsorship of the course and shared links to the getting started guide and a hello world example for running code in the cloud without infrastructure setup. The discussion revolved around creating Modal accounts via GitHub, editing email settings, and the $500 credits process. Members explored Modal feature queries, reported problems with onboarding and usage optimization, and engaged in community support discussions. Detailed responses, solutions, and recommendations were shared, including examples like embedding Wikipedia with Modal and TensorRT-LLM serving.

AI Discussions on Nous Research Discord

Regex for Formatted Text Search:

Discussions on using regex to handle finding text with specific formats, but complexities may require more advanced approaches like semantic search or symbolic language processing.

Tool Calling Issues with Hermes2 and Vercel AI SDK:

Members faced difficulties due to tool call triggering problems when using Vercel AI SDK with Hermes2, highlighting the need for specific prompt handling.

Local Model Advantages for Sensitive Tasks:

Using local models like Llama3 can be advantageous for tasks needing cost predictability, consistency, or sensitive data handling compared to external models like GPT or Claude.

Finetuning vs. Better Prompting:

Debates on whether it's more effective to fine-tune models like Llama3 for specific cases or rely on prompting and retrieval-augmented generation with models like GPT-4, stressing that specific use cases may dictate the approach.

Benchmarks for Rerankers:

Members are seeking public evaluation data to benchmark fine-tuned re-rankers accurately, necessitating clear methodologies and datasets for precise assessments.

Collaborative Efforts in Bitnet Group

Members of the Bitnet group engaged in discussions regarding various topics ranging from implementing uint2 data types in PyTorch to planning regular meetings for the group. Issues with uint4 data type in Torch were highlighted, with a focus on memory efficiency and the need for packing data types. The group also refined code examples for unpacking uint8 data to trinary values and discussed project management challenges in creating custom CUDA extensions and data type creation. Overall, the group emphasized collaborative efforts and adherence to best practices for efficient development.

Challenges and Discussions on Large Language Models and AI Tasks

In this section, there are discussions on the disconnect between tokenizer creation and model training in language models, the creation of a joint embedding space for different modalities, the development of a single generalist agent beyond text outputs, and the proposal of an embodied multimodal language model for real-world applications. Additionally, there are debates on FLOP calculations, sample efficiency metrics, theoretical questions on compute efficiency, and the significance of scaling laws in AI tasks. Furthermore, insights on model evaluation speed optimization, AI safety, and benchmark events are shared, along with discussions on AI ethics, model performances, and challenges faced by research teams.

Interconnected Discussions on Various Topics

Member shared results from tests on ORPO, expressing that it seemed okay but not great due to combining SFT with margin-based loss. Criticism of Chamath Palihapitiya for promoting SPACs and enjoyment over All In Podcast hosts' failures was highlighted. There were discussions on automating OnlyFans DMs and an episode on OpenAI developments. Other topics included vocab size scaling laws, hysteresis in control theory, and AI music generation interest. The section also covered LlamaIndex discussions on data governance, embeddings, and model configuration, as well as challenges with multi-agents and tools.

AI Stack Devs - AI Town Dev

Members in the AI Town Dev channel are actively discussing technical details related to the AI Town platform. They are troubleshooting errors, sharing ways to save and extract conversations, and suggesting integrating world context into character prompts for a richer narrative experience. There are also discussions about the tech stack being used, including Convex for the backend, JS/TS for app logic, Pixi.js for graphics, and Clerk for authentication. Additionally, they are exploring the possibility of using a web app to dump SQLite files from AI Town and the potential benefits of adding context directly into character prompts.

Issues and Fine-Tuning Feedback

Discussed a problem where auto top-up payments were declined, resulting in a user’s credits falling below allowable limits and being unable to manually top-up. The issue was identified as likely being blocked by the user's bank (WISE EUROPE SA/NV).

-

Model Recommendations and Fine-Tuning Feedback: Users shared their experiences with various models, with mentions of “Cat-LLaMA-3-70B”, Midnight-Miqu models, and the need for better fine-tuning methods as opposed to "random uncleaned data" approaches. One user noted, "Try Cat-LLaMA-3-70B, it's very impressive when you actually manage to get it to work."

-

Wizard LM 8x22B Request Failure Issues: A user asked about frequent failures with Wizard LM 8x22B on OpenRouter, which were identified as temporary surges in request timeouts (408) from several providers.

Improving Support System and Rate Limit Issues

In the 'Cohere' Discord channel, members discussed improving the support system on Discord, noting long-standing issues with unanswered questions and clarifying that it operates more as a community-supported chat. Users also shared experiences with the Trial API, mentioning rate limit issues and confirming that free API keys are available but limited, mainly suitable for prototyping. Additionally, there were discussions about translating with CommandR+, hosting Cohere AI apps on platforms like Vercel, and distinguishing between portfolio and production use cases.

Conversations on Various AI Topics

This section covers diverse discussions on AI-related topics from different groups. The topics range from technical details like polynomial degree limits for sine approximation and bitshifting in tinygrad to more advanced AI concepts like optimizing GPT-4o for legal reasoning tasks and concerns about AI complexity and prompt injection. Additionally, there are talks on integrating advanced models with legacy systems and the importance of resilience in fault-tolerant systems.

FAQ

Q: What is LLM Fine-Tuning?

A: LLM Fine-Tuning refers to the process of fine-tuning Language Model(s) for specific tasks or domains to improve their performance and adaptability.

Q: What are some challenges in LLM Fine-Tuning?

A: Challenges in LLM Fine-Tuning may include data quality, overfitting, hyperparameter tuning, and selecting the optimal configuration to achieve desired results.

Q: What is the Sakuga-42M dataset?

A: The Sakuga-42M dataset is a large-scale cartoon animation dataset designed to address the need for cartoon-specific training data in AI models.

Q: Why is there frustration over the CommonCanvas dataset's restrictive non-commercial license?

A: Frustration over the CommonCanvas dataset's restrictive non-commercial license may stem from limitations it imposes on using the dataset for commercial purposes, hindering widespread use and innovation.

Q: What is the goal of Hugging Face's donation of free shared GPU resources?

A: Hugging Face's donation of free shared GPU resources aims to provide valuable GPU access to small developers, academics, and startups to support AI development and research efforts, fostering innovation and collaboration within the community.

Q: What are some of the discussions in the Mojo programming community?

A: Discussions in the Mojo programming community may include topics like support for Windows, comparing Mojo with other programming languages, community engagement, and creating whimsical code.

Q: What are the advantages of using local models like Llama3 for sensitive tasks?

A: Using local models like Llama3 for sensitive tasks can offer advantages such as cost predictability, consistent performance, and enhanced data security compared to relying on external models.

Q: What were some issues discussed regarding the integration of AI Town's tech stack?

A: Discussions regarding AI Town's tech stack may have covered topics like troubleshooting errors, saving and extracting conversations, integrating world context into character prompts, and exploring different technology components used within the platform.

Get your own AI Agent Today

Thousands of businesses worldwide are using Chaindesk Generative

AI platform.

Don't get left behind - start building your

own custom AI chatbot now!